Meta Title: How to Extract Data from Websites: A Developer's Guide

Meta Description: Learn how to extract data from websites using Python, Node.js, and APIs. This guide covers web scraping basics, JavaScript sites, and anti-bot bypass.

Learning how to extract data from websites is a crucial skill for modern developers, turning the web into a massive, queryable database. This process involves sending a request to a server, getting back an HTML document, and then parsing it to pull out the specific information you need. These fundamental steps form the basis for everything from simple scripts to large-scale data pipelines.

Table of Contents

- Why Extracting Web Data Still Matters

- Your First Steps in Manual Data Extraction

- Tackling JavaScript-Driven Websites

- Overcoming Anti-Bot Measures and Scaling Your Scrapers

- Structuring, Storing, and Using Your Data

- Frequently Asked Questions

- Next Steps

Why Extracting Web Data Still Matters

Extracting web data is no longer a niche skill; it’s essential for building intelligent, data-aware applications. The web is the largest public database in existence, powering market intelligence platforms, price monitoring engines, and the training datasets for modern AI models.

But the path from a webpage to a clean, structured dataset is challenging.

Websites have evolved beyond static HTML documents. Today, they are complex applications built with frameworks like React or Vue that load content dynamically with JavaScript. This means the valuable data you see in your browser often isn't in the initial HTML your script receives.

Caption: Web data extraction transforms unstructured web pages into structured, usable data via APIs. Source: CrawlKit

The Growing Demand for Web Data

This complexity hasn't slowed the demand for high-quality web data. The market for web scraping software is exploding, projected to reach USD 2.7 billion by 2035, according to a report by Prophecy Market Insights. This signals how critical web data has become for businesses seeking a competitive edge.

To give you a clearer picture, here’s a quick breakdown of the common methods for extracting data from websites.

Common Web Data Extraction Methods

This table outlines different approaches, highlighting their complexity and ideal use cases.

| Method | Best For | Complexity | Key Challenge |
|---|---|---|---|
| Manual Copy-Paste | One-off, small tasks | Very Low | Unscalable and error-prone. |
| HTTP Request Libraries | Static websites (no JavaScript) | Low | Fails on dynamic, JS-heavy sites. |
| Headless Browsers | JavaScript-heavy websites | Medium | Resource-intensive; easily detected. |
| Web Scraping APIs | Complex sites, scale, anti-bot | Low | Per-request cost and reliance on a third-party service. |

Each method has its place, but complexity ramps up quickly with modern web architecture.

From Manual Code to Managed APIs

This guide is a practical roadmap. We'll start with foundational, DIY methods for simple sites. Then, we'll level up to advanced techniques for handling JavaScript-heavy pages and bypassing anti-bot systems. Finally, we'll see how modern APIs can do the heavy lifting for you.

Developer-first platforms like CrawlKit are built to abstract away the messiest parts of web scraping. As an API-first web data platform, it lets you scrape sites, extract structured JSON, take screenshots, and even get specific LinkedIn or app review data without managing any scraping infrastructure. With proxies and anti-bot measures abstracted away, you can focus on the data itself, which is the whole point. There are countless web scraping use cases that show just how powerful this approach can be.

Your First Steps in Manual Data Extraction

Before tackling complex websites, you must master the fundamentals. Learning how to extract data from websites manually is the best way to understand what's happening under the hood. This hands-on approach builds the foundational knowledge you’ll lean on for every project.

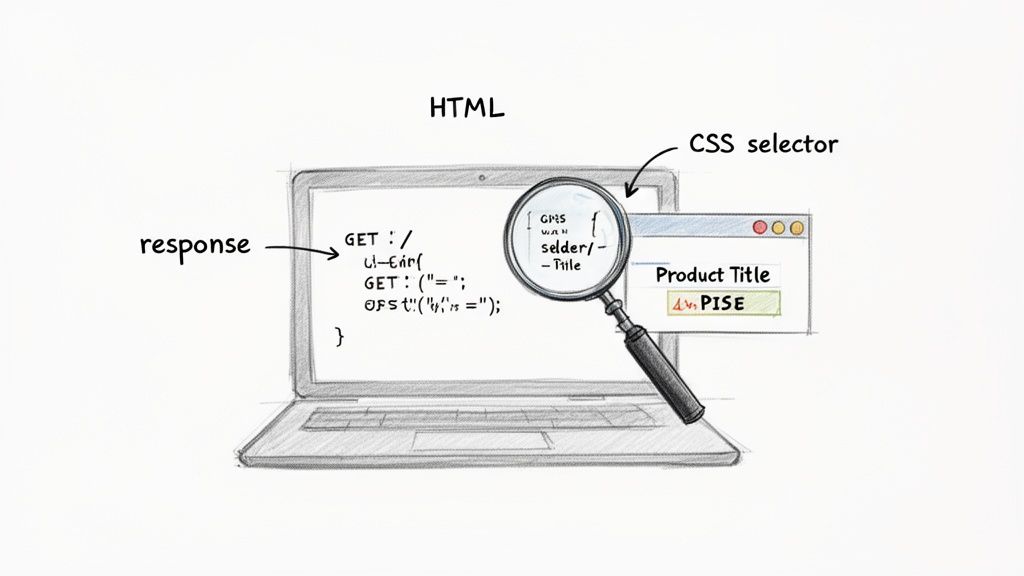

The process begins with a basic HTTP request. Think of it as asking a website for its public content. For this, developers typically use simple, powerful libraries.

Caption: Web scraping involves fetching HTML, identifying data with CSS selectors, and extracting it into a structured format. Source: CrawlKit

Making Your First HTTP Request

In Python, the requests library is the top choice for its simple API. For Node.js developers, axios serves the same purpose with a clean, promise-based interface. A single GET request is all it takes to pull down the raw HTML.

Here's a straightforward example in Python:

```python
import requests

url = "https://quotes.toscrape.com"
# A timeout keeps the script from hanging forever on an unresponsive server
response = requests.get(url, timeout=10)

if response.status_code == 200:
    print("Successfully fetched the page!")
    # The raw HTML is in response.text
    # print(response.text)
else:
    print(f"Failed to fetch page with status code: {response.status_code}")
```

This script sends a GET request to the target URL. A status code of 200 means success, and the page's HTML content is now available as response.text. This raw text is the starting point for data extraction. For tougher sites that block standard requests, you might need to get the raw HTML using a managed API instead.

Parsing HTML with CSS Selectors

With the HTML in hand, the next step is parsing it. Raw HTML is a messy nest of tags, making it difficult to work with as plain text. This is where HTML parsing libraries are essential.

- BeautifulSoup (Python): Forgiving and intuitive, it transforms messy HTML into a clean, navigable object tree.

- Cheerio (Node.js): A fast, lean, server-side implementation of core jQuery. If you know jQuery selectors, you already know Cheerio.

These tools let you pinpoint elements using CSS selectors—patterns that match elements based on their tag, ID, or class. They act as a GPS for finding information inside an HTML document.

Understanding CSS Selectors

To target data effectively, you only need to know a few basic selectors.

- Tag Name: Selects all elements of a certain type (e.g., h2, p, a).

- Class: Selects elements with a specific class attribute, like .product-title.

- ID: Selects a single, unique element with a specific ID, like #main-content.
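In Python, BeautifulSoup accepts the same selector syntax through its select() and select_one() methods. The sketch below applies each selector type to a small, made-up HTML fragment (the class and ID names are illustrative, and it assumes the beautifulsoup4 package is installed):

```python
from bs4 import BeautifulSoup

# A small, made-up HTML fragment used only to illustrate the selector types
html = """
<div id="main-content">
  <h2 class="product-title">Desk Lamp</h2>
  <p>Adjustable LED lamp.</p>
  <h2 class="product-title">Office Chair</h2>
  <a href="/chair">Details</a>
</div>
<h2>Related items</h2>
"""

soup = BeautifulSoup(html, "html.parser")

# Tag name: every <h2>, whatever its class
headings = [h.get_text(strip=True) for h in soup.select("h2")]

# Class: only elements with class="product-title"
titles = [t.get_text(strip=True) for t in soup.select(".product-title")]

# ID: select_one returns the first match (or None if nothing matches)
main = soup.select_one("#main-content")

print(headings)   # ['Desk Lamp', 'Office Chair', 'Related items']
print(titles)     # ['Desk Lamp', 'Office Chair']
print(main.name)  # div
```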

This Node.js snippet uses axios and cheerio to fetch our example page and extract the text from every quote.

```javascript
const axios = require('axios');
const cheerio = require('cheerio');

const url = "https://quotes.toscrape.com";

axios.get(url).then(response => {
  const html = response.data;
  const $ = cheerio.load(html);

  // Each quote text is inside a <span> with class="text"
  $('.text').each((index, element) => {
    console.log($(element).text());
  });
}).catch(console.error);
```

The .text selector tells Cheerio to find every element with the class "text" and extract its content. Mastering these simple selectors is the key to unlocking data from most static webpages.

Tackling JavaScript-Driven Websites

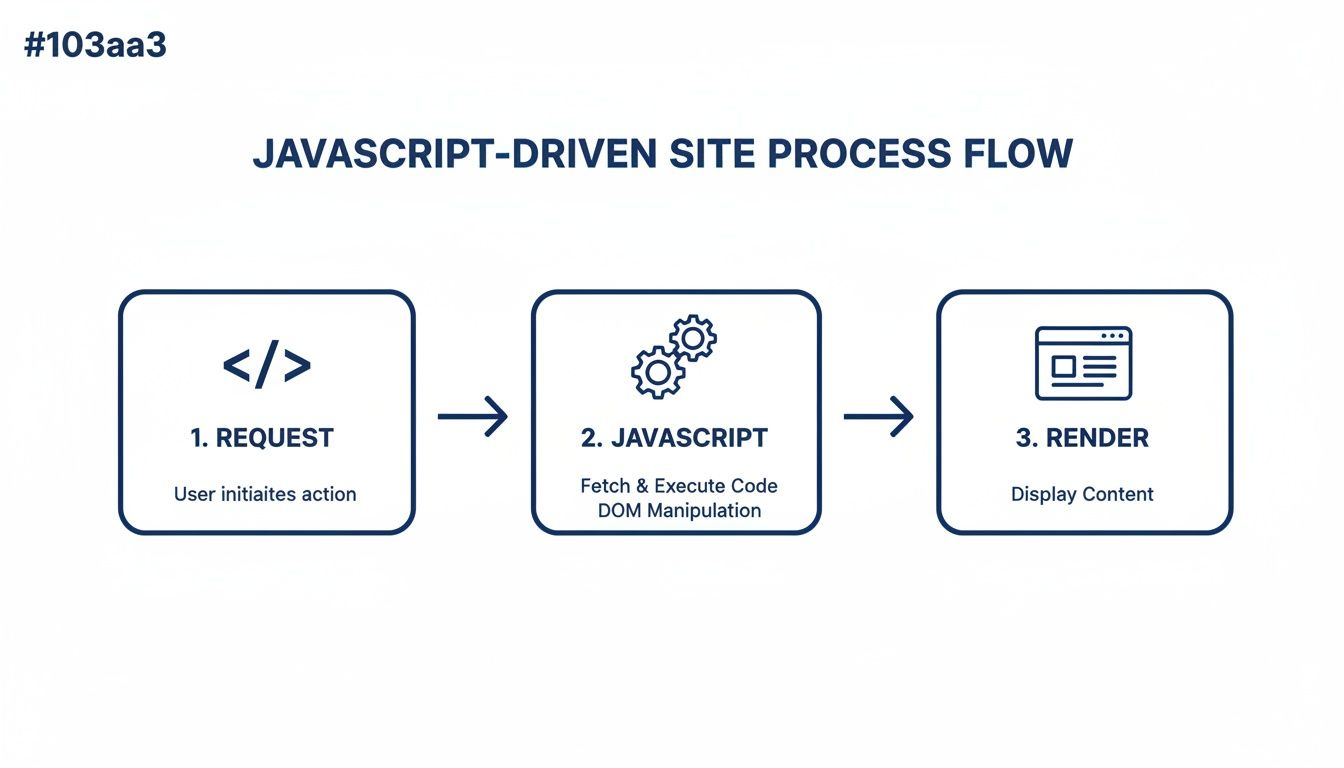

If you've ever scraped a modern website and found that the data you see in your browser is missing from the HTML your script receives, you've hit the JavaScript wall. It's a major hurdle when learning how to extract data from websites today. Many sites are single-page applications (SPAs) built with frameworks like React or Vue, which load content dynamically after the initial page load.

A simple HTTP request only grabs the initial, often barebones, HTML shell. To scrape these sites, you need to act more like a real user's browser.

Enter the Headless Browser

The solution is a headless browser—a real web browser like Chrome running invisibly in the background. You control it programmatically to navigate URLs, wait for elements, click buttons, and scroll.

By letting a real browser engine render the page and execute all its JavaScript, you can access the complete, final HTML.

Two powerful tools for this are:

- Puppeteer: A Node.js library from Google to control Chrome or Chromium.

- Playwright: A Microsoft-backed alternative supporting Chromium, Firefox, and WebKit.

Both tools let you launch a browser, go to a page, and wait for content to appear before scraping.

A Practical Node.js Example

This Puppeteer script navigates to a JavaScript-heavy page, waits for a specific CSS selector to become visible, and then grabs the fully rendered HTML.

```javascript
const puppeteer = require('puppeteer');

async function getRenderedHTML(url) {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  try {
    await page.goto(url, { waitUntil: 'networkidle0' });
    // '.dynamic-content-class' is a placeholder; use a selector from your target page
    await page.waitForSelector('.dynamic-content-class', { visible: true, timeout: 10000 });
    const html = await page.content();
    console.log('Successfully retrieved rendered HTML.');
    // Now you can parse this 'html' with Cheerio
    return html;
  } catch (error) {
    console.error('Error fetching the rendered page:', error);
  } finally {
    await browser.close();
  }
}

getRenderedHTML('https://example.com/dynamic-page');
```

The waitUntil: 'networkidle0' option tells Puppeteer to consider navigation finished only once there have been no network connections for at least 500 ms, a good sign the page has finished loading. Then waitForSelector with visible: true ensures the target element is present and visible before proceeding.

Caption: The initial HTML (left) is often just a placeholder, while the rendered HTML (right) contains the actual data after JavaScript execution. Source: CrawlKit

The rise of such complex sites is one reason AI-driven web scraping is a growing market. While powerful, headless browsers are memory- and CPU-intensive, and many sites can detect and block them. If you need more than rendered HTML, you can also capture automated website screenshots as part of your workflow.

Overcoming Anti-Bot Measures and Scaling Your Scrapers

Moving from scraping a few pages to thousands introduces a new challenge: sophisticated anti-bot systems. Websites deploy defenses like IP rate limiting, CAPTCHAs, and browser fingerprinting to block automated traffic.

Navigating Common Roadblocks

To bypass these defenses, your scraper must behave more like a human. This requires a multi-pronged strategy.

One effective tactic is rotating your User-Agent string. Sending the same default library header with every request is a dead giveaway. Instead, cycle through a list of common, real-world browser headers.
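A minimal Python sketch of User-Agent rotation, assuming you pass the resulting headers to requests.get(). The three User-Agent strings are illustrative examples of real browser formats, not a curated list; keep your own list current, since outdated browser versions can look suspicious.

```python
import itertools

# Illustrative desktop browser User-Agent strings; maintain your own up-to-date list
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]

# itertools.cycle yields the list items in order, repeating forever
_ua_cycle = itertools.cycle(USER_AGENTS)


def next_headers():
    """Return request headers that use the next User-Agent in the rotation."""
    return {
        "User-Agent": next(_ua_cycle),
        "Accept-Language": "en-US,en;q=0.9",
    }

# Usage with requests (not executed here):
#   requests.get(url, headers=next_headers(), timeout=10)
```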

Even with a perfect User-Agent, thousands of requests from a single IP address will get you blocked. This is where proxies are crucial. By routing traffic through a pool of different IP addresses, you distribute the load and avoid rate limits.

- Residential Proxies: These use IP addresses from real internet service providers, making your requests look like genuine user traffic and significantly reducing the chance of being blocked.

- Datacenter Proxies: Faster and cheaper, but easier for websites to detect since their IP ranges are often known and blacklisted.
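Rotation logic can also retire proxies that start getting blocked. The class below is a hypothetical sketch (ProxyPool is not a library API): it picks a random healthy proxy and returns it in the proxies= mapping format the requests library accepts. The proxy URLs are placeholders for whatever your provider issues.

```python
import random


class ProxyPool:
    """Hand out proxies at random, skipping ones that have been marked bad."""

    def __init__(self, proxies):
        self._healthy = set(proxies)

    def get(self):
        if not self._healthy:
            raise RuntimeError("No healthy proxies left in the pool")
        proxy = random.choice(sorted(self._healthy))
        # requests chooses the entry by the target URL's scheme
        return {"http": proxy, "https": proxy}

    def mark_bad(self, proxy_url):
        """Drop a proxy, e.g. after repeated 403 or 429 responses."""
        self._healthy.discard(proxy_url)

# Usage with requests (not executed here):
#   pool = ProxyPool(["http://user:pass@proxy1.example.com:8000", ...])
#   requests.get(url, proxies=pool.get(), timeout=10)
```

Calling pool.mark_bad(url) when a proxy keeps getting blocked means the next get() will skip it.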

Caption: JavaScript execution is a critical step for scraping modern websites, turning a basic HTML shell into a data-rich page. Source: CrawlKit

The Pain of Scaling Manually

The DIY approach starts to break down at scale. Managing a large pool of high-quality proxies, building robust retry logic, and maintaining server infrastructure 24/7 is a massive operational burden. It's a full-time job that pulls you away from your actual goal: getting the data.
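Retry logic is a good example of what you end up maintaining yourself. This sketch implements exponential backoff with "full jitter" (a random delay between zero and an exponentially growing cap) around any fetch callable. The retryable status codes and delay values are assumptions to tune per site, and a production version would also catch connection errors and honor Retry-After headers.

```python
import random
import time

# Status codes assumed to be worth retrying (rate limiting and server errors)
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def backoff_delay(attempt, base=1.0, cap=30.0):
    """Full-jitter backoff: random delay in [0, min(cap, base * 2**attempt)]."""
    return random.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_retries(fetch, url, max_attempts=5, sleep=time.sleep):
    """Call fetch(url) until the status is not retryable or attempts run out.

    `fetch` returns any object with a `status_code` attribute, e.g. a
    wrapper around requests.get. max_attempts must be at least 1.
    """
    for attempt in range(max_attempts):
        response = fetch(url)
        if response.status_code not in RETRYABLE_STATUS:
            return response
        if attempt < max_attempts - 1:
            sleep(backoff_delay(attempt))
    # Every attempt was retryable: hand back the last response
    return response
```

For example, fetch_with_retries(lambda u: requests.get(u, timeout=10), url) retries 429 and 5xx responses and returns the final response if every attempt fails.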

Abstracting Complexity with a Managed API

This operational headache is exactly the problem a developer-first web data platform solves. Instead of building and maintaining your own scraping infrastructure, you can offload the entire process to a single API call.

A service like CrawlKit abstracts away anti-bot and scaling challenges. With its API-first approach, you get intelligent proxy rotation, automated CAPTCHA solving, and sophisticated anti-bot bypass techniques built-in. You don’t have to think about infrastructure—just ask for the data and get clean JSON back. Understanding the benefits of a managed API makes it clear how much time this saves.

DIY Scraping vs. Managed API (CrawlKit)

| Feature | DIY Approach | CrawlKit API |
|---|---|---|
| Proxy Management | Source, vet, and manage a pool of residential proxies. Build rotation logic. | Built-in premium residential proxy network with automatic rotation. |
| Anti-Bot Bypass | Constantly reverse-engineer and update scripts for Cloudflare, etc. | Handles major anti-bot systems automatically. |
| JavaScript Rendering | Set up and maintain a fleet of headless browsers (e.g., Playwright/Puppeteer). | Fully managed browser rendering with a simple API parameter. |
| CAPTCHA Solving | Integrate and pay for a third-party solving service. | Automated CAPTCHA solving is included. |
| Retry Logic | Implement custom exponential backoff and handle various failure codes. | Automatic, intelligent retries are built into the platform. |
| Infrastructure | Provision, monitor, and scale servers to handle the workload 24/7. | Zero infrastructure to manage. Start free. |

The table shows it clearly: the DIY path forces you to become an infrastructure expert, while a managed API lets you stay focused on using the data.

Structuring, Storing, and Using Your Data

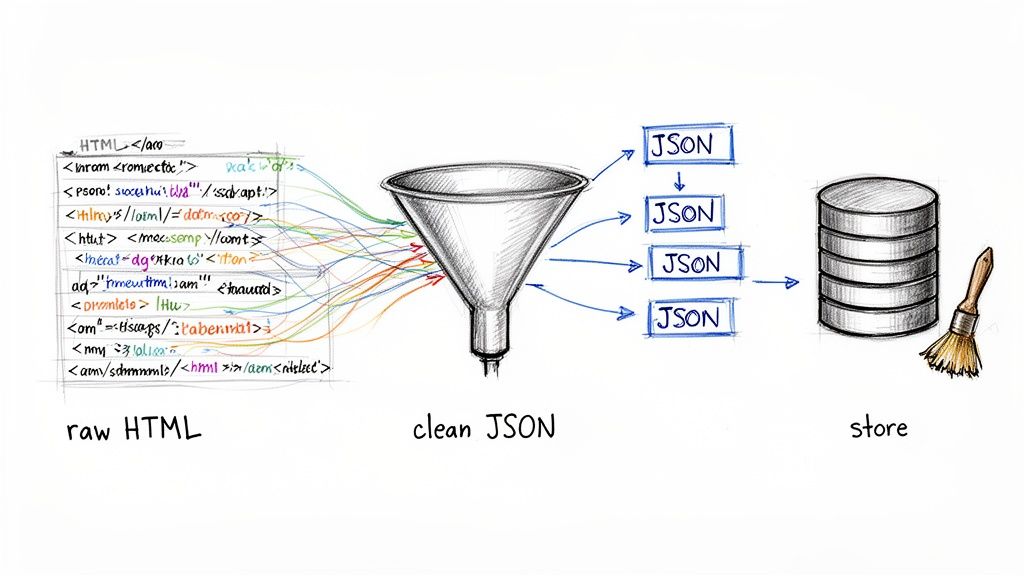

You've successfully grabbed the raw HTML. Now the real magic happens: turning that unstructured chaos into clean, predictable, and useful data.

The goal is almost always JSON (JavaScript Object Notation). It’s lightweight, human-readable, and easy for any programming language to work with, whether you're feeding an API, a database, or a front-end application.

Caption: The data extraction process involves parsing raw HTML, structuring it into a clean format like JSON, and storing it for analysis. Source: CrawlKit

From HTML to Structured JSON

The idea is to loop through the HTML elements you’ve parsed (like a list of products) and map each piece of information to a key-value structure. For example, you'd grab the text from an <h2> with the product-name class and put it into a "name" key in your JSON object.

Here's a quick Python example showing how to structure data from product listings.

```python
import json
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a fetched product listing page;
# the class names (product, product-name, price) are assumptions.
html = """
<div class="product">
  <h2 class="product-name">Desk Lamp</h2>
  <span class="price">$24.99</span>
  <a href="/products/desk-lamp">View</a>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
product_elements = soup.select("div.product")

products_data = []
for product in product_elements:
    item = {
        "name": product.find("h2", class_="product-name").text.strip(),
        "price": product.find("span", class_="price").text.strip(),
        "url": product.find("a")["href"],
    }
    products_data.append(item)

# Convert the list of dictionaries to a JSON string
json_output = json.dumps(products_data, indent=2)
print(json_output)
```

This simple loop builds a clean, predictable list of objects. For more advanced projects, you can explore techniques for enriching datasets with scraped web data.

Choosing the Right Storage Solution

Once your data is structured, it needs a home. The right choice depends on your project's scale and use case.

- CSV Files: Perfect for small datasets, one-off analyses, or importing into spreadsheets.

- JSON Files: Great for storing data with a nested or hierarchical structure.

- Databases (PostgreSQL, MySQL): The right fit for large-scale or ongoing data collection, offering powerful querying, indexing, and analysis capabilities.
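To make the trade-off concrete, here is a sketch that stores the same records both as CSV and in a database. It uses Python's bundled sqlite3 module as a lightweight stand-in for PostgreSQL or MySQL, an in-memory database, and made-up product rows. The upsert keyed on URL assumes each product has a stable URL, and the ON CONFLICT syntax needs SQLite 3.24 or newer.

```python
import csv
import io
import sqlite3

# Made-up records in the same shape as the product JSON built earlier
products = [
    {"name": "Desk Lamp", "price": "$24.99", "url": "/lamp"},
    {"name": "Office Chair", "price": "$149.00", "url": "/chair"},
]

# CSV: written to an in-memory buffer here;
# use open("products.csv", "w", newline="") for a real file
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["name", "price", "url"])
writer.writeheader()
writer.writerows(products)
csv_text = buffer.getvalue()

# Database: SQLite stands in for PostgreSQL/MySQL in this sketch
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE IF NOT EXISTS products (url TEXT PRIMARY KEY, name TEXT, price TEXT)"
)
# Upsert on URL so re-running the scraper updates rows instead of duplicating them
conn.executemany(
    "INSERT INTO products (url, name, price) VALUES (:url, :name, :price) "
    "ON CONFLICT(url) DO UPDATE SET name = excluded.name, price = excluded.price",
    products,
)
conn.commit()

rows = conn.execute("SELECT name, price FROM products ORDER BY name").fetchall()
print(rows)  # [('Desk Lamp', '$24.99'), ('Office Chair', '$149.00')]
```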

After pulling data, a good guide on marketing and data analytics can show you how to turn that raw information into business strategy.

Ethical and Legal Considerations

Web scraping operates in a legal gray area, but you can stay on the right side by following a few ethical rules. This isn't just about avoiding blocks; it’s about being a good citizen of the web.

- Respect robots.txt: This file contains a website's explicit instructions for bots. Ignoring it is a fast way to get your IP address blocked.

- Check Terms of Service: Skim a site's ToS for rules on automated access.

- Be Polite: Don't hammer servers with rapid-fire requests. Add a polite delay between requests to mimic human browsing behavior and minimize your impact.
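Python's standard library covers the first and last of these rules. This sketch uses urllib.robotparser to check whether a URL is allowed and to read any Crawl-delay directive, falling back to a random pause otherwise. The bot name and robots.txt content are made up; against a real site you would call set_url() with the site's robots.txt URL and then read() instead of parsing a string.

```python
import random
import time
import urllib.robotparser

USER_AGENT = "my-scraper/1.0"  # hypothetical bot name


def build_robot_parser(robots_txt):
    """Parse robots.txt content supplied as a string."""
    rp = urllib.robotparser.RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


def polite_pause(rp, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
    """Sleep for the site's Crawl-delay if it sets one, else a random delay."""
    crawl_delay = rp.crawl_delay(USER_AGENT)
    delay = crawl_delay if crawl_delay is not None else random.uniform(min_delay, max_delay)
    sleep(delay)
    return delay


# Made-up robots.txt content for illustration
sample = """
User-agent: *
Disallow: /admin/
Crawl-delay: 2
"""
rp = build_robot_parser(sample)
print(rp.can_fetch(USER_AGENT, "https://example.com/products"))     # True
print(rp.can_fetch(USER_AGENT, "https://example.com/admin/users"))  # False
```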

Frequently Asked Questions

As you dive into extracting data from websites, you're bound to have questions. This FAQ covers the most common issues developers face.

Is It Legal to Extract Data from Websites?

This is the big one, and the answer is nuanced. Generally, scraping publicly available data is not illegal, but you must be careful. Always check a website's robots.txt file and read its Terms of Service. Avoid scraping copyrighted content, private information, or any personal data. Legality often depends on what you scrape and how you use it. For commercial projects, consulting a legal professional is always a good idea.

How Do I Scrape Data Behind a Login?

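Pulling data from pages requiring a login is more complex. For sites that handle login with JavaScript, you'll need an automated browser tool like Puppeteer or Playwright, and your script must mimic a user logging in. Simple HTML login forms can sometimes be handled without a browser by submitting the form through an HTTP session that stores cookies.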

The basic flow is:

- Navigate to the login page.

- Find the form fields and enter the username and password.

- Click the submit button.

- Manage session cookies to stay logged in as you navigate to other pages.

This process is significantly more complex than scraping public pages and requires secure credential handling.
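For the simpler case of a plain HTML login form, this flow can be sketched in Python without a browser. This is a minimal sketch, assuming a requests.Session-compatible session object, a form that posts back to the login URL, and fields named username and password; all of these vary by site, so inspect the form first. Hidden inputs such as CSRF tokens are copied into the submission, and the password comes from a hypothetical environment variable rather than being hard-coded.

```python
from html.parser import HTMLParser


class HiddenInputCollector(HTMLParser):
    """Collect <input type="hidden"> name/value pairs (e.g. CSRF tokens)."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "input" and attrs.get("type") == "hidden" and attrs.get("name"):
            self.fields[attrs["name"]] = attrs.get("value") or ""


def log_in(session, login_url, username, password):
    """Submit a form-based login; the session keeps cookies for later requests.

    The "username"/"password" field names are assumptions; check the form's HTML.
    """
    page = session.get(login_url, timeout=10)
    collector = HiddenInputCollector()
    collector.feed(page.text)
    payload = {**collector.fields, "username": username, "password": password}
    response = session.post(login_url, data=payload, timeout=10)
    response.raise_for_status()
    return session

# Usage (not executed here; SCRAPER_PASSWORD is a hypothetical variable):
#   import os, requests
#   session = log_in(requests.Session(), "https://example.com/login",
#                    "user", os.environ["SCRAPER_PASSWORD"])
#   page = session.get("https://example.com/account")
```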

Caption: Scraping behind a login requires your script to manage authentication and session cookies to access protected content. Source: CrawlKit

What's the Best Programming Language for This?

Python is the crowd favorite due to its extensive ecosystem, with libraries like Requests, BeautifulSoup, and Scrapy that simplify the job.

However, Node.js is also a fantastic choice, especially for JavaScript-heavy sites, thanks to Puppeteer and Playwright for browser automation and Cheerio for fast HTML parsing. The best language is the one you and your team are most productive with.

How Can I Avoid Getting Blocked?

Getting blocked is common, but you can minimize it by making your scraper act more like a human.

- Rotate Your IP Address: Use a pool of high-quality residential proxies to make requests appear as if they are coming from different real users.

- Use Real User-Agent Strings: Cycle through a list of common browser headers to avoid detection.

- Slow Down: Add random delays between requests to mimic human browsing speed.

- Limit Concurrent Requests: Avoid opening hundreds of connections at once from a single IP.

This is exactly the kind of tedious work that a managed service like CrawlKit handles for you automatically.

What's the Difference Between Scraping and Crawling?

The distinction is simple but often confused.

- A crawler (or spider) discovers. It follows links to map out a website's structure, like Googlebot indexing the web.

- A scraper extracts. It goes to a specific page to pull out targeted information, like product prices or reviews.

You often use them together: first, crawl to find the pages, then scrape to get the data.
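A minimal crawler sketch in standard-library Python: it walks a single domain breadth-first, collects same-domain links with html.parser, and returns the discovered URLs for a scraper to process. The fetch_html callable is a hypothetical hook you would back with requests or a headless browser; the demo uses an in-memory dictionary of made-up pages instead of real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Gather href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(start_url, fetch_html, max_pages=50):
    """Breadth-first crawl of one domain; returns URLs in visit order."""
    domain = urlparse(start_url).netloc
    seen, queue, visited = {start_url}, deque([start_url]), []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        collector = LinkCollector()
        collector.feed(fetch_html(url))
        for href in collector.links:
            absolute = urljoin(url, href).split("#")[0]  # drop #fragments
            if urlparse(absolute).netloc == domain and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Demo with an in-memory "site" instead of real HTTP requests
site = {
    "https://shop.example/": '<a href="/a">A</a> <a href="https://other.example/">x</a>',
    "https://shop.example/a": '<a href="/b#top">B</a> <a href="/">Home</a>',
    "https://shop.example/b": "<p>leaf</p>",
}
print(crawl("https://shop.example/", lambda u: site.get(u, "")))
# ['https://shop.example/', 'https://shop.example/a', 'https://shop.example/b']
```

Each returned URL can then be handed to a scraper, such as the product-parsing loop shown earlier.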

How Do You Handle CAPTCHAs?

CAPTCHAs are designed to stop bots and are a major headache for scrapers. You can integrate a third-party CAPTCHA-solving service, but this adds complexity and cost. A better approach is to use a data extraction API with built-in, sophisticated CAPTCHA-solving capabilities.

Can I Scrape Data from an API Instead of HTML?

Yes, and you absolutely should if you can! Many modern websites use APIs to load data into their front end. Open your browser's developer tools, go to the "Network" tab, and watch the requests as you browse. You can often spot the API calls the site makes to fetch data. Hitting that API directly is far more efficient and reliable than parsing fragile HTML, giving you clean, structured JSON from the source.
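Once you've found such an endpoint, paginating through it is usually a short loop. This sketch assumes a page-numbered API: the ?page=N parameter and the quotes and has_next keys appear to match the quotes.toscrape.com demo site's JSON API at /api/quotes (verify in the Network tab), and other sites will use different names. The get parameter lets you swap in your own HTTP function.

```python
import json
import urllib.request


def fetch_json(url):
    """GET a URL and decode its JSON body using only the standard library."""
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def fetch_all_quotes(base_url="https://quotes.toscrape.com/api/quotes", get=fetch_json):
    """Request page 1, 2, ... until the API reports there is no next page."""
    results, page = [], 1
    while True:
        data = get(f"{base_url}?page={page}")
        results.extend(data["quotes"])
        if not data.get("has_next"):
            return results
        page += 1

# Usage (makes real requests; not executed here):
#   quotes = fetch_all_quotes()
#   print(len(quotes), quotes[0]["text"])
```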

Next Steps

You now have a solid roadmap for how to extract data from websites, from writing scripts from scratch to using a powerful API. The journey comes down to a choice: the DIY path or the managed path.

Building everything in-house is a great learning experience but comes with the endless headache of maintenance. Websites change, anti-bot systems evolve, and your scripts will break.

The alternative is a developer-first, API-first web data platform like CrawlKit. It lets you skip the infrastructure nightmare and get straight to the data, abstracting away the complexities of proxies, headless browsers, and bot detection. For most developers, this is the smarter, more scalable choice.

Ready to stop building infrastructure and start using data?

Explore Further

- Try the CrawlKit API in Our Interactive Playground: See how fast you can get structured data from any URL with no code required.

- Explore Our Complete Developer Documentation: Dive into detailed guides, code examples, and advanced features like screenshots and schema-based extraction.

- Learn How to Scrape LinkedIn Profiles at Scale: See a real-world example of how to tackle a complex, well-defended target using a managed API.