Meta Title: 12 Best Web Scraping API Tools for Developers (2024)

Meta Description: Find the best web scraping API for your needs. We compare 12 top tools on features, developer experience, anti-bot handling, and pricing.

Finding the best web scraping API for your project can be a complex task, with dozens of providers claiming to solve the same problem. This guide cuts through the noise with a direct, developer-focused comparison of the top web scraping APIs available today. We'll dive deep into each solution, moving beyond marketing claims to deliver the practical insights you need.

Table of Contents

- 1. CrawlKit

- 2. Zyte

- 3. Bright Data

- 4. Oxylabs

- 5. Apify

- 6. ScraperAPI

- 7. ScrapingBee

- 8. ZenRows

- 9. WebScrapingAPI

- 10. SerpAPI

- 11. Diffbot

- 12. Rapid (RapidAPI)

- Top 12 Web Scraping APIs Comparison

- Frequently Asked Questions (FAQ)

- Next steps

1. CrawlKit

CrawlKit is a developer-first, API-first web data platform designed to abstract away the entire scraping infrastructure. It converts any webpage into structured JSON through a single API call, handling all proxies, browser rendering, and anti-bot measures automatically. This makes it an exceptionally strong contender for engineering teams who need reliable, production-grade data without managing headless browsers or proxy pools.

The platform is engineered for modern data pipelines. Instead of just returning raw HTML, CrawlKit delivers clean markdown, structured JSON, screenshots, and rich metadata. Its specialized endpoints can scrape, extract data to a custom schema, search, and even handle LinkedIn company/person profiles and app store reviews.

Caption: The CrawlKit API Playground allows for interactive testing of scraping and extraction endpoints. Source: CrawlKit

Key Features & Use Cases

CrawlKit’s feature set is tailored for developers who prioritize efficiency and reliability. Its automatic handling of JavaScript-heavy Single-Page Applications (SPAs) ensures you get complete, fully-rendered content, a common pain point with simpler HTTP-based scrapers.

Standout Capabilities:

- No Scraping Infrastructure: Proxies and anti-bot measures are completely abstracted away, requiring zero configuration.

- Intelligent Extraction: The /extract endpoint uses AI to parse content into a custom JSON schema you define, turning unstructured pages into predictable data.

- Comprehensive Data Endpoints: Offers dedicated endpoints for general crawling, structured extraction, search engine results, LinkedIn profiles/companies, and full-page screenshots.

- Developer-First Experience: Minimal integration effort with a pure HTTP API, clear documentation, and a generous free tier to start.

```bash
# Example cURL request to extract data with a custom JSON schema
# (-d sends a POST; the Content-Type header marks the body as JSON)
curl "https://api.crawlkit.sh/v1/extract" \
  -H "Authorization: Bearer YOUR_API_TOKEN" \
  -H "Content-Type: application/json" \
  -d '{
    "url": "https://example.com/product/123",
    "schema": {
      "title": "h1",
      "price": ".price",
      "description": ".description"
    }
  }'
```

This makes it one of the best web scraping API options for use cases like competitive intelligence, price monitoring, and automated lead generation.

Pricing Model

CrawlKit uses a transparent, credit-based, pay-as-you-go model. New users can start free with 1,000 credits to test the API. Credits are consumed per request, with costs clearly defined (e.g., /crawl ≈ 1 credit, /extract ≈ 5 credits), and they never expire. This model provides flexibility for projects of all sizes. For more details, you can read the docs or try the playground.
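As a rough worked example, the per-endpoint figures quoted above (/crawl ≈ 1 credit, /extract ≈ 5 credits) can be turned into a monthly estimate. This sketch hard-codes only those two approximate rates; costs for other endpoints or options are not covered and should be checked against CrawlKit's docs.

```python
# Approximate credits per request, taken from the figures quoted above.
# Treat these as estimates; check CrawlKit's docs for current rates.
CREDITS_PER_REQUEST = {
    "crawl": 1,
    "extract": 5,
}


def estimate_credits(monthly_requests: dict[str, int]) -> int:
    """Return the total credits for a mix of endpoint calls."""
    total = 0
    for endpoint, count in monthly_requests.items():
        if endpoint not in CREDITS_PER_REQUEST:
            raise ValueError(f"no credit estimate for endpoint: {endpoint}")
        total += CREDITS_PER_REQUEST[endpoint] * count
    return total


# 10,000 crawls plus 2,000 extractions: 10,000 * 1 + 2,000 * 5 credits
print(estimate_credits({"crawl": 10_000, "extract": 2_000}))
```

At these rates, the 1,000 free credits cover roughly 1,000 /crawl calls or 200 /extract calls.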

Why It Stands Out

CrawlKit is a powerful tool for engineers who value a clean, API-first approach. By abstracting away the tedious mechanics of web scraping, it allows developers to focus on using the data, not acquiring it.

Pros:

- Minimal Integration Effort: Pure HTTP API and SDKs mean you can get started in minutes.

- High-Quality, Consistent Data: Built-in rendering and anti-bot systems deliver complete and reliable results.

- AI-Powered Extraction: Structured data extraction simplifies post-processing workflows.

- Transparent Pricing: A clear, credit-based model with a free tier makes it easy to get started.

Cons:

- Newer Player: As a more recent entrant, it has a smaller user community compared to decade-old incumbents.

- Focused Feature Set: Primarily focused on scraping and extraction, whereas some platforms are broader automation suites.

2. Zyte — Zyte API

Zyte API is a developer-focused scraping tool that automates the complex parts of web data extraction. It functions as a single smart endpoint that intelligently handles proxy rotation, headless browser rendering, and ban evasion, making it a strong contender for the title of best web scraping API for teams needing a scalable, hands-off solution.

Its core strength lies in its automatic anti-bot handling. Instead of requiring developers to configure proxy types or enable JavaScript rendering, Zyte API analyzes the target website and applies the necessary unblocking techniques on its own. This approach can significantly reduce both development time and the overall cost of a project.

Caption: Zyte's API product page highlights its smart browser and anti-ban features. Source: Zyte

Key Features & Use Case

The "pay for success" model is Zyte API's most significant feature. You are only billed for successful requests (HTTP 200s), which aligns costs directly with results. This is particularly beneficial for large-scale projects where managing failure rates across thousands of requests can be a major budgetary challenge.
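To see why success-based billing matters, it helps to compare the effective cost per usable page under the two billing schemes. The price and success rate below are hypothetical placeholders, not Zyte's actual rates, and the sketch assumes failed attempts are retried until they succeed, so each success takes 1 / success_rate attempts on average.

```python
def cost_per_successful_page(price_per_request: float,
                             success_rate: float,
                             pay_per_success: bool) -> float:
    """Effective cost of one successful page.

    With per-attempt billing, every failed request is still paid for, so the
    cost of each success is inflated by 1 / success_rate. With pay-per-success
    billing, failures are free and the cost stays at the quoted price.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    if pay_per_success:
        return price_per_request
    return price_per_request / success_rate


# Hypothetical: $0.001 per request, 80% of attempts succeed on a hard target.
per_attempt = cost_per_successful_page(0.001, 0.8, pay_per_success=False)
per_success = cost_per_successful_page(0.001, 0.8, pay_per_success=True)
print(f"per-attempt billing: ${per_attempt:.5f} per page")
print(f"pay-per-success:     ${per_success:.5f} per page")
```

With these placeholder numbers, per-attempt billing costs 25% more per usable page, and the gap widens as the success rate drops. The comparison ignores that success-based providers may price each request higher to begin with.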

Best for:

Large-Scale Data Teams: Ideal for organizations that need to scrape millions of pages without managing a complex infrastructure of proxies and headless browsers.

E-commerce & Price Monitoring: Effectively scrapes product data from heavily protected e-commerce sites.

Integrating with Existing Stacks: Teams already using tools like Scrapy will find a familiar ecosystem, as Zyte is the company behind the popular open-source framework.

Pros: Pay only for successful responses; automated unblocking and rendering reduces operational overhead.

Cons: Per-site pricing tiers can make budget forecasting complex; the free trial credit is limited.

Website: https://www.zyte.com/zyte-api/

3. Bright Data — Web Scraper API, Web Unlocker, Scraping Browser

Bright Data offers an enterprise-grade data collection platform with a suite of tools designed for large-scale, mission-critical operations. Its strength lies in providing multiple, specialized API products like the Web Scraper API and Web Unlocker, catering to organizations that require service level agreements (SLAs), managed services, and a high degree of reliability for unblocking even the most challenging websites. This makes it a top-tier choice for businesses where data accuracy and uptime are paramount.

The platform's core distinction is its breadth of offerings. Instead of a one-size-fits-all API, users can choose the best tool for the job, whether it's the records-based Web Scraper API for structured data or the more powerful Web Unlocker for handling dynamic, heavily protected targets.

Caption: Bright Data offers a wide range of data collection products for enterprise use cases. Source: Bright Data

Key Features & Use Case

A key advantage of Bright Data is its built-in, automated unblocking technology, which includes CAPTCHA solving, browser fingerprinting, and content verification. This comprehensive system ensures high success rates, while flexible pricing models (pay-per-result or pay-per-GB) allow organizations to align spending with their data acquisition strategy.
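Choosing between pay-per-result and pay-per-GB largely comes down to average page weight. The sketch below finds the break-even page size; both prices are hypothetical placeholders, not Bright Data's list prices, and it treats 1 GB as 1,000 MB for simplicity.

```python
def cost_per_result_billing(pages: int, price_per_1k: float) -> float:
    """Cost when each successful result is billed (price quoted per 1,000)."""
    return pages / 1000 * price_per_1k


def cost_per_gb_billing(pages: int, avg_page_mb: float, price_per_gb: float) -> float:
    """Cost when traffic is billed by volume (1 GB = 1,000 MB here for simplicity)."""
    total_gb = pages * avg_page_mb / 1000
    return total_gb * price_per_gb


def break_even_page_mb(price_per_1k: float, price_per_gb: float) -> float:
    """Average page size (MB) at which both billing models cost the same."""
    # pages / 1000 * p1k == pages * mb / 1000 * pgb  =>  mb == p1k / pgb
    return price_per_1k / price_per_gb


# Hypothetical prices: $1.50 per 1,000 results vs $8.00 per GB.
print(break_even_page_mb(1.50, 8.00))  # pages lighter than this favor per-GB billing
print(cost_per_result_billing(100_000, 1.50))
print(cost_per_gb_billing(100_000, 0.5, 8.00))
```

With these placeholder prices the break-even is about 0.19 MB per page, so a crawl averaging 0.5 MB per page is cheaper on per-result billing (150 versus 400 for 100,000 pages).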

Best for:

Enterprise-Level Operations: Ideal for large corporations that need guaranteed uptime, dedicated support, and SLAs for their data collection infrastructure.

Regulated Industries: Suited for finance, legal, and compliance sectors where data integrity and sourcing are critical.

Complex Unblocking Scenarios: The Web Unlocker and Scraping Browser are highly effective for websites with advanced anti-bot measures.

Pros: Broad product lineup with strong enterprise support and SLA options; multiple pricing models to fit different use cases.

Cons: Higher effective cost for smaller-scale users; the extensive product menu can be complex for first-time buyers to navigate.

4. Oxylabs — Web Scraper API family

Oxylabs provides a comprehensive suite of scraper APIs designed for reliability and scale, backed by one of the largest premium proxy networks in the industry. The platform distinguishes itself with a family of specialized APIs for general web scraping, e-commerce, and SERP data, allowing developers to choose the right tool for the job. Its AI-powered unblocking system and flexible pricing make it a powerful choice for teams needing a robust web scraping API.

This approach gives developers granular control over costs and capabilities, as each API is optimized for specific types of target websites. The inclusion of extensive documentation and SDKs helps accelerate the integration process.

Caption: Oxylabs positions its products as a family of specialized scraper APIs. Source: Oxylabs

Key Features & Use Case

A key advantage of Oxylabs is its results-based billing model, where you pay per 1,000 successful results, with pricing tiered by the target website's complexity. This success-based model, combined with an optional JavaScript rendering parameter and automatic CAPTCHA bypassing, ensures you only pay for the data you actually receive.

Best for:

Target-Specific Scraping: Ideal for projects focused on specific domains like e-commerce or search engines, where specialized APIs can provide better performance.

Global Data Collection: Teams that require data from various geographic locations will benefit from Oxylabs’ extensive proxy infrastructure.

Developers Seeking Quick Integration: The availability of SDKs and practical code examples significantly reduces setup time.

Pros: Clear, per-source pricing and scalable plans; mature proxy infrastructure with broad geographic coverage.

Cons: JavaScript-rendered calls are priced higher than standard HTML requests; some advanced features may be reserved for higher-tier plans.

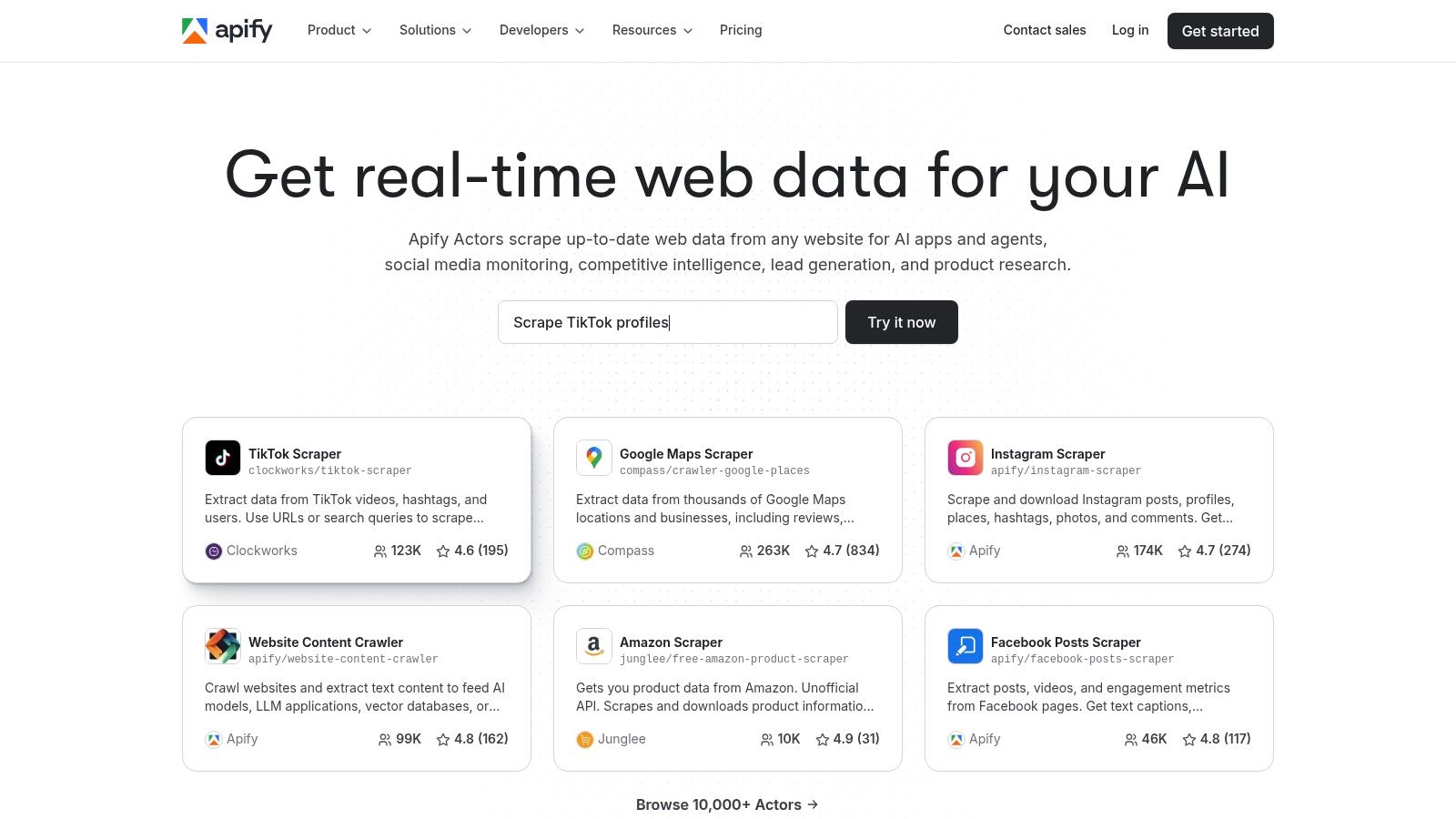

5. Apify — Platform, Actors, Marketplace

Apify is a comprehensive cloud platform that goes beyond a simple API, offering a full ecosystem for web scraping and automation. It combines a powerful API with a vast marketplace of pre-built "Actors" (serverless cloud programs), allowing teams to either build custom solutions or deploy ready-made scrapers for common targets like Google, Instagram, or Amazon.

Its core strength is its flexibility. Developers can write their own scrapers using Apify's SDKs, while non-coders can leverage thousands of public Actors from the Apify Store. This hybrid approach makes it an excellent choice for teams that need a mix of no-code speed and full-code control.

Caption: The Apify platform centers on its marketplace of pre-built scraper "Actors". Source: Apify

Key Features & Use Case

The Apify Store is the platform's most distinctive feature. Instead of building every scraper from scratch, you can use a proven, community-vetted Actor, significantly speeding up project timelines. Each Actor runs on the Apify platform, which handles the underlying infrastructure, including proxy management and scaling.

Best for:

Rapid Prototyping: Quickly find and deploy an existing Actor to gather data for a new project without writing any code.

Teams Needing Both No-Code & Code: Ideal for organizations where marketing teams might use pre-built scrapers while developers build custom data pipelines.

Monetizing Scraping Skills: Developers can publish and monetize their own custom Actors on the Apify Store.

Pros: Fast start by using proven Actors from a large marketplace; rich ecosystem with strong documentation and an active community.

Cons: Total cost can be complex, as it includes platform compute plus potential Actor rental fees; Actor quality varies by publisher.

Website: https://apify.com/

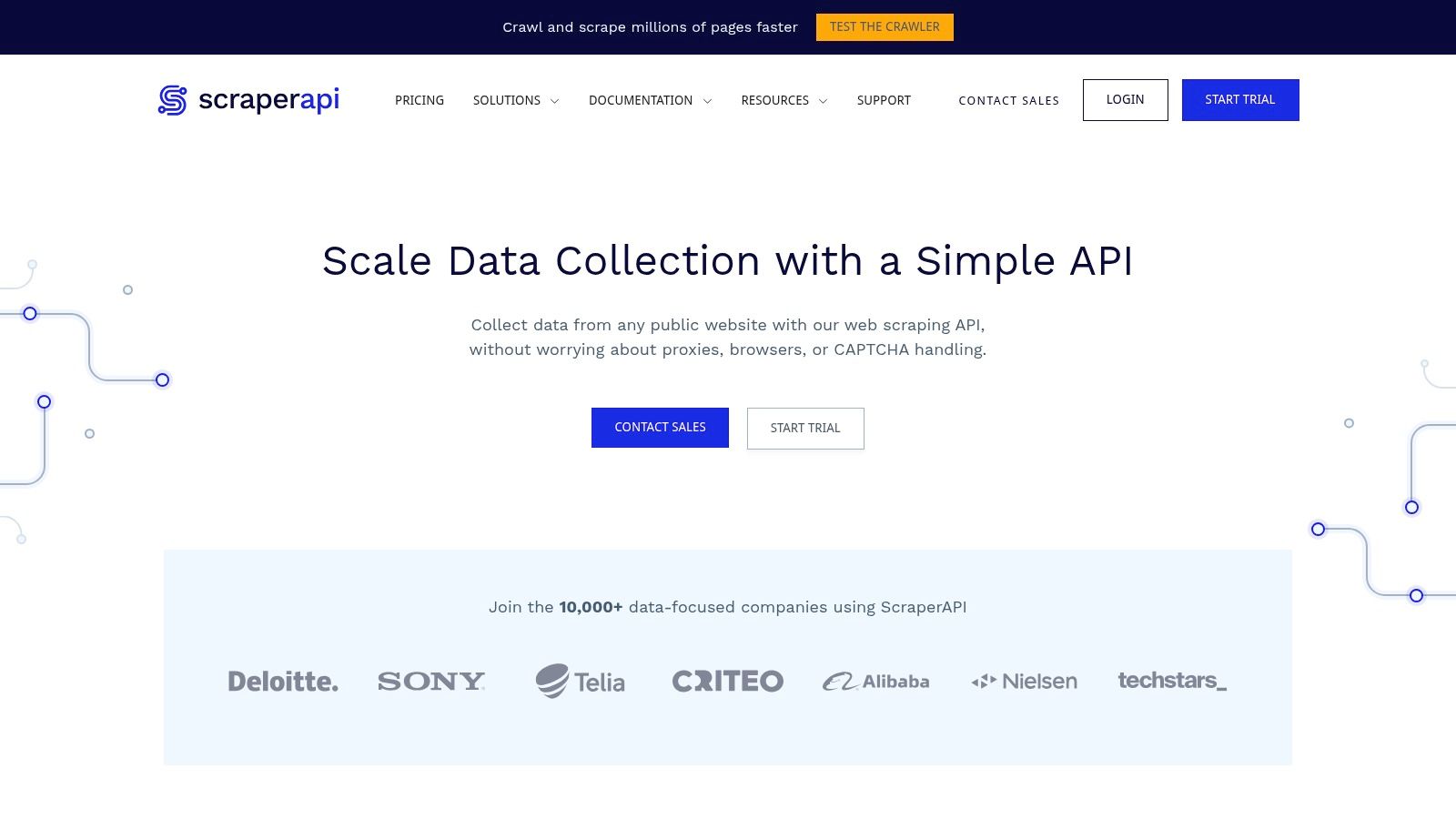

6. ScraperAPI

ScraperAPI offers a general-purpose web scraping solution designed for developers who need a straightforward, drop-in tool to handle proxies, browsers, and CAPTCHAs. It streamlines data extraction by converting a target URL into raw HTML with a single API call, abstracting away the complexities of anti-bot measures. This focus on simplicity and reliability makes it a strong choice for projects that require scale without extensive infrastructure management.

Its core value proposition is its credit-based system, which simplifies pricing and usage tracking. The API automatically rotates a pool of millions of proxies, including residential and mobile IPs on higher-tier plans, and can render JavaScript-heavy pages.

Caption: ScraperAPI provides a simple API for developers to get raw HTML without dealing with blocks. Source: ScraperAPI

Key Features & Use Case

ScraperAPI's most practical feature is its simple credits model combined with a clear analytics dashboard. You know exactly how many successful requests you can make, and the concurrency limits are transparently tied to your plan, which helps in scaling operations predictably.

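```python
# Example Python request using ScraperAPI
import requests

# ScraperAPI takes your API key and the target URL as query parameters
payload = {'api_key': 'YOUR_API_KEY', 'url': 'https://httpbin.org/ip'}
# Proxied and rendered requests can be slow, so set a generous timeout
r = requests.get('https://api.scraperapi.com/', params=payload, timeout=60)
r.raise_for_status()  # fail loudly on HTTP errors instead of printing an error page
print(r.text)
```

Best for: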

Rapid Prototyping: Developers who need to get a scraping project off the ground quickly without configuring proxies or headless browsers.

Mid-Scale Data Extraction: Teams that require a reliable HTML source for e-commerce sites, news portals, or business directories.

Geotargeted Scraping: Useful for tasks like ad verification or scraping localized search results where accessing content from a specific region is critical.

Pros: Straightforward API credits model with a generous trial; competitive mid-market pricing and clear concurrency limits.

Cons: Regional limits on lower tiers (e.g., US/EU only); advanced parsing features may require higher-tier plans.
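Because concurrency limits are tied to the plan, a client should cap how many requests it has in flight. A minimal standard-library sketch follows; `fetch` is a hypothetical stand-in for whatever function calls the API (for example, a wrapper around the `requests.get` call shown earlier), and `plan_concurrency` should be set to your plan's documented limit.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fetch_all(urls: list[str],
              fetch: Callable[[str], str],
              plan_concurrency: int) -> list[str]:
    """Fetch every URL while never exceeding the plan's concurrency limit.

    ThreadPoolExecutor runs at most `max_workers` calls at once, so setting it
    to the plan limit keeps in-flight requests within quota. Results come back
    in the same order as `urls`.
    """
    with ThreadPoolExecutor(max_workers=plan_concurrency) as pool:
        return list(pool.map(fetch, urls))


# Demo with a fake fetcher that records the peak number of concurrent calls.
active = 0
peak = 0
lock = threading.Lock()


def fake_fetch(url: str) -> str:
    global active, peak
    with lock:
        active += 1
        peak = max(peak, active)
    time.sleep(0.01)  # simulate network latency
    with lock:
        active -= 1
    return f"<html>{url}</html>"


pages = fetch_all([f"https://example.com/{i}" for i in range(20)], fake_fetch, 5)
print(len(pages), "pages fetched; peak concurrency:", peak)
```

Using a fixed-size worker pool is the simplest way to respect a hard concurrency cap; a production client would also add retries and back off on rate-limit responses.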

Website: https://www.scraperapi.com/

7. ScrapingBee

ScrapingBee is a developer-friendly API designed to simplify web scraping by handling common obstacles like headless browsers and proxy rotation. Its straightforward approach allows developers to focus on data extraction rather than infrastructure management, making it an excellent choice for teams that need a reliable, easy-to-integrate solution. The API is particularly well-suited for scraping modern, JavaScript-heavy websites without the complexity of managing your own browser instances.

Its core value proposition is simplicity and predictability. With clear documentation and a clean API surface, developers can get started quickly. ScrapingBee offers specialized endpoints, like its Google Search API, and allows for custom data extraction rules.

Caption: ScrapingBee's branding emphasizes its role in handling complex scraping so developers don't have to. Source: ScrapingBee

Key Features & Use Case

One of ScrapingBee's most appreciated features is its transparent, credit-based pricing model. Users purchase monthly credit packs, and different actions, like enabling JavaScript rendering or using premium proxies, consume a set number of credits. This system provides predictability for budgeting, and the free tier with 1,000 free API calls allows for thorough testing.

Best for:

Startups & Small Teams: The clear pricing and easy integration make it ideal for smaller teams who need to get a project running quickly.

General-Purpose Scraping: Its robust handling of JavaScript-rendered pages and rotating proxies makes it a versatile tool for various targets.

SEO & Marketing Data Collection: The dedicated Google Search API is perfect for automating SERP data collection and rank tracking.

Pros: Transparent credit-based pricing with 1,000 free calls; clean API and excellent documentation for fast integration.

Cons: JavaScript rendering consumes more credits per call, which can increase costs; high concurrency levels require more expensive plans.

Website: https://www.scrapingbee.com/

8. ZenRows — Universal Scraper API, Scraping Browser

ZenRows is an all-in-one web scraping toolkit designed to handle the most challenging anti-bot measures, making it a strong candidate for the best web scraping API when dealing with heavily protected sites. Its core offering is a universal API that integrates an AI-powered unblocker, automatic proxy and user-agent rotation, and headless browser functionality to bypass systems like Cloudflare and Akamai.

The platform’s strength lies in its unified approach. Developers can send a request to a single endpoint and let ZenRows determine the best strategy to retrieve the data, whether that requires a simple proxy or full browser rendering with CAPTCHA solving.

Key Features & Use Case

ZenRows features a pay-per-success model, ensuring you are only charged for successful API calls. For more complex tasks, its Scraping Browser integrates directly with popular automation libraries like Puppeteer and Playwright, allowing you to run existing browser-based scripts through ZenRows' unblocking infrastructure with minimal code changes.

Best for:

Bypassing Advanced Anti-Bot Systems: Its primary use case is successfully scraping websites that employ sophisticated WAFs and JavaScript challenges.

Developers Using Puppeteer/Playwright: Teams can easily route their existing browser automation scripts through ZenRows to add powerful proxy and anti-bot capabilities.

Cost-Optimized Scraping: The ability to tune parameters allows developers to use cheaper, basic requests for simple sites and reserve more expensive requests for protected targets.
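The cost-optimization idea above can be expressed as an escalation ladder: try the cheapest request type first and move up only when it fails. In this sketch the tier names, the `BlockedError` exception, and the demo fetchers are hypothetical; in practice each tier would be the same API call with different parameters (for example, rendering or premium proxies enabled), each costing more credits.

```python
from typing import Callable, Optional


class BlockedError(Exception):
    """Raised by a fetcher when the response looks blocked or incomplete."""


def fetch_with_escalation(url: str,
                          tiers: list[tuple[str, Callable[[str], str]]]
                          ) -> tuple[str, str]:
    """Try each (tier_name, fetcher) in order; return (tier_name, html).

    Cheaper tiers come first, so protected pages pay for expensive options
    only after the basic request has failed.
    """
    last_error: Optional[Exception] = None
    for name, fetcher in tiers:
        try:
            return name, fetcher(url)
        except BlockedError as exc:
            last_error = exc
    raise RuntimeError(f"all tiers failed for {url}") from last_error


# Demo fetchers: the basic tier is blocked, the rendered tier succeeds.
def basic(url: str) -> str:
    raise BlockedError("challenge page returned")


def rendered(url: str) -> str:
    return "<html>full content</html>"


tier, html = fetch_with_escalation("https://example.com", [("basic", basic), ("rendered", rendered)])
print(tier, html)
```

A real system would also remember which tier worked for each domain, so later requests to the same site start there instead of repeating the failed cheap attempt.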

Pros: High documented success rates on protected sites; transparent plan structure with a free trial and ongoing free quota.

Cons: Rendered or "protected" requests consume more credits than basic ones; requires parameter tuning to optimize spend.

Website: https://www.zenrows.com/

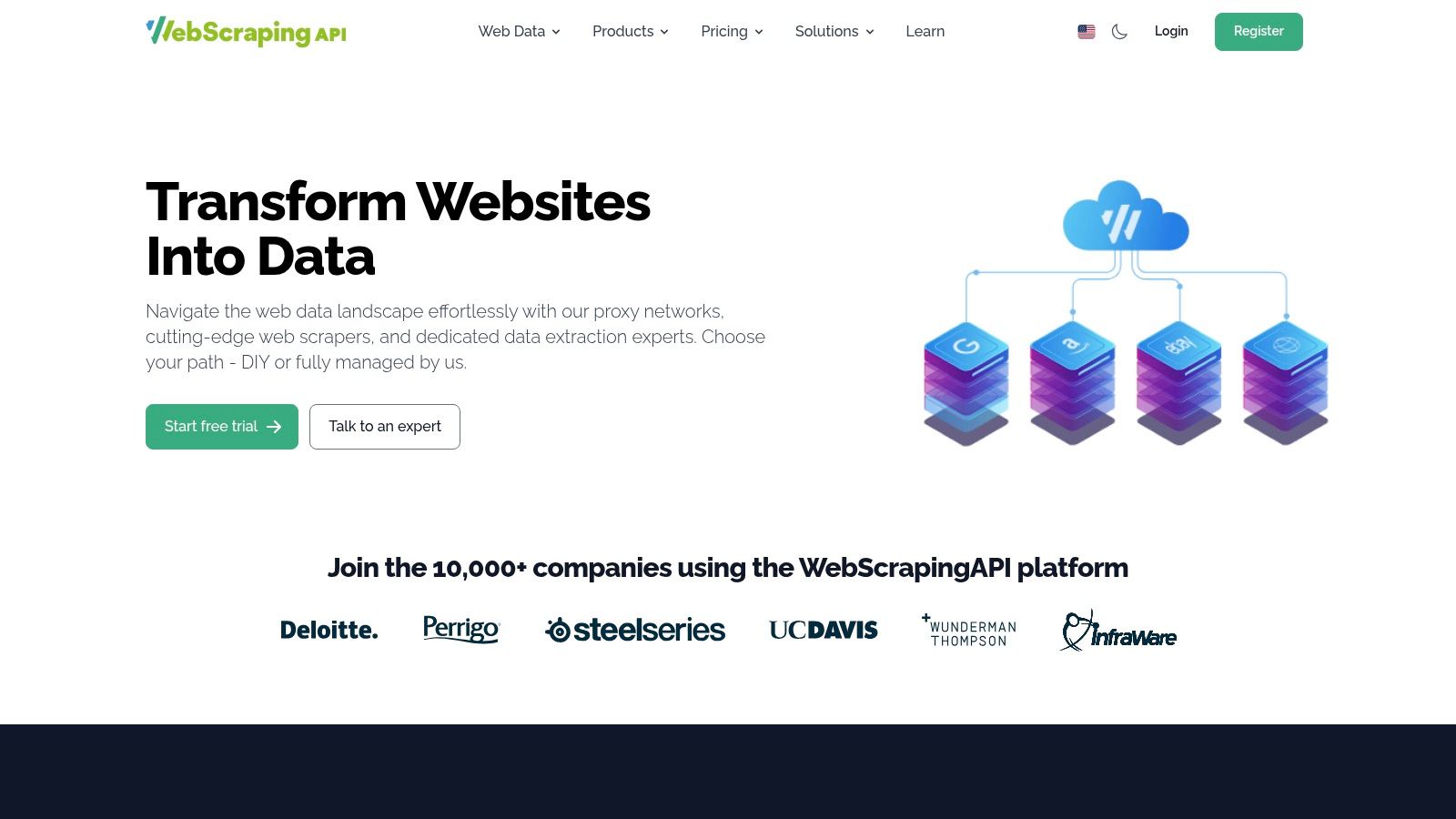

9. WebScrapingAPI

WebScrapingAPI offers a suite of tools that appeal to developers looking for a versatile, all-in-one data extraction platform. It bundles a general-purpose scraping API with specialized endpoints for SERP and e-commerce, residential proxies, and managed services, making it a strong candidate for teams who prefer sourcing multiple scraping solutions from a single vendor.

The platform's key distinction is its accessibility, offering low-cost entry points and clear free trial allowances for each of its products. This allows developers to thoroughly test specific functionalities, like JavaScript rendering or geotargeting, before committing to a paid plan.

Caption: The WebScrapingAPI dashboard gives an overview of usage and available tools. Source: WebScrapingAPI

Key Features & Use Case

WebScrapingAPI’s main appeal is its consolidated offering. Instead of integrating a separate proxy provider and a SERP API, a team can manage everything under one account and billing system. Its specialized APIs for Google and Amazon are particularly useful, as they are pre-configured to handle the unique anti-bot measures of these complex targets.

Best for:

Startups & Small Teams: The low entry pricing and generous free trials make it easy to get started without a large initial investment.

Multi-Faceted Projects: Ideal for developers who need to scrape standard websites, search engine results, and e-commerce platforms simultaneously.

SEO & E-commerce Monitoring: The dedicated SERP and Amazon APIs are purpose-built for reliably tracking rankings and product data.

Pros: Multiple specialized APIs and proxy services available from a single vendor; very low starter pricing and clear trial allowances.

Cons: The multi-product menu can be confusing for new users; API credit quotas vary per product and require careful management.

Website: https://www.webscrapingapi.com/

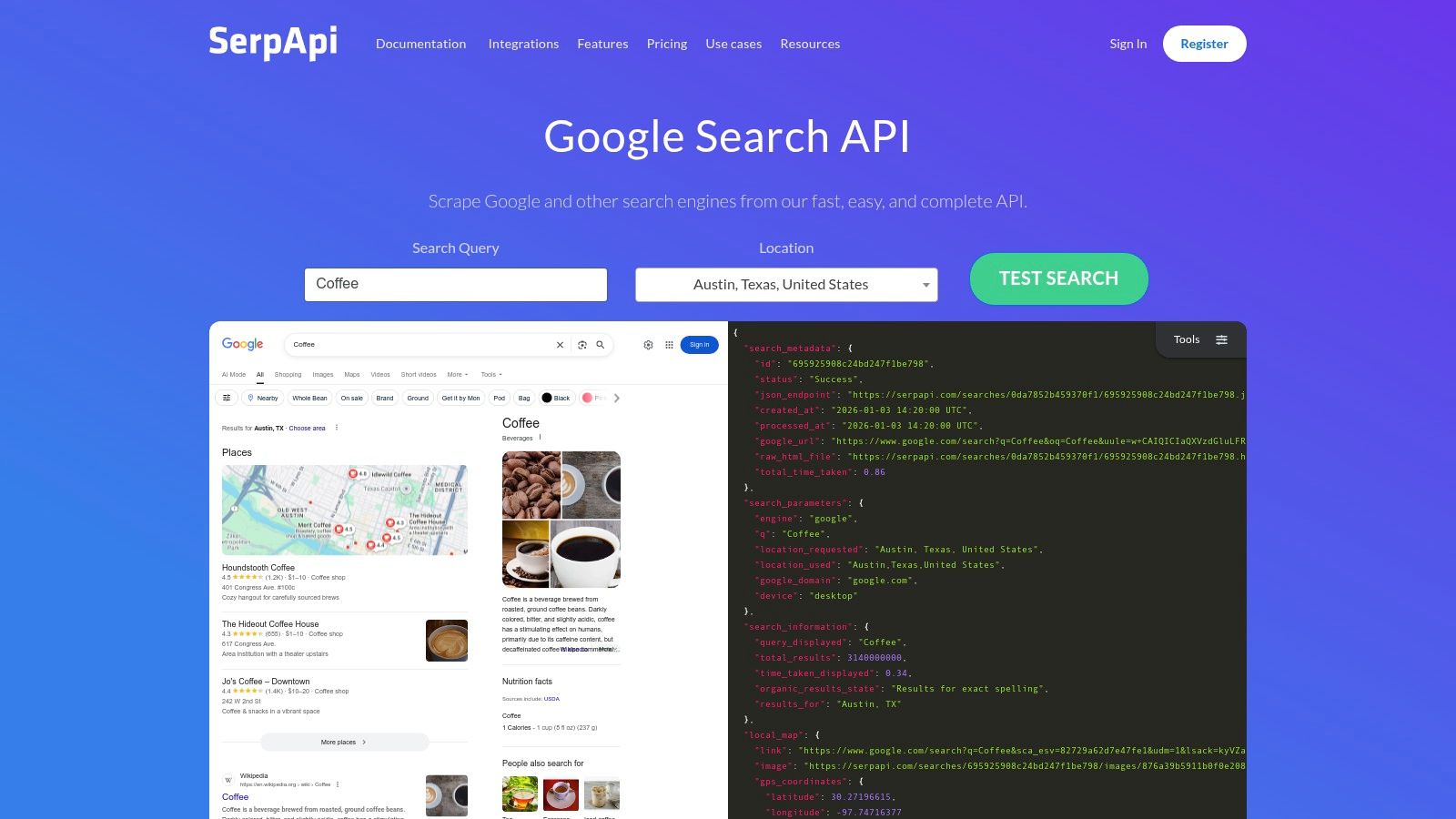

10. SerpAPI — Google and multi-engine SERP API

SerpAPI is a specialized and mature API focused exclusively on scraping search engine results pages (SERPs). Instead of returning raw HTML from any website, it provides real-time, parsed JSON data from a vast array of search engines, including Google, Bing, Baidu, and DuckDuckGo. This focus makes it a top-tier solution for developers who need reliable, structured search data without the overhead of parsing complex SERP layouts themselves.

Its primary strength is its reliability and ease of use within its niche. The API handles proxies and CAPTCHA solving, and it keeps its parsers up to date as search engines change their result layouts. This allows teams to integrate search data into their applications quickly.

Caption: SerpAPI focuses exclusively on providing structured JSON data from search engines. Source: SerpAPI

Key Features & Use Case

A standout feature is the U.S. Legal Shield offered on Production and higher-tier plans. According to their site, this provides a legal guarantee for U.S.-based customers, indemnifying them against claims related to scraping public data from supported search engines. This legal backing, combined with deep geotargeting options, makes it an invaluable asset for SEO and marketing analytics. For comprehensive monitoring, many top SERP Tracking Tools rely on robust data sources like this.

Best for:

SEO & Marketing Agencies: Perfect for rank tracking, keyword research, and competitive analysis by pulling structured data.

Data Analytics Teams: Enables gathering large-scale search trend data for market research and business intelligence.

Risk-Averse Enterprises: The Legal Shield provides a layer of protection that is rare in the industry.
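For rank tracking, the parsed JSON is what saves the work. SerpAPI's Google results include an `organic_results` array whose entries carry fields such as `position`, `title`, and `link`; the sketch below assumes that shape and uses a hand-written sample response rather than a live call.

```python
from typing import Optional
from urllib.parse import urlparse


def rank_of_domain(serp: dict, domain: str) -> Optional[int]:
    """Return the first organic position whose link is on `domain`, else None."""
    for result in serp.get("organic_results", []):
        host = urlparse(result.get("link", "")).hostname or ""
        # Match the domain itself or any subdomain of it.
        if host == domain or host.endswith("." + domain):
            return result.get("position")
    return None


# Hand-written sample shaped like a parsed SERP response (not real data).
sample = {
    "organic_results": [
        {"position": 1, "title": "Example A", "link": "https://www.competitor.com/page"},
        {"position": 2, "title": "Example B", "link": "https://blog.example.com/post"},
    ]
}
print(rank_of_domain(sample, "example.com"))
```

Running this per keyword on a schedule and storing the returned positions is the core loop of a basic rank tracker.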

Pros: Consistent and reliable SERP parsing with excellent documentation; U.S. Legal Shield on higher tiers de-risks usage.

Cons: Not a general-purpose web scraper, focused only on search engines; throughput caps on plans may require careful capacity planning.

Website: https://serpapi.com/

11. Diffbot — Automatic Extraction & Knowledge Graph

Diffbot takes a different approach to web data extraction, moving beyond raw HTML to provide structured, semantic data. Instead of requiring developers to write site-specific parsers, it uses AI to automatically identify and extract entities like articles, products, people, and organizations from a URL. This makes it a powerful web scraping API for teams that need clean, normalized data without the overhead of maintaining custom selectors.

Its core value proposition is its ability to turn the unstructured web into a structured database. By using its page-type-specific Extract APIs (e.g., Article API, Product API), you get a consistent JSON object returned, regardless of the target site's layout.
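The benefit of a consistent response shape is that downstream code can stay site-agnostic. This sketch assumes an Article API response containing an `objects` array whose items have fields like `type`, `title`, `author`, `date`, and `pageUrl`; verify the field names against Diffbot's current documentation. The sample below is hand-written, not real output.

```python
def to_records(response: dict) -> list[dict]:
    """Flatten an extraction response into uniform records for storage.

    Missing fields become None, so every record has the same keys whatever
    site the article came from.
    """
    fields = ("title", "author", "date", "pageUrl", "text")
    return [
        {field: obj.get(field) for field in fields}
        for obj in response.get("objects", [])
        if obj.get("type") == "article"
    ]


sample = {
    "objects": [
        {"type": "article", "title": "Hello", "author": "A. Writer",
         "pageUrl": "https://example.com/hello", "text": "Body text"},
    ]
}
print(to_records(sample))
```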

Caption: Diffbot uses AI to automatically extract structured data and build a Knowledge Graph. Source: Diffbot

Key Features & Use Case

Diffbot's standout feature is its commercial Knowledge Graph, a massive, pre-crawled index of web entities. You can use its Search and Enhance endpoints to enrich existing data, find organizations that match specific criteria, or build comprehensive profiles on people and companies. This transforms the tool from a simple scraper into a data enrichment and intelligence platform.

Best for:

Data Enrichment & Market Intelligence: Ideal for teams needing to build rich datasets on companies, people, and products by leveraging the Knowledge Graph.

Content Aggregation: Automatically extracts and normalizes articles, discussions, and news content from various sources into a unified format.

Semantic Analysis: Best for projects that require understanding the relationships between entities on the web.

Pros: Minimal selector writing required; provides a web-scale Knowledge Graph for enrichment; good for entity and semantic use cases.

Cons: Higher cost per volume than basic scraping APIs; best suited for structured page types, as custom layouts may need tuning.

Website: https://www.diffbot.com/

12. Rapid (RapidAPI) — API Hub/Marketplace

RapidAPI is not a web scraping API itself but a large API marketplace (it bills itself as the world's largest) where developers can find, test, and integrate thousands of APIs, including many for web scraping. It acts as a centralized hub, allowing you to compare scraping providers using a single account, a unified API key, and consolidated billing.

Its main value proposition is streamlining the evaluation process. Instead of signing up for multiple free trials across different services, developers can use RapidAPI's in-browser "API Playground" to test endpoints from various vendors side-by-side. This unified interface makes it a practical starting point for finding the best web scraping API for a specific project.

Caption: RapidAPI serves as a marketplace to discover and manage subscriptions to many different APIs. Source: RapidAPI

Key Features & Use Case

The platform's strength lies in its discovery and management capabilities. You can search and filter through a vast catalog of scraping APIs based on features, popularity, or pricing. Once you subscribe to an API through the marketplace, you manage all your subscriptions, view analytics, and handle billing from a single dashboard.
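In practice, the unified key means every marketplace API is called with the same two headers, `X-RapidAPI-Key` and `X-RapidAPI-Host`. The sketch builds (but does not send) such a request with the standard library; the host, path, and `url` parameter are hypothetical placeholders, since each listing documents its own endpoints and parameters.

```python
import urllib.parse
import urllib.request


def build_rapidapi_request(api_key: str, host: str, path: str,
                           params: dict[str, str]) -> urllib.request.Request:
    """Build a GET request for any RapidAPI listing using the shared key."""
    query = urllib.parse.urlencode(params)
    url = f"https://{host}{path}?{query}"
    # urllib stores header names capitalized (e.g. "X-rapidapi-key");
    # HTTP header names are case-insensitive, so this is harmless.
    return urllib.request.Request(url, headers={
        "X-RapidAPI-Key": api_key,  # same key across every subscribed API
        "X-RapidAPI-Host": host,    # identifies which listing you are calling
    })


# Hypothetical listing host and path, for illustration only.
req = build_rapidapi_request("YOUR_RAPIDAPI_KEY", "some-scraper.p.rapidapi.com",
                             "/scrape", {"url": "https://example.com"})
print(req.full_url)
print(req.header_items())
```

Switching vendors then mostly means changing the host, path, and parameters, while authentication stays the same.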

Best for:

API Discovery & Comparison: Ideal for developers in the research phase who want to quickly test and compare several scraping APIs.

Project Prototyping: The API Playground allows for rapid, low-friction testing to validate if a specific scraping API meets the project's needs.

Centralized API Management: Teams that use many different third-party APIs benefit from consolidating subscriptions and billing.

Pros: Fast vendor comparison and low-friction trials from one place; helpful when procurement prefers a marketplace channel and unified billing.

Cons: Rapid is not a scraper itself, so quality varies widely between individual API listings; some premium features may be hidden until after subscription.

Website: https://rapidapi.com/

Top 12 Web Scraping APIs Comparison

| Product | Core capabilities | Target audience & use cases | Quality & reliability | Pricing & value proposition |
|---|---|---|---|---|
| CrawlKit | Scrape, Extract to JSON, Search, Screenshots, LinkedIn | Developers, AI engineers, data teams; RAG pipelines, lead gen | Proxies/anti-bot abstracted away; high success rates | Pay-as-you-go; starts free; credits never expire |
| Zyte — Zyte API | Auto anti-bot, managed headless rendering, HTML/rendered responses | Teams needing robust unblocking and one endpoint for all content types | Smart unblocking per request, high rate limits, success-based billing | Pay-per-success by site tier, volume discounts, small trial |
| Bright Data — Web Scraper API | Multiple APIs (records/GB/browser), managed services, datasets | Enterprises, regulated/large-scale operations, teams needing SLAs | Enterprise SLAs, high unblocking success, content verification | Per-1K or per-GB pricing, enterprise plans, higher cost |
| Oxylabs — Web Scraper API family | Suite of APIs (web/e-commerce/SERP), premium proxies, JS rendering | Teams needing a premium proxy pool, per-target pricing, scalable scraping | Broad geographic proxies, success-based billing, JS calls cost more | Per-result billing, free trial, tiered pricing |
| Apify — Platform & Actors | Marketplace of "Actors", SDKs, scheduler, datasets, webhooks | Fast prototyping with prebuilt scrapers, no-code + code control | Large ecosystem, proven Actors, variable Actor quality (check ratings) | Usage-based billing, monthly credit bundles, Actor fees may apply |
| ScraperAPI | URL-to-HTML API, proxies, browser rendering, CAPTCHA handling | Developers seeking a drop-in URL-to-HTML solution at scale | Automatic retries, geotargeting, concurrency scaling, analytics dashboard | Credit-based model, generous trial, competitive mid-market pricing |
| ScrapingBee | Rotating proxies, JS rendering, screenshots, Google Search API | Developers who want a simple API, clear docs, predictable monthly credits | Transparent tiers, good docs, 1,000 free test calls, JS uses more credits | Tiered credit packs, clear pricing, higher concurrency on larger plans |
| ZenRows — Universal Scraper API | AI Web Unblocker, proxy rotation, JS rendering, browser integration | Teams prioritizing bypassing modern WAFs and high-success scraping | High documented success rates on protected sites, pay-per-success | Pay-per-success model, free trial/quota, rendered requests cost more |
| WebScrapingAPI | General & specialized APIs (SERP, Amazon), residential proxies | Teams wanting multiple scraping products from one vendor, low-cost entry | Success-based billing, clear trial allowances, per-product quotas | Low starter pricing, product-specific quotas and tiers |
| SerpAPI — SERP API | Real-time parsed SERP JSON, geotargeting, multi-engine coverage | Use cases focused on search results, SERP monitoring, SEO analytics | Consistent uptime, strong docs & libraries, optional U.S. Legal Shield | Tiered plans with throughput caps, pricing by number of queries |
| Diffbot — Extraction & KG | Page-type extractors, Knowledge Graph, Bulk Extract | Teams needing semantic/entity extraction and data enrichment | High-quality structured entities, web-scale Knowledge Graph | Credit-based pricing, higher cost per volume vs basic scrapers |
| Rapid (RapidAPI) | API marketplace, unified key/billing, in-browser testing | Procurement teams, rapid vendor comparison, trialing multiple APIs | Varies by vendor listing; simplifies testing & management | Marketplace billing model; pricing depends on chosen APIs |

Frequently Asked Questions (FAQ)

1. What is a web scraping API?

A web scraping API is a service that lets a developer programmatically retrieve data from a website with a simple HTTP request. The best web scraping APIs handle the complex parts of the process, such as managing rotating proxies, rendering JavaScript, and solving CAPTCHAs, so the developer can focus on using the data.

2. Is using a web scraping API legal?

Scraping publicly accessible data is often lawful, but it is not risk-free. In hiQ Labs v. LinkedIn, the Ninth Circuit held that scraping public pages likely does not violate the U.S. Computer Fraud and Abuse Act, yet the same litigation later found that hiQ had breached LinkedIn's user agreement. Legality can depend on the data being scraped (e.g., copyrighted or personal data), the method used, the jurisdiction, and the website's terms of service. Always respect robots.txt files and consult legal counsel for specific use cases.

3. Why use a web scraping API instead of building my own scraper?

Building a scraper with libraries like Puppeteer or Selenium is feasible for small projects. Reliable, large-scale scraping, however, requires managing proxy networks, headless browser farms, and systems to bypass anti-bot technologies. A web scraping API abstracts that complexity away, saving significant development time and maintenance costs.

4. How much does a web scraping API cost?

Pricing varies widely. Many services offer free credits to start; CrawlKit, for example, gives new users 1,000 credits, and ScrapingBee offers 1,000 free API calls. Paid plans are usually usage-based, for example billed per successful request, and can range from around $25/month for small projects to thousands for enterprise-level operations. Features like JavaScript rendering or premium residential proxies typically increase the cost per request.

5. What is the difference between a proxy and a scraping API?

A proxy is an intermediary server that masks your IP address. A web scraping API is a complete solution: it includes a proxy network but adds headless browser rendering, CAPTCHA solving, automatic retries, and management of user agents and browser fingerprints to maximize success rates.

6. Which API is best for scraping JavaScript-heavy websites?

APIs like CrawlKit, Zyte, and ZenRows are strong choices for JavaScript-heavy sites such as Single-Page Applications. Their built-in headless browser rendering executes the site's JavaScript, so you receive the fully loaded HTML just as a user would see it in the browser.

7. Can a web scraping API extract data into a structured format like JSON?

Yes. While some APIs return raw HTML, more advanced services like CrawlKit and Diffbot return structured data. Depending on the service, they parse the page with CSS selectors or a schema you define (as with CrawlKit's /extract endpoint) or with AI-driven page-type detection (as with Diffbot), and return clean JSON, which greatly reduces the post-processing and parsing you need to do.
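To make the selector-based approach concrete, here is a deliberately tiny local version that applies a schema like the CrawlKit example earlier (`{"title": "h1", "price": ".price", ...}`) to an HTML string. It is an illustrative sketch, not how any of these services work internally: it supports only bare tag names and single `.class` selectors, returns the first match's text, and uses only the standard library's `html.parser`.

```python
from html.parser import HTMLParser


class SchemaExtractor(HTMLParser):
    """Collect the text of the first element matching each selector.

    Supported selectors: a bare tag name ("h1") or a single class (".price").
    """

    def __init__(self, schema: dict[str, str]):
        super().__init__()
        self.schema = schema
        self.result: dict[str, str] = {}
        self.capturing: dict[str, list] = {}  # field -> [tag, depth, text parts]

    def _matches(self, selector: str, tag: str, attrs) -> bool:
        if selector.startswith("."):
            classes = (dict(attrs).get("class") or "").split()
            return selector[1:] in classes
        return selector == tag

    def handle_starttag(self, tag, attrs):
        # Track nesting of the same tag inside elements already being captured.
        for state in self.capturing.values():
            if state[0] == tag:
                state[1] += 1
        for field, selector in self.schema.items():
            if field in self.result or field in self.capturing:
                continue
            if self._matches(selector, tag, attrs):
                self.capturing[field] = [tag, 1, []]

    def handle_endtag(self, tag):
        for field in list(self.capturing):
            state = self.capturing[field]
            if state[0] == tag:
                state[1] -= 1
                if state[1] == 0:  # the matched element just closed
                    self.result[field] = " ".join("".join(state[2]).split())
                    del self.capturing[field]

    def handle_data(self, data):
        for state in self.capturing.values():
            state[2].append(data)


def extract(html: str, schema: dict[str, str]) -> dict[str, str]:
    """Apply the schema; fields with no match are omitted from the result."""
    parser = SchemaExtractor(schema)
    parser.feed(html)
    parser.close()
    return parser.result


page = """
<html><body>
  <h1>Blue Widget</h1>
  <span class="price sale">$19.99</span>
  <div class="description">A <b>sturdy</b> widget.</div>
</body></html>
"""
print(extract(page, {"title": "h1", "price": ".price", "description": ".description"}))
```

Hand-written selectors like these break whenever a site changes its markup, which is the maintenance burden that AI-based extraction aims to reduce.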

Next steps

The key takeaway is that there is no single "best" solution for everyone; the ideal API is the one that aligns perfectly with your project's technical requirements, scale, budget, and target websites. Your next step is to shortlist two or three top contenders from this list and put them to the test against your actual target domains.

How to Choose Your Web Scraping API: A Practical Checklist

- Define Your Core Use Case: Are you building a price monitoring tool, a lead generation engine, or a data pipeline for an AI model?

- Identify Your Target Sites: List the websites you need to scrape. Note if they are static HTML or dynamic, JavaScript-heavy applications.

- Run a "Proof of Concept" Test: Sign up for the free trials of your top candidates. Test difficult URLs, assess the data quality, and evaluate performance.

- Review Developer Experience (DX): How easy is it to get started? Check the documentation quality, API design, and available tooling like an API playground.

- Project Future Costs: Use the pricing calculators to model your expected costs based on your projected monthly request volume and feature needs.
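For the "Identify Your Target Sites" step, a quick heuristic can flag pages that probably need JavaScript rendering: fetch the raw HTML and check whether it is mostly an empty application shell. The mount-point IDs and the 200-character threshold below are assumptions chosen for illustration, so confirm any verdict by comparing against a rendered response.

```python
import re

# Assumed markers: empty mount points commonly used by client-side frameworks.
EMPTY_MOUNT_POINT = re.compile(r'<div id="(?:root|app|__next)">\s*</div>', re.IGNORECASE)


def visible_text(raw_html: str) -> str:
    """Very rough visible text: drop scripts/styles, then all remaining tags."""
    html = re.sub(r"<(script|style)\b.*?</\1>", " ", raw_html, flags=re.DOTALL | re.IGNORECASE)
    text = re.sub(r"<[^>]+>", " ", html)
    return " ".join(text.split())


def likely_needs_rendering(raw_html: str, min_text_chars: int = 200) -> bool:
    """Guess whether the page's content is injected by JavaScript."""
    if EMPTY_MOUNT_POINT.search(raw_html):
        return True
    return len(visible_text(raw_html)) < min_text_chars


spa = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'
static = "<html><body><article>" + "Plain server-rendered text. " * 20 + "</article></body></html>"
print(likely_needs_rendering(spa), likely_needs_rendering(static))
```

A `False` result does not prove a page can be scraped without rendering; some sites serve full HTML but still gate it behind bot checks.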

By methodically working through this checklist, you transform the search for the best web scraping API from a guessing game into an informed, data-driven decision.

Ready to skip the infrastructure setup and start getting clean, structured data immediately? CrawlKit is a developer-first web scraping API designed to handle the complexities of proxies, browser rendering, and anti-bot systems for you. Start Free and get your first 1,000 credits on us.
