Meta Title: How to Build a Web Crawler: A Practical Developer's Guide

Meta Description: Learn how to build a web crawler from scratch. We cover architecture, Python examples, handling JavaScript, and scaling data extraction pipelines.

Learning how to build a web crawler is about turning the web’s unstructured chaos into clean, actionable data. This guide is a practical blueprint, covering everything from core architecture and respecting politeness policies to navigating today's dynamic, JavaScript-heavy sites. We’ll also explore why many developers move from DIY setups to powerful APIs that abstract away the messy infrastructure, letting them focus purely on the data.

Table of Contents

- The Modern Landscape of Web Data Extraction

- Designing Your Crawler's Architecture

- Building a Simple Crawler in Python

- Handling Modern Web Challenges

- Storing and Scaling Your Crawled Data

- Frequently Asked Questions

- Next Steps

The Modern Landscape of Web Data Extraction

A web crawler is the engine that converts raw web content into structured datasets for analysis and application development. Source: Unsplash.

Building a web crawler today is more complex than just sending a few HTTP requests. The modern web is built on client-side JavaScript, protected by sophisticated anti-bot measures, and governed by unwritten rules of engagement. A simple script that works on a static site will fail against the dynamic content powering most modern applications.

Key Challenges in Web Crawling

The path from a basic script to a robust, production-ready crawler is filled with significant hurdles. Developers must address a mix of technical and ethical considerations to build an effective and respectful tool.

- JavaScript-Rendered Content: Many sites use frameworks like React or Vue, loading data long after the initial HTML page arrives. A simple crawler often sees a blank page. You need a headless browser to execute JavaScript and see what a real user sees.

- Anti-Bot Systems: Websites actively defend their data with CAPTCHAs, IP-based rate limiting, and browser fingerprinting to block automated traffic. Bypassing these requires a distributed network of proxies and sophisticated request management.

- Politeness and Ethics: A good crawler is not a battering ram. It must respect a site’s robots.txt file, which outlines rules for bots. Ignoring these rules or crawling too aggressively can get your IP blocked and overload the target server.

DIY vs. Managed Infrastructure

These challenges push many developers to a critical decision: invest time and resources in managing servers, proxy networks, and browser farms, or offload the heavy lifting?

The operational overhead often becomes a distraction. This is where a developer-first, API-first platform like CrawlKit becomes invaluable. It completely abstracts away the scraping infrastructure, so you can focus on the data. Proxies, browser rendering, and anti-bot systems are handled automatically. You make a simple API call and get clean JSON data from any website. You can start free and see the difference.

You can check out the huge range of web scraping use cases that are made possible by this streamlined approach.

Designing Your Crawler's Architecture

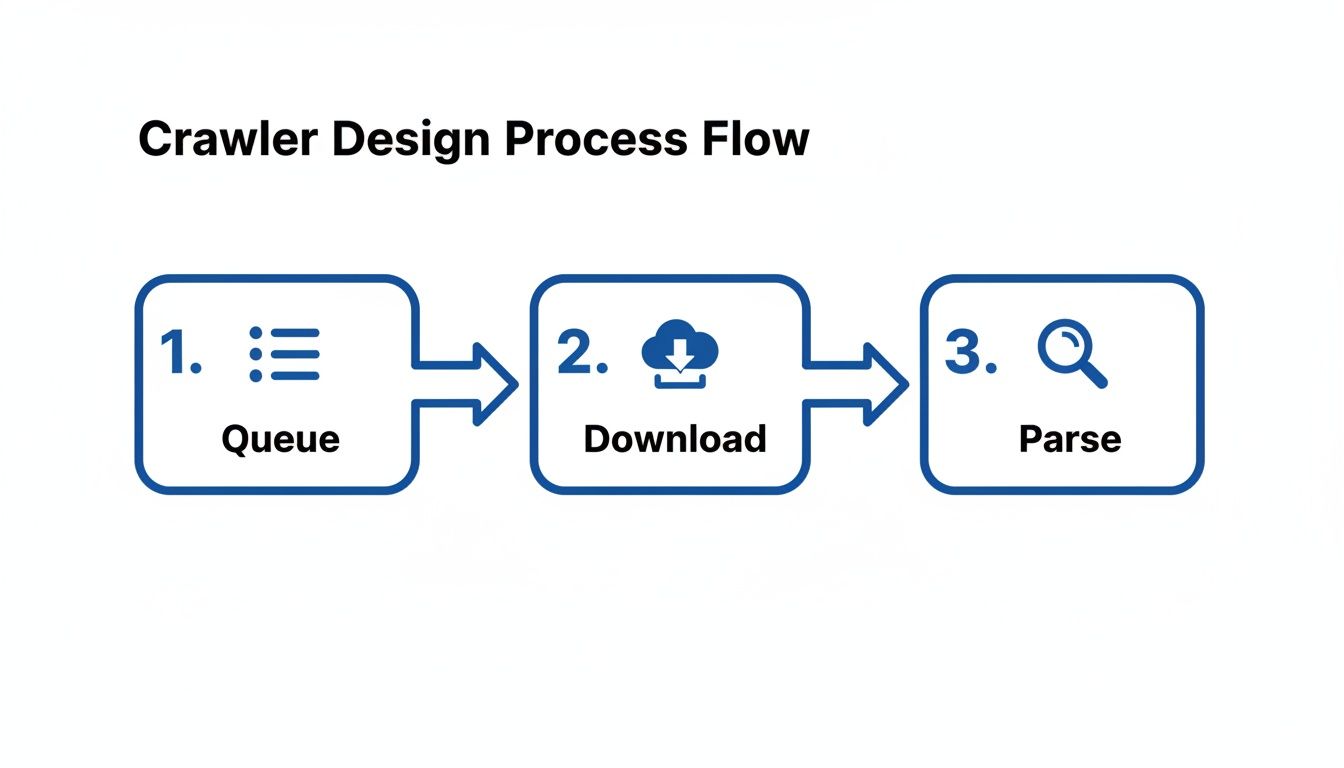

The core crawling process involves managing a URL queue, downloading content, and parsing it for data and new links. Source: Wikimedia Commons.

Before writing any code, you need a solid blueprint. A good design distinguishes an efficient crawler from one that constantly breaks. At its heart, every web crawler operates in a continuous loop between four key components.

- URL Frontier: This is the brain of the operation. It manages a queue of URLs to be crawled, starting with an initial "seed" list and expanding as it discovers new links.

- Downloader: This workhorse takes a URL from the frontier and makes an HTTP request to fetch the raw HTML content.

- Parser: Once the content is downloaded, the parser scans the HTML to extract the target data and discover new links to add back to the URL frontier.

- Data Store: This is where your extracted information is saved. It can be a simple CSV file or a structured database, depending on your needs.
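The loop between these four components can be sketched in a few lines. This is a minimal illustration, not a production design: `fetch` is a hypothetical callable standing in for the downloader, and `FAKE_SITE` is made-up data so the loop runs without touching the network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkParser(HTMLParser):
    """Parser: collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Run the frontier -> downloader -> parser -> data store loop.

    `fetch` is a hypothetical callable (url -> HTML string) that plays
    the role of the downloader.
    """
    frontier = deque(seed_urls)  # URL frontier, seeded with the start list
    seen = set(seed_urls)
    store = {}                   # data store: url -> number of links found

    while frontier and len(store) < max_pages:
        url = frontier.popleft()
        html = fetch(url)                # downloader
        parser = LinkParser()            # parser
        parser.feed(html)
        store[url] = len(parser.links)   # data store
        for href in parser.links:
            absolute = urljoin(url, href)
            if absolute not in seen:     # feed new links back to the frontier
                seen.add(absolute)
                frontier.append(absolute)
    return store


# A fake two-page site (illustrative data only).
FAKE_SITE = {
    "https://example.com/": '<a href="/about">About</a>',
    "https://example.com/about": '<a href="/">Home</a>',
}

result = crawl(["https://example.com/"], lambda url: FAKE_SITE.get(url, ""))
print(result)
```

Swapping the lambda for a real HTTP client and the dictionary for a file or database turns the same loop into a working crawler.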

Monolithic vs. Distributed Architecture

The scale of your project is the single biggest factor in choosing your architecture. For small jobs, like crawling a single website, a monolithic architecture is best. Everything runs on one machine. It’s simple, easy to manage, and perfect for learning or targeted data tasks.

When you need to crawl millions of pages, a monolithic setup will grind to a halt. That’s when you need a distributed architecture. By splitting components across multiple servers, you can scale horizontally, processing pages in parallel for greater speed and resilience.
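One way to split work across machines, shown here only as a sketch, is to assign each URL to a worker by hashing its hostname. The function name `assign_worker` and the choice of SHA-256 are assumptions made for illustration; real systems often use a message queue or consistent hashing instead.

```python
import hashlib
from urllib.parse import urlparse


def assign_worker(url, num_workers):
    """Map a URL to a worker index based on its hostname.

    Hashing the hostname (not the full URL) keeps every page of a site
    on the same worker, so per-domain delays can be enforced locally.
    """
    host = urlparse(url).netloc.lower()
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_workers


for url in ["https://example.com/a", "https://example.com/b", "https://example.org/"]:
    print(url, "-> worker", assign_worker(url, 4))
```

The trade-off is that very large sites can overload a single worker, which is one reason production crawlers tend to add a rebalancing layer on top.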

Comparing Monolithic and Distributed Crawler Architectures

This table breaks down the pros and cons of monolithic and distributed architectures to help you decide which path is right for your project's scale and complexity.

| Feature | Monolithic Crawler | Distributed Crawler |
|---|---|---|
| Complexity | Low. Easy to set up and manage. | High. Requires message queues, coordination, and complex deployments. |
| Scalability | Limited. Bound by the resources of a single machine. | High. Can scale horizontally by adding more machines to the cluster. |
| Cost | Low initial cost. Can run on a single server or laptop. | Higher cost due to multiple servers and infrastructure management. |
| Performance | Slower. Sequential processing creates bottlenecks. | Fast. Parallel processing allows for high throughput. |
| Fault Tolerance | Low. A single point of failure can halt the entire crawl. | High. If one node fails, others can continue working. |
| Best For | Small-scale projects, single-site crawls, and proof-of-concepts. | Large-scale crawling, high-volume data extraction, and production systems. |

Ultimately, the choice comes down to your project's goals. Start simple and evolve as your needs grow.

Core Design Principles for Respectful Crawling

A great crawler is not just fast—it's also a good internet citizen. Building politeness into your architecture from day one is a requirement.

First, your crawler must always check and obey a website's robots.txt file. This file outlines rules for bots, specifying which parts of the site are off-limits. Ignoring it is the fastest way to get your IP address banned.
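Python's standard library includes `urllib.robotparser` for this check. The sketch below parses an inline robots.txt so it runs offline; the file contents and the `MyCrawler` user agent are made up for illustration. In a real crawl you would call `set_url()` and `read()` to download the site's actual file.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content (illustrative, not from a real site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "MyCrawler"  # hypothetical user agent name

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

allowed_public = robots.can_fetch(USER_AGENT, "https://example.com/blog/post")
allowed_private = robots.can_fetch(USER_AGENT, "https://example.com/private/data")
delay = robots.crawl_delay(USER_AGENT)  # None when no Crawl-delay is set

print(allowed_public, allowed_private, delay)
```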

Second, implement a crawl delay. Hammering a server with rapid-fire requests can slow it down for human users and get you blocked. A simple delay between requests to the same domain shows respect. Some robots.txt files even specify a Crawl-delay directive to follow.
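A per-domain delay can be sketched with a small helper that remembers when each domain was last requested. `DomainThrottle` is a hypothetical class written for this example; the injectable `clock` and `sleep` arguments exist only so the behavior can be checked without actually waiting.

```python
import time
from urllib.parse import urlparse


class DomainThrottle:
    """Enforce a minimum delay between requests to the same domain."""

    def __init__(self, delay_seconds=1.0, clock=time.monotonic, sleep=time.sleep):
        self.delay = delay_seconds
        self._clock = clock
        self._sleep = sleep
        self._last_request = {}  # domain -> time of the previous request

    def wait(self, url):
        """Block until `url`'s domain may be requested; return seconds waited."""
        domain = urlparse(url).netloc
        last = self._last_request.get(domain)
        waited = 0.0
        if last is not None:
            remaining = self.delay - (self._clock() - last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last_request[domain] = self._clock()
        return waited


# Typical use inside a crawl loop (network call omitted here):
#   throttle = DomainThrottle(delay_seconds=1.0)
#   throttle.wait(url)
#   ... fetch url ...
```

Because the delay is tracked per domain, requests to different sites are not slowed down by each other.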

State Management and Avoiding Duplicates

As your crawler explores the web, it will find the same URLs repeatedly. Re-crawling and re-processing the same content is a massive waste of resources.

To prevent this, you need solid state management. The most common method is to keep a set or database of every URL you've already seen. Before adding a new URL to the queue, you check against this "seen" list. This simple step ensures every page is processed only once, making your crawler dramatically more efficient. When you're ready to start parsing, understanding the structure of raw HTML responses is fundamental.
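A "seen" check works best when URLs are normalized first, since the same page often appears under slightly different spellings. The sketch below is one possible approach, not a complete canonicalization scheme: `normalize_url` and `should_enqueue` are illustrative names, and the rules (drop the fragment, lowercase the host, default an empty path to `/`) are assumptions you may need to adjust per site.

```python
from urllib.parse import urldefrag, urlsplit, urlunsplit


def normalize_url(url):
    """Return a canonical form of `url` for duplicate detection."""
    url, _fragment = urldefrag(url)  # "#section" never changes the page fetched
    parts = urlsplit(url)
    path = parts.path or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


seen = set()


def should_enqueue(url):
    """Return True the first time a (normalized) URL is offered, False afterwards."""
    key = normalize_url(url)
    if key in seen:
        return False
    seen.add(key)
    return True


print(should_enqueue("https://Example.com/page#top"))
print(should_enqueue("https://example.com/page"))
```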

Building a Simple Crawler in Python

Let's turn that architectural blueprint into code. The best way to understand how a crawler works is to build a simple one. We'll use Python, the go-to language for this work, along with the requests and BeautifulSoup libraries.

Our script will crawl a single, static website, find all internal links, and maintain a queue of where to go next. This is the seed from which more complex crawlers grow.

Getting Your Python Environment Ready

First, you need the right tools. If you haven't used them before, install the requests and BeautifulSoup libraries. Open your terminal and run these commands:

```bash
pip install requests
pip install beautifulsoup4
```

Once installed, you're ready to start requesting pages and parsing their content.

The Core Logic: Fetch, Parse, Repeat

Now, let's write a Python script to crawl a single site. The goal is to start with one URL and discover every other page on the same domain. To avoid getting stuck in loops, we'll use a list for URLs to visit and a set for URLs we've already visited.

Here is a basic implementation:

```python
import requests
from bs4 import BeautifulSoup
from urllib.parse import urljoin, urlparse


def basic_crawler(start_url):
    urls_to_visit = [start_url]  # queue of URLs still to crawl
    visited_urls = set()         # URLs already processed
    start_domain = urlparse(start_url).netloc

    while urls_to_visit:
        current_url = urls_to_visit.pop(0)
        if current_url in visited_urls:
            continue
        # Mark as visited up front so a failing URL is not retried
        visited_urls.add(current_url)

        try:
            response = requests.get(current_url, timeout=5)
            response.raise_for_status()  # treat 4xx/5xx responses as failures
            print(f"Crawling: {current_url}")

            soup = BeautifulSoup(response.content, 'html.parser')
            for link in soup.find_all('a', href=True):
                absolute_link = urljoin(current_url, link['href'])

                # Only crawl links on the same domain
                if urlparse(absolute_link).netloc == start_domain:
                    if absolute_link not in visited_urls:
                        urls_to_visit.append(absolute_link)
        except requests.RequestException as e:
            print(f"Failed to fetch {current_url}: {e}")


# Example of how you'd run it:
# basic_crawler('https://your-target-website.com')
```

This script gives you a function that manages a queue (urls_to_visit) and tracks its history (visited_urls), providing a solid starting point.

Being a Good Web Citizen

The script we wrote works, but it's aggressive. It will hit the target server as fast as possible, which can get your IP address blocked. Let's add a "politeness" delay. We'll use Python's built-in time module.

```python
import time

# Inside your crawler's `while` loop, after a successful request:
time.sleep(1)  # Pause for 1 second before the next request
```

This one-second pause makes a big difference. It signals that your automated script is respectful of the server's resources. For most small sites, a 1–2 second delay is a safe bet.

As you can see, building and maintaining this kind of infrastructure quickly becomes a full-time job. This is where an API-first platform like CrawlKit is a lifesaver. It is built to handle all the messy parts—proxy rotation, bot detection, and politeness policies—for you. You can start for free with CrawlKit and get clean, structured JSON data back from a simple API call, letting you focus on the data, not the plumbing.

Handling Modern Web Challenges

Modern web security, including JavaScript challenges and CAPTCHAs, requires crawlers to use more advanced techniques like headless browsers. Source: Pexels.

Our simple HTTP-based crawler will quickly hit a wall on the modern web. Today's internet is dynamic, powered by JavaScript frameworks and shielded by anti-bot measures. Learning how to build a web crawler that thrives in this environment requires a much smarter approach.

Navigating JavaScript-Rendered Content

Many modern sites use client-side rendering (CSR). When a basic crawler hits one of these pages, the server often sends back a nearly empty HTML file. The real content is loaded later by JavaScript running in the browser.
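A crawler can often guess when it has received such an empty shell. The heuristic below is only a sketch under stated assumptions: it flags a page as probably client-rendered when it contains script tags but very little visible text. The 200-character threshold and the names `VisibleTextCounter` and `looks_client_rendered` are arbitrary choices for illustration, and the check will misclassify some pages.

```python
from html.parser import HTMLParser


class VisibleTextCounter(HTMLParser):
    """Count script tags and the characters of text outside <script>/<style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.text_chars = 0
        self.script_tags = 0

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.script_tags += 1
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def looks_client_rendered(html, min_text_chars=200):
    """Heuristic: scripts present but almost no visible text in the raw HTML."""
    counter = VisibleTextCounter()
    counter.feed(html)
    return counter.script_tags > 0 and counter.text_chars < min_text_chars


shell = (
    "<html><head><title>App</title></head>"
    '<body><div id="root"></div><script src="/app.js"></script></body></html>'
)
print(looks_client_rendered(shell))
```

Pages flagged this way are candidates for a rendering step, while everything else can stay on the cheaper plain-HTTP path.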

To get the real content, you need a headless browser. Tools like Puppeteer (Node.js) or Playwright (Python, Node.js) let you control a real browser engine programmatically. This gives your crawler superpowers:

- Execute JavaScript: Wait for the page to fully load, just like a human.

- Interact with elements: Click buttons, fill out forms, or scroll to trigger lazy-loaded content.

- Capture the final HTML: Grab the complete, rendered HTML for parsing.

Here’s a quick cURL example showing how an API like CrawlKit can render a JavaScript-heavy page for you, saving you from managing headless browsers yourself.

```bash
curl "https://api.crawlkit.sh/v1/scrape" \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -d '{
    "url": "https://example-react-app.com",
    "javascript": true
  }'
```

This single API call does the work of an entire fleet of headless browsers. Try the CrawlKit Playground for free and see how easy data extraction can be.

The Cat-and-Mouse Game of Anti-Bot Systems

Once you've mastered JavaScript rendering, the next challenge is dealing with websites that actively block automated traffic. Websites use various tactics to spot bots:

- IP Rate Limiting: Blocking any IP address that sends too many requests.

- CAPTCHAs: Puzzles that are easy for humans but difficult for bots.

- Browser Fingerprinting: Analyzing browser details to flag automated clients.

The key to bypassing anti-bot systems is to avoid predictable patterns. The most crucial tool is a proxy server, which hides your crawler's real IP address. By rotating through a large pool of proxies, you can spread requests across many IPs, making it much harder for a site to block you.
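Proxy rotation itself is simple to sketch; sourcing and maintaining healthy proxies is the hard part. In the example below, `ProxyRotator` is a hypothetical helper and the proxy addresses are placeholders. The returned dictionary uses the `{"http": ..., "https": ...}` shape that the `requests` library accepts for its `proxies` argument.

```python
from itertools import cycle

# Placeholder proxy addresses (assumptions for illustration only).
PROXY_URLS = [
    "http://proxy1.example:8080",
    "http://proxy2.example:8080",
    "http://proxy3.example:8080",
]


class ProxyRotator:
    """Hand out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("proxy pool must not be empty")
        self._pool = cycle(proxy_urls)

    def next_proxies(self):
        """Return a proxies mapping for the next proxy in the pool."""
        proxy = next(self._pool)
        return {"http": proxy, "https": proxy}


rotator = ProxyRotator(PROXY_URLS)

# With requests installed, each call would go out through a different proxy:
#   requests.get(url, proxies=rotator.next_proxies(), timeout=5)
print(rotator.next_proxies())
```

Round-robin is the simplest policy. A fuller version would also drop proxies that fail repeatedly.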

Managing a proxy pool is a full-time job. This is the kind of undifferentiated heavy lifting that developer-first platforms like CrawlKit eliminate. With proxies and anti-bot systems abstracted away, you can focus on the data, not the bot detection arms race.

Storing and Scaling Your Crawled Data

Effective data storage solutions, from simple files to distributed databases, are critical for managing and scaling a web crawling project. Source: Getty Images.

A crawler is only as good as the data it produces. The final—and most critical—steps are storing your findings and scaling your operation without drowning in infrastructure. These "last-mile" problems often determine whether you end up with a valuable asset or a maintenance headache.

Selecting the Right Data Storage

You need to decide where to put the collected data. This choice impacts how you'll query and analyze it.

- CSV (Comma-Separated Values): For simple, flat data, a CSV file is often sufficient. It's lightweight, universally compatible, and easy to work with.

- JSON (JavaScript Object Notation): For nested or complex structures, JSON is the industry standard. Its hierarchical format is perfect for representing complex relationships.

- Databases: For any serious, large-scale crawl, a database is necessary.

  - Relational (e.g., PostgreSQL): Best for highly structured data where integrity is paramount.

  - NoSQL (e.g., MongoDB): Perfect for semi-structured data that might change over time.

- Cloud Storage (e.g., Amazon S3): Ideal for archiving raw HTML or large files like screenshots.
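To make the options concrete, the sketch below writes the same records three ways using only the standard library. The records are made-up sample data, the files go to a temporary directory, and SQLite stands in for a relational database such as PostgreSQL purely so the example runs without a server.

```python
import csv
import json
import sqlite3
import tempfile
from pathlib import Path

# Illustrative sample records, not real crawl output.
records = [
    {"url": "https://example.com/a", "title": "Page A", "links": 3},
    {"url": "https://example.com/b", "title": "Page B", "links": 5},
]

out_dir = Path(tempfile.mkdtemp())

# CSV: flat rows, one column per field.
with open(out_dir / "pages.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.DictWriter(f, fieldnames=["url", "title", "links"])
    writer.writeheader()
    writer.writerows(records)

# JSON Lines: one JSON object per line, easy to append to during a crawl.
with open(out_dir / "pages.jsonl", "w", encoding="utf-8") as f:
    for record in records:
        f.write(json.dumps(record) + "\n")

# SQLite: the URL as primary key, so re-crawling a page updates its row.
conn = sqlite3.connect(str(out_dir / "pages.db"))
conn.execute(
    "CREATE TABLE IF NOT EXISTS pages (url TEXT PRIMARY KEY, title TEXT, links INTEGER)"
)
conn.executemany(
    "INSERT OR REPLACE INTO pages (url, title, links) VALUES (:url, :title, :links)",
    records,
)
conn.commit()
row_count = conn.execute("SELECT COUNT(*) FROM pages").fetchone()[0]
conn.close()

print(f"Wrote {row_count} rows to {out_dir}")
```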

The Scaling Challenge: Infrastructure vs. APIs

As your crawling needs grow, you'll hit a wall. Suddenly, you're not just a data engineer; you're a full-time infrastructure manager. This is when many teams switch from DIY to a managed, API-first solution.

The growth of the web crawling services market shows why so many engineers now prefer a dedicated API over maintaining self-hosted systems.

Developer-first platforms like CrawlKit abstract this complexity away. Instead of managing proxy rotation and solving CAPTCHAs, you just make a single API call. With an API, the entire distributed system of headless browsers and proxies is handled for you. For ambitious projects like using web data for AI training data collection, this is almost always the most practical path.

Here’s a cURL example showing how to get structured JSON from a page, letting the API handle the heavy lifting of extraction.

```bash
curl "https://api.crawlkit.sh/v1/extract" \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -d '{
    "url": "https://example.com/products/123",
    "fields": {
      "productName": {"selector": "h1"},
      "price": {"selector": ".product-price"}
    }
  }'
```

This approach lets you scale your data extraction on demand without ever touching a server or managing a headless browser.

Frequently Asked Questions

Is building and running a web crawler legal?

In many jurisdictions, yes, but how you crawl matters. The guiding principle is to access publicly available data without harming the website. Always check the robots.txt file and Terms of Service. In hiQ Labs v. LinkedIn, the Ninth Circuit found that scraping publicly accessible data likely did not violate the Computer Fraud and Abuse Act, but breaching a site's terms or crawling aggressively can still lead to legal issues. Avoid scraping copyrighted material for redistribution or personal data without consent.

How do I make my web crawler faster?

To improve speed, move from sequential to concurrent requests.

- Multithreading: Run multiple download threads in parallel within the same process.

- Asynchronous Programming: Use libraries like Python's asyncio to handle multiple requests without waiting for each one to complete.

- Distributed Architecture: Run multiple crawler instances across different machines for large-scale operations.
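The asynchronous option can be sketched with `asyncio` and a semaphore that caps how many requests are in flight at once. To keep the example runnable offline, `fetch` is a hypothetical stand-in that simulates latency with `asyncio.sleep`; in practice it would wrap an async HTTP client.

```python
import asyncio


async def fetch(url):
    """Hypothetical downloader: simulates network latency instead of a real request."""
    await asyncio.sleep(0.1)
    return f"<html>{url}</html>"


async def fetch_all(urls, max_concurrency=5):
    """Fetch all URLs concurrently, with at most `max_concurrency` in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        async with semaphore:
            return url, await fetch(url)

    pairs = await asyncio.gather(*(bounded_fetch(u) for u in urls))
    return dict(pairs)


urls = [f"https://example.com/page/{i}" for i in range(10)]
pages = asyncio.run(fetch_all(urls))
print(len(pages), "pages fetched")
```

The concurrency cap matters as much as the speed-up: without it, a large URL list would hit the target server all at once, which conflicts with the politeness rules described earlier.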

What is the difference between a web crawler and a web scraper?

Although often used interchangeably, they have distinct roles.

- A web crawler (or spider) discovers and indexes URLs, building a map of a site.

- A web scraper extracts specific data (like prices or text) from a page's HTML.

In practice, most projects combine both functions: the crawler finds the pages, and the scraper extracts the data.

How can I avoid getting blocked while crawling?

To avoid getting blocked, your crawler needs to act more like a human.

- Use Rotating Proxies: Spreading requests across many IP addresses is the most effective strategy.

- Set a Realistic User-Agent: Mimic a common browser instead of using a default library agent.

- Respect robots.txt: Always follow the rules, especially Crawl-delay directives.

- Handle Cookies: Managing sessions correctly makes your crawler look like a legitimate user.

- Use a Headless Browser: Render JavaScript-heavy sites completely to mimic human interaction.
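The User-Agent and cookie items can be sketched with the standard library alone. The header values below are example strings chosen for illustration, not recommendations, and no request is sent until `opener.open()` is called.

```python
import urllib.request
from http.cookiejar import CookieJar

# Example browser-style User-Agent string (an assumption for illustration).
USER_AGENT = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)

# The cookie jar stores cookies set by the server and sends them back
# on later requests made through the same opener.
cookie_jar = CookieJar()
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cookie_jar))
opener.addheaders = [
    ("User-Agent", USER_AGENT),
    ("Accept-Language", "en-US,en;q=0.9"),
]

# To fetch a page with these settings:
#   response = opener.open("https://example.com/", timeout=5)
#   html = response.read().decode("utf-8", errors="replace")
print(dict(opener.addheaders)["User-Agent"])
```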

What data format should I use to store crawled data?

The right format depends on your use case.

- CSV: Ideal for simple, flat data that can be used in spreadsheets.

- JSON: Best for structured or hierarchical data.

- Database: Necessary for large-scale projects requiring complex queries (e.g., PostgreSQL for structured data, MongoDB for semi-structured data).

How do crawlers handle duplicate content and crawl traps?

Efficient crawlers avoid re-doing work and getting stuck in infinite loops.

- Duplicate Handling: Crawlers maintain a record of visited URLs (using a set, database, or Bloom filter) to avoid re-crawling pages.

- Crawl Trap Avoidance: To avoid traps like endless calendar pages, crawlers can set a maximum crawl depth, limit the number of URLs per domain, or detect and ignore repetitive URL patterns.
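Two of these guards, a depth limit and a per-domain URL cap, fit naturally into the frontier itself. `BoundedFrontier` below is a hypothetical class sketched for illustration, and its default limits are arbitrary. Pattern-based trap detection is left out to keep the example short.

```python
from collections import Counter, deque
from urllib.parse import urlparse


class BoundedFrontier:
    """URL queue that rejects duplicates, deep links, and over-quota domains."""

    def __init__(self, max_depth=3, max_urls_per_domain=1000):
        self.max_depth = max_depth
        self.max_urls_per_domain = max_urls_per_domain
        self._queue = deque()
        self._seen = set()
        self._per_domain = Counter()

    def add(self, url, depth):
        """Queue `url` found at `depth`; return False if it was rejected."""
        domain = urlparse(url).netloc
        if depth > self.max_depth:
            return False  # too many hops from the seed
        if url in self._seen:
            return False  # duplicate
        if self._per_domain[domain] >= self.max_urls_per_domain:
            return False  # domain quota used up (e.g. endless calendar pages)
        self._seen.add(url)
        self._per_domain[domain] += 1
        self._queue.append((url, depth))
        return True

    def pop(self):
        """Return the next (url, depth) pair in first-in, first-out order."""
        return self._queue.popleft()

    def __len__(self):
        return len(self._queue)


frontier = BoundedFrontier(max_depth=1, max_urls_per_domain=2)
print(frontier.add("https://example.com/", 0))
print(frontier.add("https://example.com/", 0))
```

Links found on a page popped at depth `d` are added with depth `d + 1`, so the depth check bounds how far the crawl can wander from its seeds.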

Why is a developer-first, API-first platform better than DIY?

Building and maintaining your own crawling infrastructure is complex and costly. You have to manage servers, rotating proxies, headless browser farms, and constantly adapt to new anti-bot techniques. An API-first platform like CrawlKit abstracts all this away. You get reliable data via a simple API call, allowing you to focus on building your product instead of managing infrastructure.

How can I start building a web crawler for free?

You can start by writing a simple Python script using libraries like requests and BeautifulSoup. For more advanced needs, platforms like CrawlKit offer a generous free tier that allows you to scrape thousands of pages per month without managing any infrastructure. This is a great way to build a proof-of-concept or power a small project.

Next Steps

- Learn About Raw HTML Extraction: Explore how to get clean HTML data from any URL.

- See Real-World Applications: Discover powerful use cases for web scraping.

- Get Hands-On: Try the CrawlKit Playground for free and see how easy data extraction can be.