Meta Title: How to Create an API From Scratch: A Developer's Guide

Meta Description: Learn how to create an API from the ground up. This step-by-step guide covers API design, security, testing, deployment, and best practices.

Creating an API is one of the most valuable skills for a modern developer, acting as the connective tissue for countless applications. But building a great API—one that's secure, scalable, and easy to use—starts with a solid plan, not a single line of code. This guide walks you through the entire process, from initial blueprint to a production-ready service.

Table of Contents

- Laying the Groundwork for a Bulletproof API

- Building Your Endpoints and Core Logic

- Securing Your API With Modern Authentication

- Enriching Your Data Using Other APIs

- From Testing to Deployment and Beyond

- Common Questions About Creating an API

Laying the Groundwork for a Bulletproof API

Before writing any code, the success of your API depends on a solid blueprint. A well-designed plan is the difference between an intuitive, easy-to-use API and one that causes endless headaches.

First, define the API’s core purpose and audience.

- What problem does it solve? Is it for a mobile app, an internal data processing tool, or a public service for other developers?

- Who is the end user? The needs of an internal team differ greatly from external partners.

- What are the must-have features? Map out the core functionality needed to deliver value and avoid feature creep.

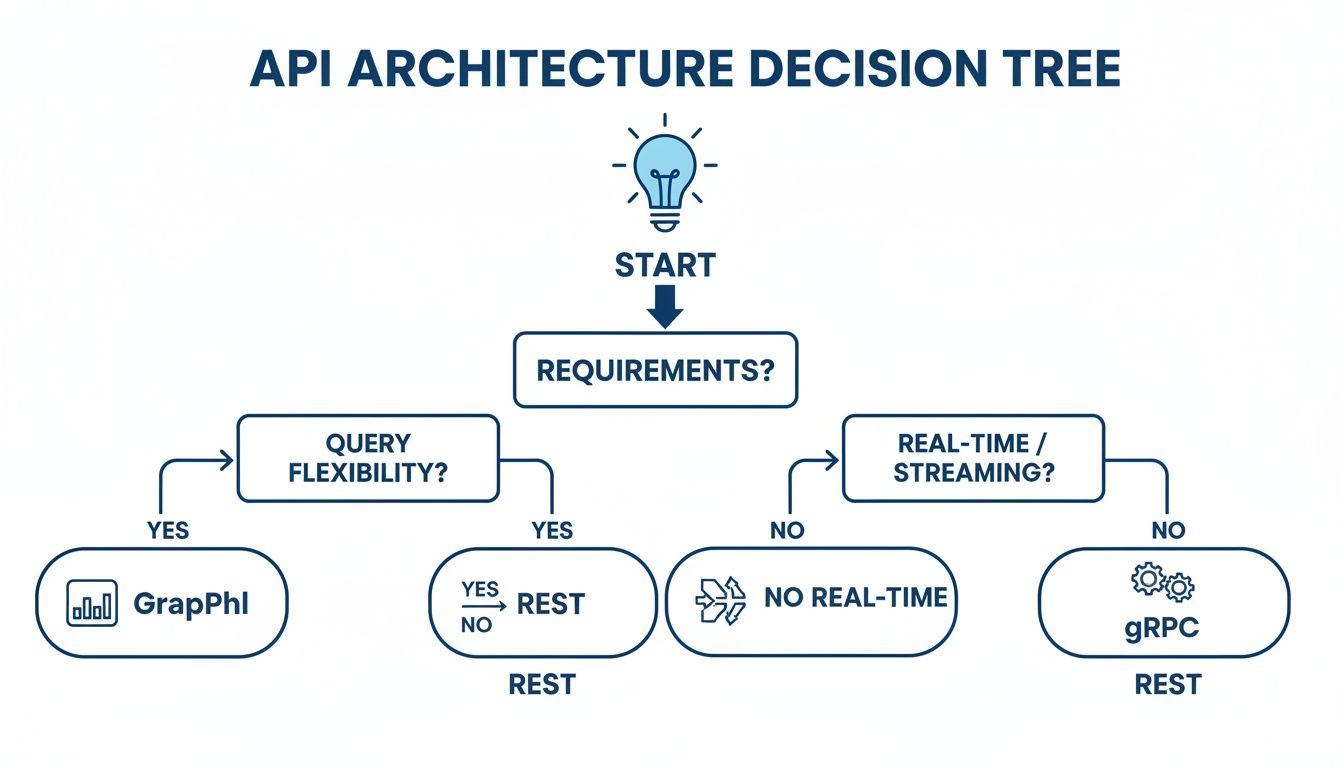

With a clear purpose, the next critical decision is the architectural style. This choice dictates how developers will interact with your API and how it moves data. The three dominant styles are REST, GraphQL, and gRPC.

Caption: This decision tree helps visualize which API architecture best fits your project's needs, from simple CRUD operations to high-performance microservices.

Source: CrawlKit Blog

Choosing Your API Architectural Style

Each style has unique strengths. REST is often the default for standard CRUD operations, GraphQL excels with complex data, and gRPC is built for high-performance internal communication. To learn more, review these crucial API design best practices.

| Feature | REST | GraphQL | gRPC |
|---|---|---|---|
| Data Fetching | Multiple endpoints for different resources | Single endpoint with client-specified queries | Service methods with predefined request/response types |
| Performance | Can lead to over-fetching or under-fetching | Highly efficient; clients get exactly what they ask for | Very high performance with binary serialization (Protobuf) |
| Coupling | Loosely coupled | Decoupled client and server evolution | Tightly coupled through contract-based .proto files |
| Use Case | Public APIs, simple resource models, CRUD operations | Mobile apps, complex frontends, microservices needing flexibility | Internal microservices, streaming, real-time communication |
| Data Format | Typically JSON | JSON | Protocol Buffers (binary) |

There is no single "best" choice—only the best choice for your specific requirements.

Data Modeling and Resource Planning

After choosing an architecture, model your data by identifying the main "nouns" of your system. These become your API's resources. For an e-commerce platform, resources would include users, products, orders, and reviews.

Next, map the relationships between resources. An order belongs to a user and contains multiple products. This conceptual map translates directly into API endpoints, like /users/{userId}/orders.
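As a rough sketch of how that conceptual map might look in code, the snippet below models the e-commerce resources as plain in-memory arrays and derives the data behind /users/{userId}/orders. The field names (`userId`, `productIds`), the sample records, and the `getOrdersForUser` helper are assumptions made for illustration, not a prescribed schema.

```javascript
// Hypothetical in-memory resources for the e-commerce example.
const users = [{ id: 1, name: 'Ada' }, { id: 2, name: 'Linus' }];
const products = [{ id: 10, name: 'Keyboard' }, { id: 11, name: 'Mouse' }];
const orders = [
  { id: 100, userId: 1, productIds: [10, 11] }, // an order belongs to a user...
  { id: 101, userId: 2, productIds: [11] },     // ...and contains products
];

// Data behind GET /users/{userId}/orders.
// Returns null for an unknown user so a route handler could answer 404.
function getOrdersForUser(userId) {
  if (!users.some((user) => user.id === userId)) return null;
  return orders
    .filter((order) => order.userId === userId)
    .map((order) => ({
      id: order.id,
      products: order.productIds.map((pid) => products.find((p) => p.id === pid)),
    }));
}
```

Keeping only identifiers on the order and resolving products at read time mirrors how a relational database would store the relationship.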

Designing these logical connections is fundamental. Much like building efficient data pipelines for analytics and operations, the goal is a clean, predictable information flow that makes the API intuitive for developers.

Building Your Endpoints and Core Logic

With the blueprint ready, it’s time to turn plans into functional code. This involves setting up a server, defining routes, and writing the logic that handles requests and returns data. The goal is to create a solid foundation you can build upon confidently.

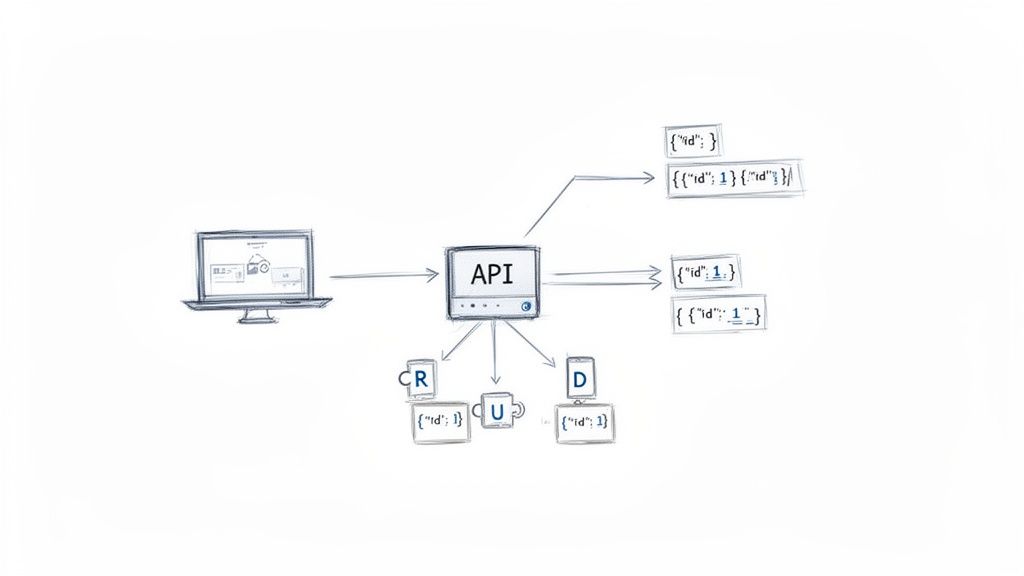

Caption: This diagram shows how an API acts as an intermediary, taking in HTTP requests for actions like creating or reading data and returning structured responses.

Source: CrawlKit Blog

Setting Up Your Development Environment

A web framework handles low-level HTTP routing, letting you focus on your API's logic. Two popular, lightweight choices are Express.js for Node.js and Flask for Python.

For a Node.js project, initialize your project and install Express:

```bash
npm init -y
npm install express
```

For Python, set up a virtual environment and install Flask:

```bash
python -m venv venv
source venv/bin/activate
pip install Flask
```

Implementing CRUD Operations

The core of most RESTful APIs is a set of endpoints for CRUD (Create, Read, Update, Delete) operations. For an API managing blog posts, your endpoints would map to these actions:

- Create: `POST /posts`

- Read: `GET /posts` and `GET /posts/{id}`

- Update: `PUT /posts/{id}`

- Delete: `DELETE /posts/{id}`

Here is a minimal GET endpoint in Node.js and Express that returns a list of posts.

```javascript
const express = require('express');
const app = express();
const port = 3000;

// Simple in-memory array for demonstration
const posts = [
  { id: 1, title: 'My First API Post', content: 'Hello, world!' },
  { id: 2, title: 'API Design Tips', content: 'Keep it simple.' }
];

// Endpoint to return all posts
app.get('/posts', (req, res) => {
  res.json(posts);
});

app.listen(port, () => {
  console.log(`API server listening at http://localhost:${port}`);
});
```

When a client sends a GET request to /posts, the server responds with the posts array as JSON. Handling JSON correctly is critical; for instance, understanding how to convert a Python string to JSON is a fundamental skill for reliable endpoints.
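The remaining CRUD operations could be sketched as plain functions over the same kind of in-memory array. This is a minimal illustration rather than a complete implementation: the function names (`createPost`, `getPost`, `updatePost`, `deletePost`) and the `nextId` counter are assumptions, and a real service would use a database.

```javascript
// In-memory store, mirroring the posts array from the example above.
const posts = [
  { id: 1, title: 'My First API Post', content: 'Hello, world!' },
  { id: 2, title: 'API Design Tips', content: 'Keep it simple.' },
];
let nextId = 3; // hypothetical id counter

// Logic for POST /posts
function createPost({ title, content }) {
  const post = { id: nextId++, title, content };
  posts.push(post);
  return post;
}

// Logic for GET /posts/{id}; null signals "not found"
function getPost(id) {
  return posts.find((post) => post.id === id) ?? null;
}

// Logic for PUT /posts/{id}: replaces title and content, keeps the id
function updatePost(id, { title, content }) {
  const post = getPost(id);
  if (!post) return null;
  post.title = title;
  post.content = content;
  return post;
}

// Logic for DELETE /posts/{id}; returns whether anything was removed
function deletePost(id) {
  const index = posts.findIndex((post) => post.id === id);
  if (index === -1) return false;
  posts.splice(index, 1);
  return true;
}
```

In Express, a handler such as `app.post('/posts', ...)` could call `createPost(req.body)` once the `express.json()` middleware is enabled, then respond with status 201. A `null` or `false` result maps naturally to a 404 response.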

Validating Requests and Handling Errors

Always assume incoming client data is untrustworthy. Request validation ensures that data sent to your API meets requirements before you process it. If a user tries to create a post without a title, your API should reject the request with a clear error message.

When errors occur, respond with appropriate HTTP status codes:

- 400 Bad Request: For invalid client input, like a missing field.

- 404 Not Found: When a requested resource does not exist.

- 500 Internal Server Error: For unexpected server-side problems.

Clear validation and error handling are fundamental to building a trustworthy, developer-friendly API.
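To make the missing-title case concrete, here is one possible validation helper. It is a sketch under assumptions: the name `validateNewPost`, the result shape (`ok`, `status`, `errors`, `value`), and the rule that `content` is optional are all illustrative choices.

```javascript
// Validates the body of a "create post" request.
// Returns { ok: true, value } on success or { ok: false, status, errors } on failure.
function validateNewPost(payload) {
  if (payload === null || typeof payload !== 'object' || Array.isArray(payload)) {
    return { ok: false, status: 400, errors: ['Request body must be a JSON object.'] };
  }

  const errors = [];
  if (typeof payload.title !== 'string' || payload.title.trim() === '') {
    errors.push('title is required and must be a non-empty string.');
  }
  if (payload.content !== undefined && typeof payload.content !== 'string') {
    errors.push('content must be a string when provided.');
  }

  if (errors.length > 0) {
    return { ok: false, status: 400, errors }; // 400 Bad Request: invalid client input
  }
  return { ok: true, value: { title: payload.title.trim(), content: payload.content ?? '' } };
}
```

A route handler could return `result.status` together with `result.errors` when `ok` is false, so the client sees every problem in a single response instead of fixing them one at a time.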

Securing Your API With Modern Authentication

Building an API without security is like leaving your front door unlocked. Security is a non-negotiable requirement for protecting your users, data, and service. Modern authentication and authorization are the bedrock of any trustworthy API.

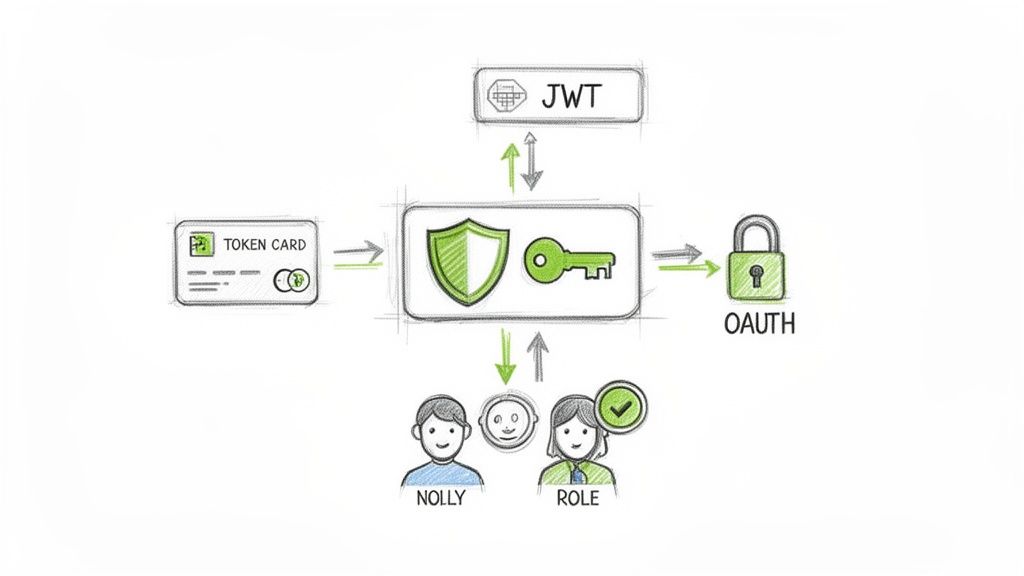

Caption: This flow chart visualizes how a user's request is validated through authentication (who they are) and authorization (what they can do) before granting access.

Source: CrawlKit Blog

Every request should pass two gates: authentication confirms who is making the request, and authorization determines what they are allowed to do.

Choosing the Right Authentication Strategy

Authentication verifies a user's identity. Common methods include:

- API Keys: A unique key is included in request headers. This is a great fit for server-to-server communication or simple internal tools.

- OAuth 2.0: The industry standard for delegated authorization. It lets users grant a third-party app limited access to their data without sharing their password. Sign-in flows such as "Log in with Google" build on it through OpenID Connect, which adds an identity layer on top of OAuth 2.0.

- JSON Web Tokens (JWT): A compact, self-contained format for transmitting signed claims. After login, the server issues a signed token that the client includes in future requests, allowing for stateless identity verification. A standard JWT is signed but not encrypted, so its payload should not carry secrets.

For a complete overview, explore these top API security best practices.
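To show what "signed token" means in practice, the sketch below issues and verifies an HS256 JWT using only Node's built-in `crypto` module. It is meant for understanding the mechanics; the helper names (`issueToken`, `verifyToken`) and the one-hour default lifetime are assumptions, and production code would normally rely on a maintained JWT library instead.

```javascript
const crypto = require('node:crypto');

// Base64url-encode a JSON-serializable value.
const encode = (value) => Buffer.from(JSON.stringify(value)).toString('base64url');

// Sign "header.payload" with HMAC-SHA256 (the HS256 algorithm).
const sign = (data, secret) =>
  crypto.createHmac('sha256', secret).update(data).digest('base64url');

function issueToken(claims, secret, ttlSeconds = 3600) {
  const now = Math.floor(Date.now() / 1000);
  const header = encode({ alg: 'HS256', typ: 'JWT' });
  const payload = encode({ ...claims, iat: now, exp: now + ttlSeconds });
  return `${header}.${payload}.${sign(`${header}.${payload}`, secret)}`;
}

// Returns the claims when the signature is valid and the token is unexpired, else null.
function verifyToken(token, secret) {
  const parts = String(token).split('.');
  if (parts.length !== 3) return null;
  const [header, payload, signature] = parts;

  const expected = Buffer.from(sign(`${header}.${payload}`, secret));
  const received = Buffer.from(signature);
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  if (expected.length !== received.length || !crypto.timingSafeEqual(expected, received)) {
    return null;
  }

  try {
    const claims = JSON.parse(Buffer.from(payload, 'base64url').toString('utf8'));
    return claims.exp > Math.floor(Date.now() / 1000) ? claims : null;
  } catch {
    return null; // payload was not valid JSON
  }
}
```

Because the server can recompute the signature from the token itself, it does not need to store session state. The verifier deliberately ignores the `alg` value in the header and always checks HS256, which avoids trusting attacker-controlled input.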

From Authentication to Authorization

Once a user is authenticated, authorization determines their permissions. Role-Based Access Control (RBAC) is an effective way to manage this. You define roles—like admin, editor, or viewer—and assign specific permissions to each.

For example, an admin may have full CRUD access, while a viewer can only read data. This approach simplifies permission management. You can see a real-world example in how CrawlKit handles its own authentication and authorization for API access.
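A role-to-permission lookup of the kind described here might look like the following. The specific roles and actions, and the helper names `can` and `authorize`, are illustrative assumptions rather than a fixed scheme.

```javascript
// Hypothetical role-to-permission map for a blog post API.
const PERMISSIONS = new Map([
  ['admin', ['create', 'read', 'update', 'delete']],
  ['editor', ['create', 'read', 'update']],
  ['viewer', ['read']],
]);

// True only when the role exists and explicitly lists the action (deny by default).
function can(role, action) {
  return (PERMISSIONS.get(role) ?? []).includes(action);
}

// Maps an (optionally authenticated) user and an action to an HTTP status code.
function authorize(user, action) {
  if (!user) return 401;                     // not authenticated
  return can(user.role, action) ? 200 : 403; // authenticated, but possibly not permitted
}
```

Denying by default means a typo in a role name or a newly added action fails closed instead of silently granting access.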

Essential Security Layers

A secure API needs additional layers of defense:

- Rate Limiting: Set a cap on how many requests a client can make in a given time period (e.g., 100 requests/minute) to slow brute-force attacks and limit abuse.

- HTTPS Everywhere: Always use HTTPS to encrypt data in transit. Sending sensitive information like API keys or user data over unencrypted HTTP is a major security risk.
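The rate-limiting idea from the list above can be sketched as a fixed-window counter. This is a single-process illustration under assumptions: the `createRateLimiter` name, its options, and the injectable `now` clock (included so the logic can be tested) are all hypothetical, and a multi-server deployment would need a shared store.

```javascript
// Fixed-window counter: at most `limit` requests per client per `windowMs`.
function createRateLimiter({ limit = 100, windowMs = 60_000, now = Date.now } = {}) {
  const windows = new Map(); // clientId -> { start, count }

  return function isAllowed(clientId) {
    const time = now();
    const current = windows.get(clientId);
    if (!current || time - current.start >= windowMs) {
      windows.set(clientId, { start: time, count: 1 }); // first request of a new window
      return true;
    }
    current.count += 1;
    return current.count <= limit;
  };
}
```

When `isAllowed` returns false, the conventional response is `429 Too Many Requests`. The client identifier could be an API key or an IP address, depending on how the API authenticates callers.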

Enriching Your Data Using Other APIs

An API becomes truly powerful when it connects with other services to provide richer data. Instead of only serving static data from your database, you can call external APIs to offer dynamic, real-time information without having to maintain all the data yourself.

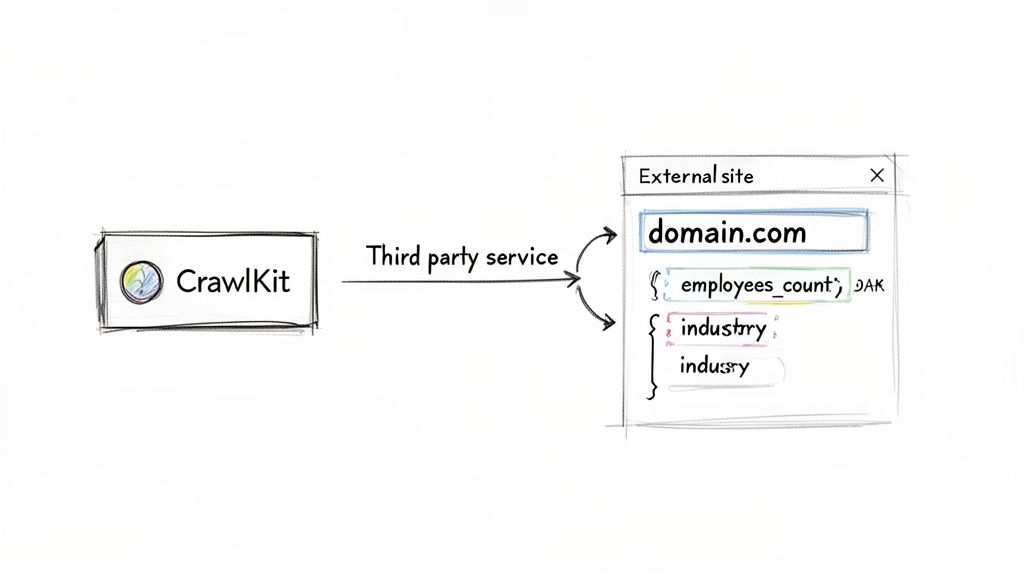

A Practical Example: Company Data Enrichment

Imagine you're building an endpoint to provide company profiles. Your database might only contain a company's domain, but users want more: employee count, industry, and location.

Instead of building a complex web scraping system, a smarter approach is to use a specialized third-party API. CrawlKit is a developer-first, API-first web data platform designed for this. It handles all the scraping infrastructure, proxies, and anti-bot measures, turning a difficult engineering problem into a single API call. You can start free, with no infrastructure to manage.

Integrating a Third-Party API

Let's build an endpoint: /enrich-company?domain=crawlkit.sh. It will take a domain, use CrawlKit to fetch LinkedIn profile data, and return key details.

First, you can test the CrawlKit API with a simple cURL command.

```bash
curl "https://api.crawlkit.sh/v1/extract/linkedin/company" \
  -H "Authorization: Bearer YOUR_CRAWLKIT_API_KEY" \
  -d '{ "url": "https://www.linkedin.com/company/crawlkit/" }'
```

This abstracts away complex infrastructure, a model that mirrors trends in major industries. For example, the US Active Pharmaceutical Ingredient market is expected to hit USD 83.16 billion by 2035, driven by companies relying on specialized suppliers, much like the modern API economy. You can read more in the full market report.

A successful request returns clean, structured JSON.

Caption: A sample JSON response from the CrawlKit API, providing structured data like employee count and industry, ready for integration.

Source: CrawlKit Documentation

Serving the Enriched Data From Your API

The final step is to parse this JSON, select the fields you want to expose, and serve it from your own API endpoint. This is a classic example of effective API-driven dataset enrichment, where you combine your data with external sources to create a more valuable product.
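One way to structure that final step is sketched below: call the upstream API, then expose only a chosen subset of fields. The endpoint URL and Bearer header come from the cURL example; everything else is an assumption, including the response field names (`name`, `industry`, `employeeCount`, `location`), the JSON `Content-Type` header, and the helper names. Check the CrawlKit documentation for the actual response shape.

```javascript
const CRAWLKIT_URL = 'https://api.crawlkit.sh/v1/extract/linkedin/company';

// Keep only the fields this API chooses to expose (field names are hypothetical).
function pickCompanyFields(raw) {
  const { name = null, industry = null, employeeCount = null, location = null } = raw ?? {};
  return { name, industry, employeeCount, location };
}

// `fetchImpl` is injectable so the function can be exercised without network access.
async function enrichCompany(linkedinUrl, apiKey, fetchImpl = fetch) {
  const response = await fetchImpl(CRAWLKIT_URL, {
    method: 'POST',
    headers: {
      Authorization: `Bearer ${apiKey}`,
      'Content-Type': 'application/json', // assumption: the API accepts a JSON body
    },
    body: JSON.stringify({ url: linkedinUrl }),
  });
  if (!response.ok) {
    throw new Error(`Upstream request failed with status ${response.status}`);
  }
  return pickCompanyFields(await response.json());
}
```

Selecting fields explicitly keeps your own response contract stable even if the upstream payload gains or renames properties. Your /enrich-company handler could translate a thrown error into a 502 response so clients can tell upstream failures from their own mistakes.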

You can start exploring these workflows by experimenting with the CrawlKit Playground.

From Testing to Deployment and Beyond

Shipping a dependable API requires rigorous testing, a smooth deployment process, and automation to tie it all together. This phase builds confidence that your API works, can handle real-world traffic, and won't break with future updates.

A Layered Testing Strategy

A robust API is built on a layered testing foundation to catch bugs at every level.

- Unit Tests: Small, fast tests that verify individual functions or components work in isolation.

- Integration Tests: Check that different parts of your system work together correctly, such as an endpoint triggering a database query.

- End-to-End (E2E) Tests: Simulate a complete user workflow from start to finish to ensure the entire system is cohesive.
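As an example of the unit-test layer, the snippet below tests a small pure function with Node's built-in test runner (`node:test`, available in recent Node.js releases). The `slugify` helper is a hypothetical function invented for this illustration.

```javascript
const { test } = require('node:test');
const { strictEqual } = require('node:assert/strict');

// Function under test: builds a URL-friendly slug from a post title.
function slugify(title) {
  return title
    .toLowerCase()
    .trim()
    .replace(/[^a-z0-9]+/g, '-') // collapse each non-alphanumeric run into one dash
    .replace(/^-+|-+$/g, '');    // strip leading and trailing dashes
}

test('slugify lowercases and joins words with dashes', () => {
  strictEqual(slugify('My First API Post'), 'my-first-api-post');
});

test('slugify strips punctuation and surrounding whitespace', () => {
  strictEqual(slugify('  Hello, world!  '), 'hello-world');
});
```

Saved as a file, this can be run with `node --test`. Integration and E2E tests follow the same pattern but exercise a running server and its dependencies rather than a single function.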

Modern Deployment and Automation

Modern best practices rely on containerization and automation for repeatable, reliable deployments.

Containerization with Docker packages your application and its dependencies into a single, portable container, eliminating "it worked on my machine" issues.

An orchestrator like Kubernetes manages your containers at scale, handling load balancing, auto-scaling, and self-healing.

Caption: This example CI/CD pipeline from GitHub Actions illustrates how every code change automatically triggers jobs for building, testing, and deploying, ensuring code quality.

Source: GitHub Actions

Building a CI/CD Pipeline

Continuous Integration and Continuous Deployment (CI/CD) automates your entire release process. A CI/CD pipeline acts as your API's automated quality control, enforcing standards and turning deployment into a low-risk, repeatable event.

Here is a typical workflow:

- A developer pushes code to a Git repository.

- A CI server detects the change, builds the app, and runs all tests.

- If tests pass, the app is packaged into a Docker container.

- The new container is pushed to a registry.

- The CD system deploys the updated container to production.

Automating this flow is fundamental to shipping better software faster. You can explore how automated data extraction fits into modern workflows by using the CrawlKit API Playground.

Common Questions About Creating an API

As you build, several common questions will arise. Addressing them early on will save you from major headaches later.

What is the difference between REST and GraphQL?

REST is an architectural style that treats everything as a resource, using standard HTTP methods (GET, POST, PUT, PATCH, DELETE). It is predictable and caches well but can lead to over-fetching (too much data) or under-fetching (requiring multiple calls).

GraphQL is a query language for APIs that lets the client request exactly the data it needs in a single call, solving the fetch-inefficiency problem.

- Use REST for: Simpler, resource-driven APIs and public services where web standards and caching are priorities.

- Use GraphQL for: Complex applications with interconnected data, especially mobile apps where bandwidth is a concern.

How do I choose the right HTTP status codes?

Proper status codes provide standardized feedback. Use them correctly to build a professional API.

- 2xx (Success): `200 OK` (successful request), `201 Created` (new resource created), `204 No Content` (success with no body to return).

- 4xx (Client Error): `400 Bad Request` (invalid input), `401 Unauthorized` (authentication required), `403 Forbidden` (authenticated but no permission), `404 Not Found` (resource does not exist).

- 5xx (Server Error): `500 Internal Server Error` (unexpected server problem).

Always include a clear, human-readable error message in the response body.

What is API versioning and why is it important?

Your API will inevitably change. Versioning allows you to introduce breaking changes without disrupting existing users. By creating a new version (e.g., /v2/users), you let clients continue using the stable /v1/ while they migrate. This provides a predictable upgrade path. Common strategies include versioning in the URL path, as a query parameter, or in a request header.
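Here is a small sketch of how a server might resolve the requested version from the URL path or a header. The `x-api-version` header name, the `handlers` map, and the rename of `name` to `fullName` in v2 are all hypothetical examples of a breaking change.

```javascript
// Hypothetical serializers: v2 renames `name` to `fullName`, a breaking change.
const handlers = {
  v1: (user) => ({ id: user.id, name: user.name }),
  v2: (user) => ({ id: user.id, fullName: user.name }),
};

// Resolve the version from the URL path first, then from a request header.
// Defaults to v1 when no version is given; returns null for an unknown version.
function resolveVersion(path, headers = {}) {
  const match = /^\/(v\d+)(\/|$)/.exec(path);
  const requested = match ? match[1] : (headers['x-api-version'] ?? 'v1');
  return Object.hasOwn(handlers, requested) ? requested : null;
}
```

With this in place, `handlers[resolveVersion(path, headers)]` selects the serializer for a request, and a `null` result can be turned into a 404 or 400 rather than silently falling back to another version.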

What is the best way to document an API?

An undocumented API is a useless one. The industry standard is the OpenAPI Specification (formerly Swagger). Writing a single OpenAPI file (in YAML or JSON) allows you to:

- Define every endpoint, parameter, and response.

- Automatically generate interactive documentation with tools like Swagger UI or ReDoc.

- Generate client SDKs in multiple languages.

Should I build a monolith or use microservices?

A monolith is a single, all-in-one application. It is simpler to build, test, and deploy, making it ideal for startups, small teams, and MVPs.

Microservices break an application into small, independent services. This offers great scalability but introduces significant operational complexity. A common strategy is to start with a well-structured monolith and peel off services into microservices as the application and team grow.

How can I improve my API's performance?

A slow API can cripple user experience. High-impact improvements include:

- Caching: Use an in-memory cache like Redis for data that doesn't change often to avoid database hits.

- Database Optimization: Add indexes to frequently queried columns and analyze query performance.

- Pagination: Never return an entire database table in one response. Paginate lists to keep responses small and predictable.

- Asynchronous Jobs: For slow tasks like sending emails, return a `202 Accepted` response immediately and offload the work to a background job queue like RabbitMQ.
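Of the techniques above, pagination is the easiest to show in a few lines. The following is a sketch of page-based pagination over an in-memory array; the parameter names (`page`, `pageSize`, `maxPageSize`) and the shape of the `meta` object are assumptions, and a database-backed API would apply the same limits in its query instead.

```javascript
// Parse a positive integer from user input (e.g. a query string), else use a fallback.
const toPositiveInt = (value, fallback) => {
  const n = Number.parseInt(value, 10);
  return Number.isInteger(n) && n > 0 ? n : fallback;
};

// Page-based pagination with a capped page size.
function paginate(items, { page, pageSize, maxPageSize = 100 } = {}) {
  const size = Math.min(toPositiveInt(pageSize, 20), maxPageSize);
  const totalPages = Math.max(1, Math.ceil(items.length / size));
  const current = Math.min(toPositiveInt(page, 1), totalPages); // clamp past-the-end pages
  const start = (current - 1) * size;
  return {
    data: items.slice(start, start + size),
    meta: { page: current, pageSize: size, totalItems: items.length, totalPages },
  };
}
```

Capping `pageSize` on the server matters as much as paginating at all, since an uncapped value would let a client request the whole table in one call.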

What's the difference between authentication and authorization?

Authentication is the process of verifying who a user is (e.g., via a password, API key, or token). It answers the question, "Are you who you say you are?"

Authorization is the process of determining what an authenticated user is allowed to do. It answers the question, "Do you have permission to access this resource?"

How do I handle rate limiting?

Rate limiting is crucial for preventing abuse and ensuring your API remains available for all users. It restricts the number of requests a client can make in a given time period. You can implement it using algorithms like the token bucket or leaky bucket, often with the help of an in-memory store like Redis to track request counts per user or IP address.
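The token bucket algorithm mentioned above can be sketched in a few lines. This version keeps state in process memory for a single bucket; the `createTokenBucket` name, its options, and the injectable `now` clock are assumptions for illustration. A production setup would typically keep one bucket per user or IP address in a shared store such as Redis.

```javascript
// Token bucket: holds up to `capacity` tokens and refills at `refillPerSecond`.
// Each request consumes one token; bursts are allowed until the bucket is empty.
function createTokenBucket({ capacity = 10, refillPerSecond = 1, now = Date.now } = {}) {
  let tokens = capacity;
  let lastRefill = now();

  return function tryConsume() {
    const time = now();
    const elapsedSeconds = (time - lastRefill) / 1000;
    tokens = Math.min(capacity, tokens + elapsedSeconds * refillPerSecond);
    lastRefill = time;
    if (tokens < 1) return false; // bucket empty: reject the request
    tokens -= 1;
    return true;
  };
}
```

Compared with a simple per-minute counter, a token bucket tolerates short bursts up to `capacity` while still enforcing the average rate set by `refillPerSecond`.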

Building powerful API features often involves integrating with external data sources. With CrawlKit, you can enrich your own data with fresh information from any website without managing scraping infrastructure. The developer-first, API-first platform abstracts away proxies and anti-bot challenges, letting you focus on your core logic. Try the Playground for free to see how easy it is to turn any web page into structured JSON.

Next steps

Now that you have a solid understanding of the entire API lifecycle, you can start applying these principles to your own projects.