Meta Title: How to Build Data Pipelines: A Modern Developer's Guide

Meta Description: Learn how to build data pipelines with our step-by-step guide. We cover web data ingestion, transformation, storage, and orchestration for modern engineers.

Learning how to build data pipelines is essential for turning raw, messy information into a strategic asset. A data pipeline is an automated system that ingests raw data, transforms it into a useful format, and loads it into a destination for analysis or model training. This guide provides a developer-focused blueprint for building resilient, scalable systems that power modern applications.

Table of Contents

- What Are the Core Stages of a Data Pipeline?

- Step 1: Mastering Web Data Ingestion

- Step 2: Transforming Raw Data into Actionable Insights

- Step 3: Choosing the Right Data Storage

- Step 4: Orchestrating and Monitoring for Reliability

- Frequently Asked Questions

- Next Steps

What Are the Core Stages of a Data Pipeline?

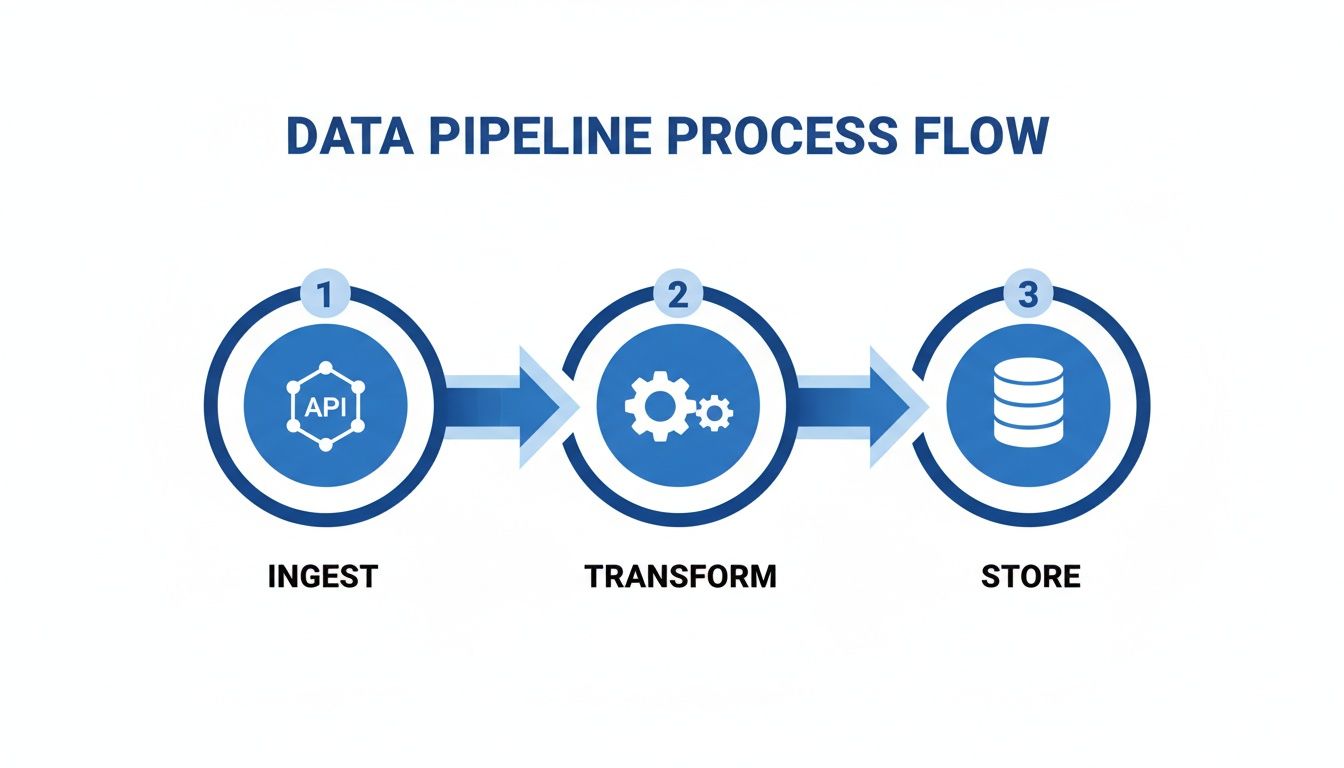

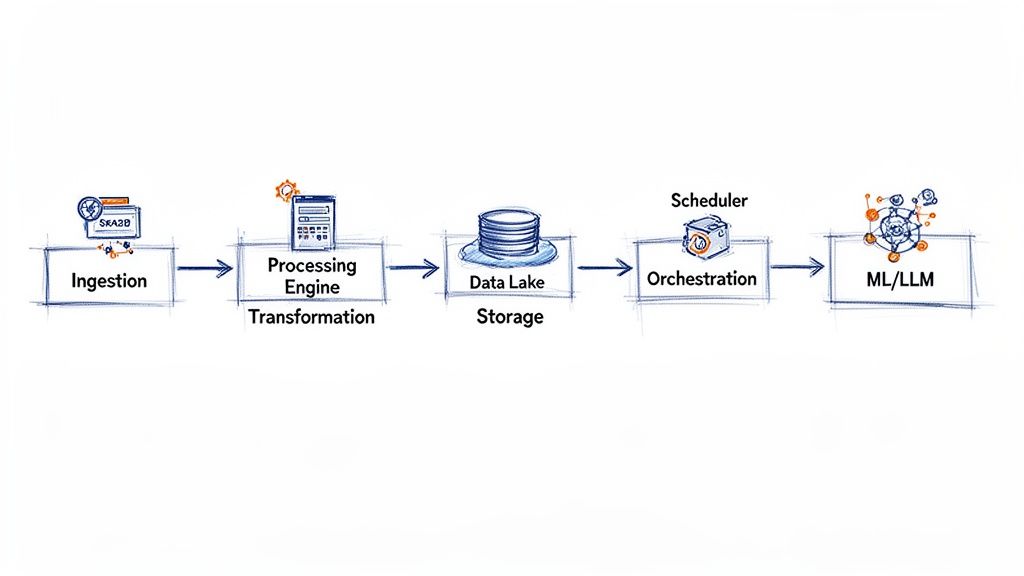

Before writing a single line of code, make sure you understand the foundational stages and put solid data governance practices in place so that data integrity is protected from the start. A modern pipeline follows three fundamental steps: Ingestion, Transformation, and Storage, with orchestration automating the flow between them. This simple flow provides a clear mental map of how raw inputs become valuable outputs.

Caption: The three core stages of a data pipeline: Ingest, Transform, and Store. Source: Outrank.

The demand for these skills has exploded. The global data pipeline market is projected to grow from $10.01 billion in 2024 to an estimated $43.61 billion by 2032. This reflects the urgent need for engineers who can build reliable systems that feed clean data to downstream applications.

The key components we'll cover are:

- Ingestion: Getting data from various sources, particularly complex ones like public websites.

- Transformation: The art of cleaning, validating, and structuring raw data.

- Storage: Choosing the right data lake or warehouse for your specific needs.

- Orchestration: Automating and monitoring the entire workflow for reliability.

For many teams, the first stage—ingestion—is the most complex, especially when dealing with unpredictable web data. This is where a developer-first tool like CrawlKit becomes essential. As an API-first web data platform, it abstracts away the entire scraping infrastructure, so you can start free and collect data with a simple API call.

Step 1: Mastering Web Data Ingestion

Getting data into your system is the first, and often trickiest, hurdle. When figuring out how to build data pipelines for web data, you immediately face anti-bot systems, dynamic JavaScript sites, and messy HTML. The success of every downstream step hinges on getting this initial ingestion phase right.

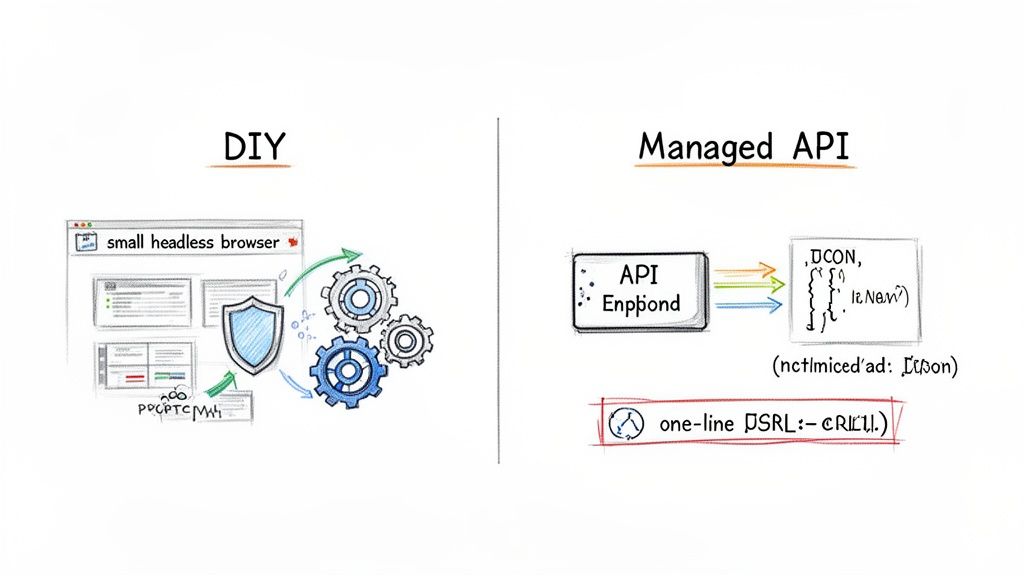

You have a fundamental choice: build your own web scraping infrastructure or use a managed, API-first solution. The DIY path is a constant, uphill battle.

The Hidden Costs of DIY Scraping

Building your own scraping infrastructure is a massive engineering commitment that distracts from your core product. You’re signing up for:

- Proxy Management: Sourcing, vetting, and managing a massive, rotating pool of residential and datacenter proxies to avoid IP blocks.

- CAPTCHA Solving: Integrating with CAPTCHA-solving services to bypass protections from providers like Cloudflare and Akamai.

- Headless Browser Maintenance: Running a fleet of resource-intensive headless browsers (driven by tools like Puppeteer or Playwright) to render modern JavaScript-heavy websites.

This process often feels like you're fighting the web just to get the data you need.

Caption: A clean data source at the start of the pipeline is non-negotiable for downstream success. Source: Outrank.

The API-First Approach to Ingestion

A saner path is using a developer-first web data platform. This approach abstracts away the infrastructure headache. Instead of wrestling with proxies, you make a simple API call.

CrawlKit was designed for exactly this. As an API-first platform, it handles proxies, anti-bot systems, and browser rendering for you. You specify the URL and the data you need, and it returns clean, structured JSON.

Here's how easily you can get structured data from a target site with a simple cURL command:

```bash
curl -X POST "https://api.crawlkit.sh/v1/extract" \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "url": "https://example.com/products/123",
    "fields": {
      "title": {"selector": "h1"},
      "price": {"selector": ".product-price"}
    }
  }'
```

This single API call replaces thousands of lines of code and countless hours of infrastructure maintenance. By starting with clean JSON, you simplify later transformation stages and let your team focus on deriving value from data, not just fighting to collect it.
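If your pipeline is written in Python, the same request can be built with the standard library. This is a minimal sketch: the endpoint, headers, and payload shape are copied from the cURL example, while the function names `build_extract_request` and `extract` are hypothetical helpers, and nothing is assumed about the shape of the response beyond it being JSON.

```python
import json
import urllib.request

# Endpoint and payload shape are copied from the cURL example above.
EXTRACT_URL = "https://api.crawlkit.sh/v1/extract"


def build_extract_request(api_key, page_url, fields):
    """Build (but do not send) the POST request for an extraction job."""
    payload = {"url": page_url, "fields": fields}
    return urllib.request.Request(
        EXTRACT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract(api_key, page_url, fields, timeout=30):
    """Send the request and decode the JSON body (response schema is not assumed)."""
    request = build_extract_request(api_key, page_url, fields)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Building the request does not touch the network, so it is easy to unit test.
request = build_extract_request(
    "YOUR_API_KEY",
    "https://example.com/products/123",
    {"title": {"selector": "h1"}, "price": {"selector": ".product-price"}},
)
print(request.get_method(), request.full_url)
```

Separating request construction from sending keeps the network call in one small function, which makes it easier to mock in tests or wrap with retries later.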

Explore the CrawlKit Playground to start free and see how it works, or check out our comprehensive guide on how to web scrape with Python.

Step 2: Transforming Raw Data into Actionable Insights

Raw web data is rarely useful out of the box. The transformation stage is where you forge messy inputs into the clean, reliable fuel that powers your analytics and machine learning models. Starting with structured JSON from an API-first service like CrawlKit gives you a massive head start.

The Core Tasks of Data Transformation

Transformation involves a series of operations to improve data quality and consistency.

- Schema Validation: Ensure incoming data matches the expected structure. Does the `price` field exist? Is `review_count` a number? Catching issues early prevents bad data from poisoning downstream systems.

- Data Type Casting: Convert data to the correct format. Prices like `"$99.99"` should become numeric types, and dates should be standardized to a consistent format like ISO 8601.

- Deduplication: Remove duplicate records, which are common when scraping product listings or search results across multiple pages.

- Enrichment: Increase the value of your data by adding information from other sources, like using a geolocation service to add coordinates based on a scraped address.
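To make the first and third tasks concrete, here is a small sketch of schema validation and deduplication in plain Python. The schema, the sample records, and the choice of `url` as the deduplication key are all assumptions for illustration; a production pipeline would more likely use a dedicated validation library.

```python
# Hypothetical schema: field name -> expected Python type.
EXPECTED_SCHEMA = {"url": str, "title": str, "price": str, "review_count": int}


def validate_record(record, schema=EXPECTED_SCHEMA):
    """Return a list of problems; an empty list means the record is valid."""
    problems = []
    for field, expected_type in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems


def deduplicate(records, key="url"):
    """Keep the first record seen for each key value."""
    seen = set()
    unique = []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique


scraped = [
    {"url": "https://example.com/p/1", "title": "Widget", "price": "$99.99", "review_count": 12},
    {"url": "https://example.com/p/1", "title": "Widget", "price": "$99.99", "review_count": 12},
    {"url": "https://example.com/p/2", "title": "Gadget", "review_count": "n/a"},
]

valid = [r for r in scraped if not validate_record(r)]
rejected = [r for r in scraped if validate_record(r)]
clean = deduplicate(valid)
print(len(clean), "clean,", len(rejected), "rejected")
```

Rejected records are kept in their own list rather than dropped silently, so you can log them or route them to a quarantine table for inspection.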

Caption: Starting with a managed API lets you focus on high-value transformation instead of basic parsing. Source: Outrank.

Practical Data Cleaning with Python and Pandas

Python's pandas library is a de facto standard for data wrangling. Here’s a quick Python function for cleaning a messy price field and casting a count to the correct data type. If you need a refresher, check out our guide on Python strings and JSON.

```python
import re

import pandas as pd


def parse_price(value):
    """Parse a price like "$1,299.99" into a float; return 0.0 if no number is found."""
    match = re.search(r'\d*\.?\d+', str(value).replace(',', ''))
    return float(match.group()) if match else 0.0


def clean_product_data(df):
    """Cleans price and converts sold_count to a numeric type."""
    # Strip currency symbols and commas, then convert to float.
    # Missing or non-numeric prices (None, NaN, "N/A") become 0.0 instead of raising.
    df['price'] = df['price'].apply(parse_price)
    # Convert sold_count to integer, coercing non-numeric values to 0
    df['sold_count'] = pd.to_numeric(df['sold_count'], errors='coerce').fillna(0).astype(int)
    return df
```

This snippet programmatically turns messy, real-world data into a clean, standardized format ready for analysis.
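The cleaning function covers prices and counts; the date standardization mentioned earlier can be handled in a similar way. Below is a minimal sketch using only the standard library. The list of input formats is hypothetical, so replace it with the formats your sources actually emit, and note that an ambiguous value like `03/05/2024` is read here as month/day/year.

```python
from datetime import datetime

# Hypothetical formats; list the ones your sources actually emit.
KNOWN_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y", "%B %d, %Y")


def to_iso_date(raw):
    """Return an ISO 8601 date string, or None if no known format matches."""
    text = str(raw).strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


for sample in ["2024-03-05", "03/05/2024", "5 Mar 2024", "March 5, 2024", "yesterday"]:
    print(sample, "->", to_iso_date(sample))
```

Returning `None` for unrecognized values, rather than guessing, lets the validation step decide whether to reject the record or flag it for review.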

ETL vs. ELT: Choosing the Right Approach

A key architectural decision is when to perform transformations.

- ETL (Extract, Transform, Load): The traditional model. You extract data, transform it on a separate processing server, and then load the clean data into your warehouse.

- ELT (Extract, Load, Transform): The modern, cloud-native approach. You extract raw data and load it directly into a powerful cloud data warehouse like Snowflake or Google BigQuery. All transformation logic is then executed inside the warehouse.

The rise of ELT is tied to the compute power of modern data warehouses. Tools like dbt (Data Build Tool) have become a cornerstone of the modern data stack, allowing you to run transformation logic using simple SQL SELECT statements.

How to choose?

- Go with ETL if transformations are computationally expensive or if you have strict compliance requirements to clean sensitive data before it lands in your warehouse.

- Choose ELT for most modern use cases. It’s more flexible, scales better, and gives analysts direct access to the raw data for exploration.
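The ELT pattern can be sketched end to end with Python's built-in `sqlite3` module. An in-memory SQLite database stands in for a cloud warehouse here, and the table names and sample rows are made up for illustration. The point is the ordering: raw rows are loaded untouched, and the cleanup happens afterwards as a SQL `SELECT` inside the database.

```python
import sqlite3

# In-memory SQLite stands in for a cloud warehouse in this sketch.
conn = sqlite3.connect(":memory:")

# Load: land the raw rows untouched, with every column stored as text.
conn.execute("CREATE TABLE raw_products (url TEXT, title TEXT, price TEXT)")
conn.executemany(
    "INSERT INTO raw_products VALUES (?, ?, ?)",
    [
        ("https://example.com/p/1", " Widget ", "$1,299.99"),
        ("https://example.com/p/2", "Gadget", "$49.50"),
        ("https://example.com/p/2", "Gadget", "$49.50"),  # duplicate row
    ],
)

# Transform: a SELECT builds the clean table inside the database itself.
conn.execute(
    """
    CREATE TABLE products AS
    SELECT DISTINCT
        url,
        TRIM(title) AS title,
        CAST(REPLACE(REPLACE(price, '$', ''), ',', '') AS REAL) AS price
    FROM raw_products
    """
)

rows = conn.execute("SELECT url, title, price FROM products ORDER BY url").fetchall()
for row in rows:
    print(row)
```

Because `raw_products` is never modified, analysts can still query the original values, and the transformation can be rewritten and re-run without re-extracting anything.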

Step 3: Choosing the Right Data Storage

Once transformed, your data needs a place to live. This choice dictates your pipeline’s speed, scalability, and the types of analysis you can perform.

Data Lakes vs. Data Warehouses

A data lake, typically built on object storage like Amazon S3 or Google Cloud Storage, is a centralized repository for vast volumes of raw data in its native format. Its flexibility makes it a perfect landing zone for the "load" step in an ELT workflow.

A data warehouse is a highly optimized database designed for fast querying and business intelligence. Platforms like Snowflake, Google BigQuery, and Amazon Redshift excel at handling clean, structured data for reporting and dashboards.
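As a rough illustration of what "landing raw data in a lake" looks like in practice, the sketch below writes scraped records as JSON Lines under a date-partitioned path. A temporary local directory stands in for an object storage bucket, and the `source=` / `dt=` folder layout is one common convention rather than a requirement; the function name and file name are hypothetical.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land_raw_records(lake_root, source, records, run_date):
    """Append raw records as JSON Lines under a date-partitioned prefix."""
    partition = Path(lake_root) / "raw" / f"source={source}" / f"dt={run_date.isoformat()}"
    partition.mkdir(parents=True, exist_ok=True)
    target = partition / "part-0000.jsonl"
    with target.open("a", encoding="utf-8") as handle:
        for record in records:
            handle.write(json.dumps(record) + "\n")
    return target


# A temporary directory stands in for the bucket so the sketch cleans up after itself.
with tempfile.TemporaryDirectory() as lake_root:
    path = land_raw_records(
        lake_root,
        "example_shop",
        [{"title": "Widget", "price": "$99.99"}],
        date(2024, 3, 5),
    )
    relative_path = path.relative_to(lake_root).as_posix()
    line_count = len(path.read_text(encoding="utf-8").splitlines())

print(relative_path, line_count)
```

Partitioning by source and date keeps each day's raw data separate, so a later job can reprocess a single day without scanning the whole lake.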

The Rise of the Lakehouse

A hybrid model called the data lakehouse combines the low-cost, flexible storage of a data lake with the powerful querying and management features of a warehouse. Platforms like Databricks and Snowflake are at the forefront, allowing you to run warehouse-style queries directly on data in object storage. This simplifies architecture and reduces data duplication.

Caption: A unified lakehouse architecture allows different workloads to run on the same data foundation. Source: Snowflake Official Website

A Practical Checklist for Making Your Choice

- Data Structure: Is your data mostly structured or a mix of unstructured formats? A lake loves variety; a warehouse demands structure.

- Primary Use Case: Do you need a sandbox for data scientists or a fast engine for BI dashboards? The first points to a lake, the second to a warehouse.

- Budget: Object storage for data lakes is significantly cheaper for storing petabytes of data than a high-performance warehouse.

- Scalability: Where do you see your data volume in three years? Both cloud warehouses and lakes scale well, but their pricing models differ.

- Query Speed: For interactive, low-latency analytics, a purpose-built data warehouse almost always outperforms querying a data lake directly.

Thinking through these factors helps you build on a storage foundation that solves today's problems and is ready for future needs. A solid pipeline is crucial for ambitious projects like creating an internal knowledge base, where you must store and access diverse data types efficiently. You can read more about using web data for knowledge base creation to see this in action.

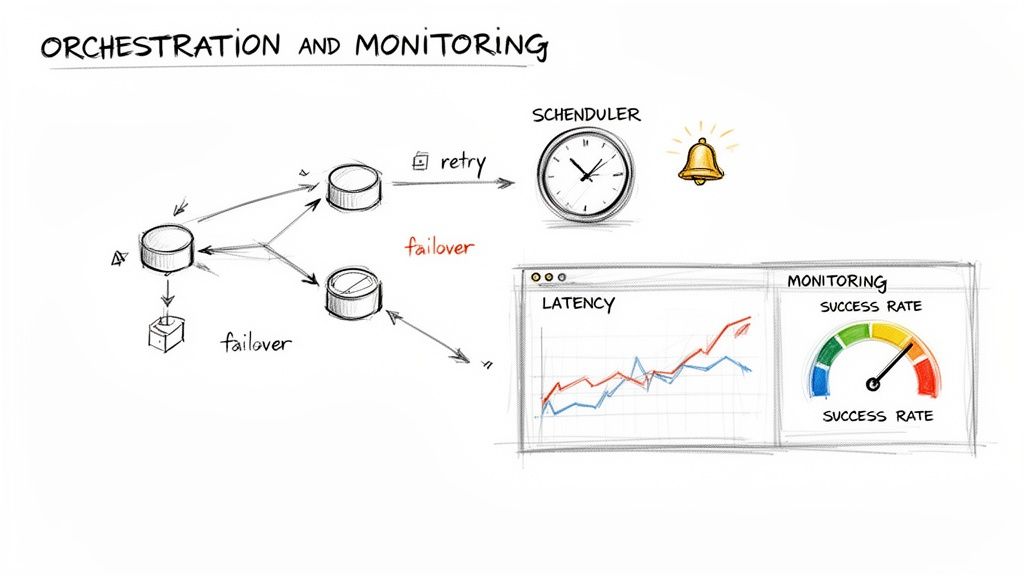

Step 4: Orchestrating and Monitoring for Reliability

A data pipeline isn't "set it and forget it." It requires robust orchestration to automate workflows and constant monitoring to ensure health. This automation separates a collection of fragile scripts from a reliable, self-healing system.

Choosing Your Workflow Orchestrator

A workflow orchestrator is the conductor of your data pipeline. It schedules jobs, manages dependencies, retries failed tasks, and alerts you when something is broken. Two popular open-source options are Apache Airflow and Prefect.

- Apache Airflow: The powerful and flexible incumbent. Workflows are defined as Directed Acyclic Graphs (DAGs) in Python, offering complete programmatic control.

- Prefect: A modern take on orchestration, designed to be more intuitive with a slick UI and support for dynamic workflows.

The core philosophy of both is workflow as code. This allows you to version control, test, and collaborate on your pipelines like any other software. Here's a simple Airflow DAG in Python:

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator

with DAG(
    dag_id='simple_web_data_pipeline',
    start_date=datetime(2023, 1, 1),
    schedule_interval='@daily',  # deprecated since Airflow 2.4 in favor of `schedule`
    catchup=False,  # do not backfill runs between start_date and today
) as dag:
    # Task 1: call the extraction API and save the response to disk
    extract_data = BashOperator(
        task_id='extract_from_api',
        bash_command='curl -X POST "https://api.crawlkit.sh/v1/extract" ... > /tmp/data.json'
    )
    # Task 2: run the transformation script
    transform_data = BashOperator(
        task_id='run_transform_script',
        bash_command='python /opt/airflow/scripts/transform.py'
    )
    extract_data >> transform_data  # This defines the dependency
```

The line `extract_data >> transform_data` tells Airflow that the transform task can only run after the extract task succeeds. For a more advanced first step, our guide on how to build a web crawler shows how data extraction can fit into a larger orchestrated pipeline.
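If you want to see what a dependency graph buys you without installing an orchestrator, the standard library's `graphlib` module (Python 3.9+) can compute a valid run order from the same kind of declaration. This is only an illustration of the idea, not how Airflow schedules tasks internally, and `load_to_warehouse` is a hypothetical third task added to make the chain longer.

```python
from graphlib import TopologicalSorter

# Map each task to the set of tasks it depends on.
# load_to_warehouse is a hypothetical third task added for illustration.
dependencies = {
    "extract_from_api": set(),
    "run_transform_script": {"extract_from_api"},
    "load_to_warehouse": {"run_transform_script"},
}

# static_order() yields tasks so that every dependency comes before its dependents.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)
```

A cycle in the declaration raises `graphlib.CycleError`, which is the same property the "acyclic" in DAG guarantees: every task has a well-defined point at which it is safe to run.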

The Pillars of Pipeline Observability

Orchestration is about doing the work; observability is about knowing how it's going. A solid monitoring strategy comes down to three key areas:

- Operational Metrics: Track job success/failure rates, task duration, and resource usage (CPU, memory). This is your first line of defense.

- Data Latency & Freshness: Measure the time between an event occurring at the source and the data being ready for use.

- Data Quality Scores: Monitor for null counts, schema drift, and validation pass rates. A dip in quality scores is an early warning of "data rot."

Caption: A monitoring dashboard provides a single pane of glass into your pipeline's health. Source: Outrank

A popular open-source stack for monitoring is Prometheus + Grafana. Prometheus scrapes and stores time-series metrics from your pipeline tasks, and Grafana visualizes them in interactive dashboards, giving you critical real-time visibility.
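Before wiring up a full metrics stack, it can help to see how small the underlying checks are. The sketch below computes a null rate and a freshness lag for one batch in plain Python. The field names, sample batch, and alert thresholds are all hypothetical; in a real pipeline you would export these numbers as metrics rather than print them.

```python
from datetime import datetime, timedelta, timezone


def null_rate(records, field):
    """Fraction of records where the field is missing or None."""
    if not records:
        return 0.0
    missing = sum(1 for record in records if record.get(field) is None)
    return missing / len(records)


def freshness(records, now, timestamp_field="scraped_at"):
    """Age of the newest record, as a timedelta."""
    newest = max(datetime.fromisoformat(r[timestamp_field]) for r in records)
    return now - newest


batch = [
    {"title": "Widget", "price": 99.99, "scraped_at": "2024-03-05T10:00:00+00:00"},
    {"title": "Gadget", "price": None, "scraped_at": "2024-03-05T11:30:00+00:00"},
    {"title": "Gizmo", "scraped_at": "2024-03-05T09:15:00+00:00"},
    {"title": "Doohickey", "price": 12.0, "scraped_at": "2024-03-05T11:00:00+00:00"},
]
# A fixed "now" keeps the example reproducible; use datetime.now(timezone.utc) in practice.
now = datetime(2024, 3, 5, 12, 0, tzinfo=timezone.utc)

price_null_rate = null_rate(batch, "price")
lag = freshness(batch, now)

# Hypothetical thresholds; tune them to your own pipeline.
alerts = []
if price_null_rate > 0.25:
    alerts.append("price null rate too high")
if lag > timedelta(hours=1):
    alerts.append("data is stale")
print(price_null_rate, lag, alerts)
```

Tracking these values per run, rather than only checking them against a threshold, is what lets you spot a gradual decline before it becomes an outage.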

Frequently Asked Questions

Caption: A robust pipeline includes orchestration and monitoring to ensure reliability and performance. Source: Outrank

What is the difference between ETL and ELT?

The main difference is when transformation occurs. In ETL (Extract, Transform, Load), data is transformed before being loaded into a data warehouse. In ELT (Extract, Load, Transform), raw data is loaded directly into a modern cloud warehouse like Snowflake or BigQuery, and transformations happen inside the warehouse, leveraging its massive processing power. ELT is the dominant approach today.

How do I handle dynamic, JavaScript-heavy websites?

Scraping sites built with React or Angular requires a headless browser to render JavaScript. Managing this infrastructure yourself is a maintenance nightmare. A developer-first platform like CrawlKit abstracts this away. You make a single API call, and CrawlKit handles the headless browser rendering, returning structured JSON without you needing to manage any scraping infrastructure.

What are the most important pipeline metrics to monitor?

Focus on three key areas:

- Operational Health: Job success/failure rates, task duration, and resource usage.

- Data Quality: Null counts, schema drift, and record completeness.

- Performance: Data latency (freshness) and throughput (volume processed).

Should I build a data pipeline from scratch or use managed services?

A hybrid approach leaning on managed services is almost always the smartest path. Building everything from scratch means becoming an expert in proxies, infrastructure, and orchestrator maintenance. Using managed services like CrawlKit for ingestion, a cloud data warehouse for storage, and a managed orchestrator lets your team focus on business logic instead of reinventing the wheel.

How can I ensure my pipeline is fault-tolerant?

Use a workflow orchestrator like Airflow or Prefect. These tools provide automatic retries with exponential backoff for transient errors, smart dependency management to prevent cascading failures, and robust alerting. This automation keeps small issues from becoming full-blown outages.
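To show what retrying with exponential backoff means in practice, here is a standalone sketch in plain Python. It is not the implementation used by Airflow or Prefect, just the underlying idea: wait 1 second, then 2, then 4, and give up after a fixed number of attempts. The `flaky_extract` task is a hypothetical stand-in for a network call, and the `sleep` parameter is injectable so the example runs instantly.

```python
import time


def run_with_retries(task, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call task(); on failure wait base_delay * 2**attempt seconds, then retry."""
    for attempt in range(max_attempts):
        try:
            return task()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** attempt)


calls = {"count": 0}


def flaky_extract():
    """Hypothetical task that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient network error")
    return {"status": "ok"}


# Record the delays instead of actually sleeping so the example finishes immediately.
delays = []
result = run_with_retries(flaky_extract, sleep=delays.append)
print(result, delays)
```

Doubling the delay gives a struggling upstream service progressively more time to recover, while the attempt cap ensures a persistent failure is still raised and can trigger an alert.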

What are the main components of a data pipeline?

A data pipeline consists of a source (e.g., an API or database), an ingestion process, a transformation engine to clean and structure the data, a storage destination like a data warehouse or data lake, and an orchestration tool to automate and monitor the entire workflow.

Is SQL used in data pipelines?

Yes, SQL is a cornerstone of modern data pipelines, especially in ELT workflows. Tools like dbt allow engineers to write transformation logic using SQL directly within the data warehouse. SQL is also the primary language for querying data for analysis once it's in the warehouse.

Why is data ingestion the most challenging step?

Data ingestion, particularly from external web sources, is challenging due to unpredictable source formats, anti-bot protections, rate limiting, and the need to render JavaScript. A failure at this first step compromises the entire pipeline, which is why using a reliable, API-first ingestion tool is critical.

Next Steps

You now have the blueprint for building a production-ready data pipeline, from web data ingestion to reliable orchestration. The key is to choose tools that abstract away infrastructure, allowing your team to focus on shaping data to solve business problems, not on managing proxies or headless browsers.

Get the ingestion piece right from the start with a clean, reliable data source, and you'll prevent a world of hurt downstream.

Keep the Momentum Going

Ready to dive deeper? These guides provide more hands-on examples and advanced techniques for feeding high-quality, structured web data into your applications and models.

- A Developer's Guide to Web Scraping with APIs: Graduate from simple scripts to a scalable API for reliable data extraction.

- How to Turn Any Website into a Structured JSON API: A practical walkthrough for transforming messy HTML into clean, queryable JSON.

- Preparing Web Data for Large Language Models (LLMs): Learn the specific cleaning and structuring techniques required to get web data ready for model training and RAG.