Meta Title: How to Web Scrape with Python: A Practical Step-by-Step Guide

Meta Description: Learn how to web scrape with Python using Requests, BeautifulSoup, and Playwright. This guide covers static and dynamic sites, scaling, and best practices.

Learning how to web scrape with Python opens up a massive ecosystem of libraries purpose-built for data extraction. Whether you need to gather product prices, monitor social media trends, or fuel an AI model, Python provides a powerful and accessible toolkit. This guide will walk you through the essential libraries and best practices, from simple static pages to complex, JavaScript-heavy websites.

Table of Contents

- Why Python Is the Go-To for Web Scraping

- Scraping Static Sites with Requests and BeautifulSoup

- Tackling Dynamic JavaScript Sites with Playwright

- Scaling Your Crawlers with the Scrapy Framework

- Scraping Ethically and Avoiding Blocks

- Using a Web Scraping API to Simplify Everything

- Frequently Asked Questions (FAQ)

- Next Steps

Why Python Is the Go-To for Web Scraping

Python is consistently the top choice for web scraping projects for two main reasons: its straightforward syntax and an incredible collection of specialized libraries.

Python’s clean, readable code means you can build maintainable scrapers in fewer lines than almost any other language. This makes web scraping accessible to data scientists, SEO specialists, and anyone who needs to gather web data without getting bogged down in boilerplate code. The community support is also massive, with endless tutorials and pre-built solutions for nearly any scraping problem imaginable.

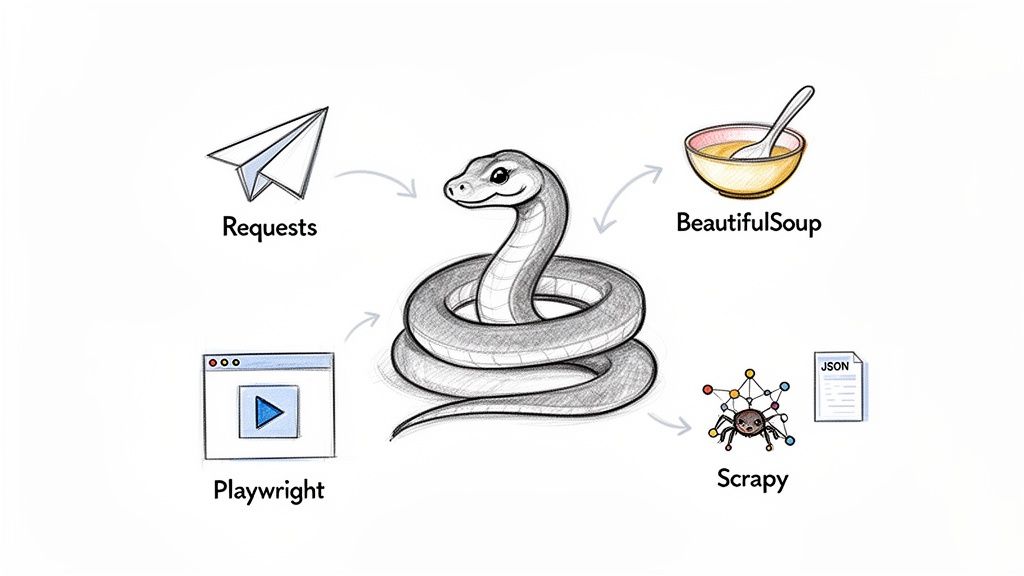

Python's library ecosystem provides a tool for every web scraping challenge. Image Source: CrawlKit.

The Core Library Stack

At the heart of Python's scraping world are a few essential libraries, each with a distinct job. Once you understand how they work together, you'll have a complete toolkit for tackling almost any site.

Here’s a quick breakdown of the core tools and what they're best for:

| Library | Primary Use Case | Best For |
|---|---|---|
| Requests | Making HTTP requests to fetch a webpage's content. | Simple, static HTML pages where no JavaScript rendering is needed. |
| BeautifulSoup | Parsing and navigating messy HTML/XML documents. | Extracting specific elements from raw HTML using tags, IDs, or classes. |
| Playwright/Selenium | Automating a real web browser to render JavaScript. | Dynamic sites, single-page applications (SPAs), and content that loads on scroll. |
| Scrapy | A complete framework for building scalable web crawlers. | Large-scale projects, crawling entire websites, and managing complex data pipelines. |

These libraries form a powerful, layered stack. You start with Requests to get the raw HTML, then pass it to BeautifulSoup to make sense of it. When a site gets tricky and loads its content with JavaScript, you bring in a browser automation tool like Playwright to render the page just like a real user would. For projects that need to crawl thousands of pages, Scrapy handles all the heavy lifting of asynchronous requests, data processing, and exporting.

From Raw HTML to Structured Data

Ultimately, scraping isn't about collecting messy HTML—it's about pulling out clean, structured data. This is where Python excels. You can parse a chaotic webpage and instantly organize the important bits into a clean JSON format.

This structured data is the fuel for databases, analytics dashboards, and machine learning models. Clean, reliable data is the foundation for a huge range of applications, from SEO monitoring to AI pipelines. You can explore a variety of web data use cases for SEO and AI pipelines to see what's possible.

Beyond scraping, Python's ecosystem extends into data analysis, machine learning, and AI-assisted coding, so the same language can carry a project from data collection through to analysis and application. That continuity makes it a versatile choice for data-focused projects.

Scraping Static Sites with Requests and BeautifulSoup

If you’re just starting out with web scraping in Python, the combination of requests and BeautifulSoup is the classic place to begin. This powerful duo is your go-to for static websites—pages where all the content is baked directly into the initial HTML.

Think of simple blogs, news articles, or basic e-commerce listings. If you can right-click, "View Page Source," and see the data you want right there in the text, this method will work perfectly.

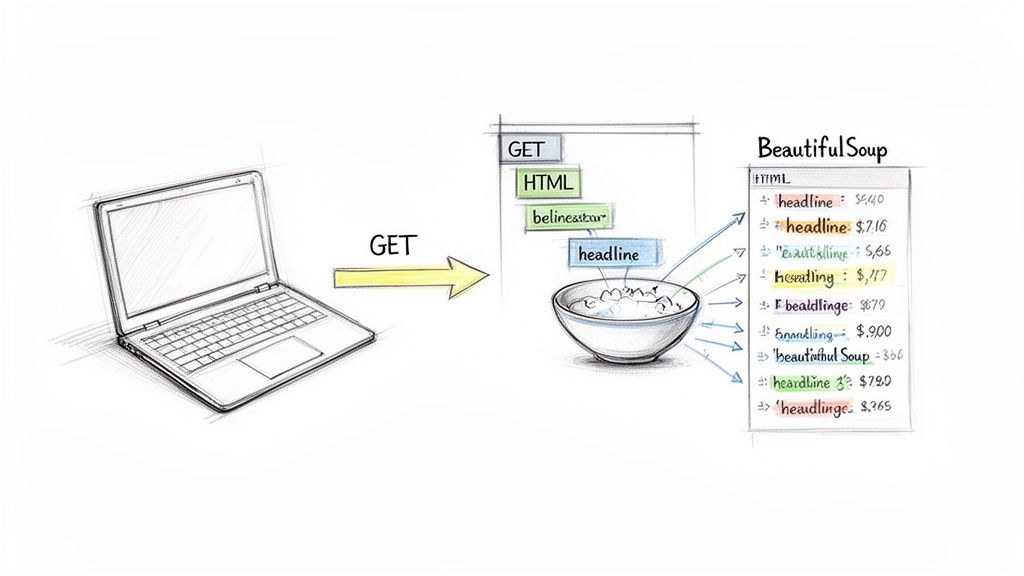

The process is refreshingly simple. First, the requests library acts like a browser, firing off an HTTP request to grab the site's raw HTML. Once you have that, BeautifulSoup steps in to turn that jumble of code into a structured, searchable object.

The fundamental process of static scraping involves fetching HTML and parsing it to extract data. Image Source: CrawlKit.

Fetching the Page Content

Before you can parse anything, you need to get the HTML. The requests library handles this beautifully. You'll need to install both libraries first, which you can do with a quick pip command:

```bash
pip install requests beautifulsoup4
```

With that done, you can send a GET request to your target URL. A successful request gives you back a Response object, which holds everything the server sent back, including the all-important HTML content and a status code. Look for a status code of 200, which means "OK."

Here’s what that looks like in a script:

```python
import requests

url = 'https://books.toscrape.com/'
# A timeout keeps the script from hanging forever on an unresponsive server
response = requests.get(url, timeout=10)

# Always a good idea to check if the request was successful
if response.status_code == 200:
    html_content = response.text
    print("Successfully fetched the page content!")
else:
    print(f"Failed to fetch page. Status code: {response.status_code}")
```

This small script is the foundation of any static scraping project. It gives you the raw material, the HTML, to work with. For more complex jobs, you can use a dedicated service to fetch raw HTML data with an API and offload proxy management and anti-bot measures.

Parsing HTML with BeautifulSoup

Once you have the HTML, it's BeautifulSoup's time to shine. It takes that raw string of HTML and transforms it into a navigable Python object, often called a "soup" object. This lets you move through the document's structure and find elements by their tags, classes, or IDs.

Key Takeaway: At its heart, web scraping is about pattern recognition. You inspect a website's HTML, find the unique selectors that wrap the data you want (like `class="price_color"` or `id="product_title"`), and then tell your script to grab everything that matches that pattern.

Let's expand our script to actually parse the HTML and pull out the titles and prices for all the books on the homepage. To figure out the right selectors, you'll need to use your browser's Developer Tools (right-click on an element and choose "Inspect").

On books.toscrape.com, a quick inspection reveals:

- Each book is contained in an `<article>` tag with the class `product_pod`.

- The title is inside an `<h3>` tag, in the `title` attribute of its `<a>` link.

- The price is wrapped in a `<p>` tag with the class `price_color`.

Now we can put it all together in a complete script:

```python
import json

import requests
from bs4 import BeautifulSoup

url = 'https://books.toscrape.com/'
response = requests.get(url, timeout=10)
response.raise_for_status()  # Stop early on a 4xx/5xx response

# Pass the raw bytes so BeautifulSoup can detect the page's encoding itself;
# this avoids a garbled pound sign in the prices if the charset is misdetected
soup = BeautifulSoup(response.content, 'html.parser')

books_data = []

# Find all the article elements that act as containers for the books
book_articles = soup.find_all('article', class_='product_pod')

for article in book_articles:
    # Dig into each article to find the title and price
    title = article.h3.a['title']
    price = article.find('p', class_='price_color').text

    books_data.append({
        'title': title,
        'price': price
    })

# Use json.dumps for a clean, readable output
print(json.dumps(books_data, indent=2))
```

Run this code, and it will fetch the page, parse the content, and print a nicely structured list of book titles and their prices. This is the fundamental technique that powers countless scraping projects.
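The prices in that output are still strings such as `£51.77`. As a minimal sketch of one possible cleaning step, the hypothetical helper `parse_price` below (not part of Requests or BeautifulSoup) pulls out the numeric part with a regular expression and converts it to a `Decimal`. It assumes prices use a dot as the decimal separator and contain no thousands separators.

```python
import re
from decimal import Decimal

# Matches the first run of digits with an optional decimal part, e.g. "51.77"
_PRICE_PATTERN = re.compile(r"\d+(?:\.\d+)?")

def parse_price(raw_price: str) -> Decimal:
    """Convert a scraped price string such as '£51.77' into a Decimal.

    Hypothetical helper: assumes a dot decimal separator and no thousands
    separators, which is an assumption about the target site's format.
    """
    match = _PRICE_PATTERN.search(raw_price)
    if match is None:
        raise ValueError(f"No numeric price found in {raw_price!r}")
    return Decimal(match.group())

# Sample strings in the shape the scraper returns
sample_prices = ["£51.77", " £53.74 "]
parsed = [parse_price(p) for p in sample_prices]
print(parsed, sum(parsed))
```

`Decimal` is used here rather than `float` so that sums of prices do not pick up binary rounding errors.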

Tackling Dynamic JavaScript Sites with Playwright

Simple HTTP requests are great for static HTML, but they hit a wall with modern web apps. If a site loads content with JavaScript—think infinite scroll, interactive dashboards, or single-page applications (SPAs)—the data you want isn't in the initial HTML. This is where browser automation becomes a non-negotiable skill for anyone serious about how to web scrape with Python.

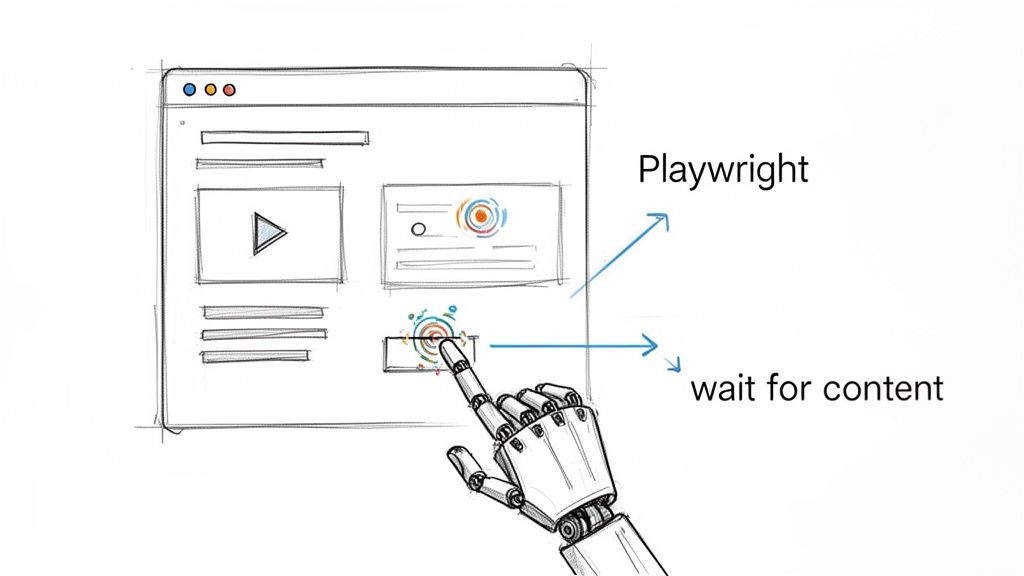

Instead of just grabbing a static file, we need a tool that can launch and control a real web browser. It has to wait for all the JavaScript to execute, render the page completely, and then grab the final HTML. This is exactly what tools like Playwright and Selenium were built for. While Selenium has a long history, Playwright’s modern, async-first design often makes it faster and more reliable.

Browser automation tools like Playwright interact with dynamic websites just like a human user would. Image Source: CrawlKit.

Getting Started with Playwright

Setting up Playwright is simple. First, you install the Python library, then run a single command to download the browsers it needs (Chromium, Firefox, and WebKit).

```bash
# Install the Playwright library
pip install playwright

# Download the necessary browser binaries
playwright install
```

The concept behind Playwright is straightforward: your Python script launches a browser instance, tells it which URL to visit, and then gives it a series of instructions. You can simulate clicks, fill out forms, and, most importantly, wait for specific elements to appear on the page before you try to scrape them.

Waiting for Dynamic Content to Load

Let's say you're scraping a product page where reviews are fetched from an API after the main page has already loaded. If your scraper grabs the HTML immediately, that review section will be empty. Playwright elegantly solves this by letting you pause your script until a specific CSS selector is visible in the DOM.

Here’s a quick Python snippet showing this in action. It navigates to a URL and waits for an element with the ID `reviews-container` (CSS selector `#reviews-container`) to show up before it does anything else.

```python
import asyncio
from playwright.async_api import async_playwright

async def scrape_dynamic_content(url):
    async with async_playwright() as p:
        browser = await p.chromium.launch()
        page = await browser.new_page()
        await page.goto(url)

        # The crucial step: wait for the element to appear.
        # This will wait up to 30 seconds by default.
        await page.wait_for_selector("#reviews-container")

        # Now that we know the content is loaded, we can extract it.
        content = await page.content()
        await browser.close()
        return content

# To run the async function:
# html = asyncio.run(scrape_dynamic_content("https://example.com/products/123"))
```

This event-driven approach is a game-changer for scraping dynamic sites. You'll also run into client-side navigation on these sites, such as redirects triggered from JavaScript. Playwright copes with these because it tracks the browser's actual state rather than a single HTTP response. For developers looking for more advanced patterns, our guide on how to extract data from websites dives into more complex scenarios.

Scaling Your Crawlers with the Scrapy Framework

When your project graduates from scraping a single page to tackling an entire website, simple scripts using requests or Playwright start to feel clunky. This is where you need a proper framework to web scrape with Python at scale, and Scrapy is the industry standard for building fast, powerful, and maintainable web crawlers.

Instead of manually juggling asynchronous requests, cleaning data, and wiring up export logic, Scrapy gives you a robust, batteries-included structure. It handles all the low-level networking headaches, letting you focus purely on the extraction logic.

Scrapy provides the architectural blueprint for scalable crawling projects. Image Source: CrawlKit.

Understanding Scrapy's Core Components

Scrapy’s power lies in its elegant architecture. It might look intimidating at first, but it boils down to a few key components that work together.

- Spiders: These are the heart of your project. A spider is a Python class you write to crawl a specific website. You tell it where to start, how to follow links, and how to parse the data it finds.

- Items: Think of Items as structured containers for your scraped data. They're like Python dictionaries but with a defined schema, which ensures your data output is consistent and clean.

- Pipelines: After a spider extracts an Item, that data gets passed into the Item Pipeline. This is where you can process it—cleaning messy HTML, validating fields, checking for duplicates, and saving the result to a database.

- Selectors: Scrapy comes with its own blazing-fast selectors built on `lxml`, letting you pull data using CSS selectors or XPath expressions.

This modular design makes Scrapy effective and your code easier to write, debug, and maintain.

Building a Practical Blog Spider

Let's build a small but practical Scrapy spider that crawls quotes.toscrape.com, a practice site laid out like a simple blog. It grabs the text and author of every quote and follows the pagination links. First, install Scrapy and generate a new project structure.

```bash
pip install scrapy
scrapy startproject blog_scraper
cd blog_scraper
```

Now, create a new spider file inside the spiders directory called toscrape_spider.py.

```python
import scrapy

class BlogSpider(scrapy.Spider):
    name = "blog"
    start_urls = ["http://quotes.toscrape.com/"]

    def parse(self, response):
        # Extract data from the current page
        for quote in response.css("div.quote"):
            yield {
                "text": quote.css("span.text::text").get(),
                "author": quote.css("small.author::text").get(),
            }

        # Find the 'Next' button link and tell Scrapy to follow it
        next_page = response.css("li.next a::attr(href)").get()
        if next_page is not None:
            yield response.follow(next_page, self.parse)
```

To run it, open your terminal in the project's root directory and use this command:

```bash
scrapy crawl blog -o quotes.json
```

This tells Scrapy to run the spider named "blog" and dump all the extracted data into quotes.json. The spider starts at the start_urls, scrapes the quotes, finds the "Next" page link, and automatically follows it, repeating the process until there are no more pages. If you want to dive deeper, our detailed guide on how to build a web crawler covers more advanced patterns.
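To connect the spider back to the Pipelines component described earlier, here is a minimal sketch of an item pipeline for the quotes it yields. The class name `QuoteCleanupPipeline` and its cleaning rules are illustrative assumptions. What Scrapy itself expects is a class with a `process_item` method, enabled through the `ITEM_PIPELINES` setting.

```python
# blog_scraper/pipelines.py (hypothetical contents for this tutorial project)

class QuoteCleanupPipeline:
    """Normalize the 'text' and 'author' fields yielded by the spider."""

    # Characters trimmed from both ends of a quote: whitespace plus
    # straight and curly double quotation marks
    _TRIM_CHARS = ' \t\n"\u201c\u201d'

    def process_item(self, item, spider):
        # Scrapy calls this once for every item the spider yields
        text = item.get("text") or ""
        author = item.get("author") or ""
        item["text"] = text.strip(self._TRIM_CHARS)
        # Collapse runs of whitespace in the author name
        item["author"] = " ".join(author.split())
        return item

# To enable it, settings.py would need an entry like this
# (300 is an arbitrary priority in the customary 0-1000 range):
# ITEM_PIPELINES = {"blog_scraper.pipelines.QuoteCleanupPipeline": 300}

# Quick check outside Scrapy, using a made-up item
cleaned = QuoteCleanupPipeline().process_item(
    {"text": "\u201cA sample quote.\u201d", "author": "  Jane   Doe "},
    spider=None,
)
print(cleaned)
```

Keeping cleanup in a pipeline rather than in `parse` means the spider stays focused on selectors, and the same cleaning applies to every spider in the project.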

Scraping Ethically and Avoiding Blocks

Nothing torpedoes a web scraping project faster than a blocked IP. When you're learning how to web scrape with Python, it’s essential to realize that long-term success hinges on scraping responsibly. The goal is simple: make your scraper behave less like an aggressive bot and more like a considerate human user.

This approach isn’t just about being a good internet citizen; it's a practical strategy to keep your data pipelines running. An overly aggressive scraper can hammer a server and all but guarantee you'll be blocked.

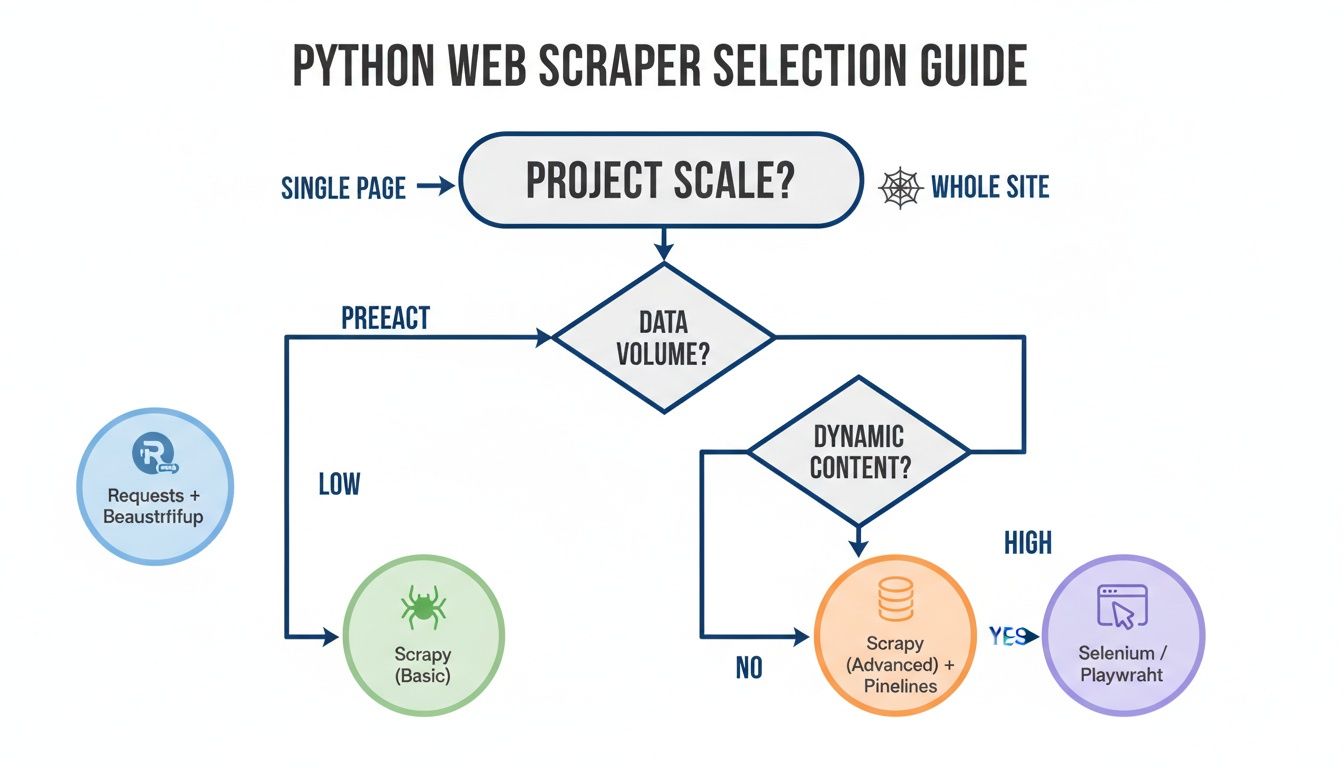

Choosing the right tool is the first step in building a responsible scraper. Image Source: CrawlKit.

Respecting Websites and Their Rules

Before you write any code, your first stop should always be the site's robots.txt file (e.g., example.com/robots.txt). This text file is where website owners tell crawlers which parts of their site are off-limits.

- Always check `robots.txt`: This is the cardinal rule of polite scraping.

- Set a legitimate User-Agent: Most libraries announce themselves with a generic User-Agent that screams "bot!" Change this to mimic a common web browser.

- Implement polite delays: Don't bombard a server with rapid-fire requests. Introduce randomized delays, say between 2 and 5 seconds, to simulate human browsing speed.
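The robots.txt check and the randomized delay can both be sketched with the standard library alone. The example below is an illustration under stated assumptions: the robots.txt rules are made up for the demo, `my-scraper` is a placeholder user agent, and `polite_delay` is a hypothetical helper that picks a pause in the 2 to 5 second range suggested above.

```python
import random
from urllib.robotparser import RobotFileParser

# Made-up rules for the demo; a real crawler would load the site's own file,
# for example with parser.set_url("https://example.com/robots.txt") and parser.read()
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def build_robots_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

def polite_delay(min_seconds: float = 2.0, max_seconds: float = 5.0) -> float:
    """Pick a random pause length; pass the result to time.sleep() between requests."""
    return random.uniform(min_seconds, max_seconds)

robots = build_robots_parser(SAMPLE_ROBOTS_TXT)
for url in ("https://example.com/catalogue/", "https://example.com/private/report"):
    if robots.can_fetch("my-scraper", url):
        print(f"allowed: {url} (would pause {polite_delay():.1f}s before fetching)")
    else:
        print(f"skipped: {url} (disallowed by robots.txt)")
```

The demo only prints the delay instead of sleeping so it runs instantly; in a real crawl you would call `time.sleep(polite_delay())` before each request.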

Technical Strategies to Avoid Blocks

Beyond basic etiquette, you'll need technical tactics to navigate modern anti-bot systems. According to a recent web scraping ecosystem report on GroupBWT.com, 39.1% of Python projects now use proxies specifically to deal with IP-based bans.

A smart scraper is a resilient scraper. Implementing retries with exponential backoff for server errors (like a `502 Bad Gateway`) ensures that temporary network glitches don't cause your entire crawl to fail.
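As a rough sketch of that retry idea, the helper below doubles the wait after each failed attempt and adds a little random jitter. The names `fetch_with_retries` and `backoff_delay` are hypothetical, and `fetch` stands for any callable you supply that returns a `(status_code, body)` tuple, for example a thin wrapper around `requests.get`.

```python
import random
import time

# Server-side errors that are usually worth retrying
RETRYABLE_STATUS = {500, 502, 503, 504}

def backoff_delay(attempt: int, base: float = 1.0, cap: float = 30.0) -> float:
    """Return base * 2**attempt seconds, capped so waits stay bounded."""
    return min(cap, base * (2 ** attempt))

def fetch_with_retries(fetch, url, max_attempts=4, sleep=time.sleep):
    """Call fetch(url) until it returns a non-retryable status or attempts run out."""
    status, body = None, None
    for attempt in range(max_attempts):
        status, body = fetch(url)
        if status not in RETRYABLE_STATUS:
            break
        if attempt < max_attempts - 1:
            # Jitter keeps many workers from retrying in lockstep
            sleep(backoff_delay(attempt) + random.uniform(0, 0.5))
    return status, body

# Demo with a fake fetcher that fails twice, then succeeds.
# sleep is replaced by list.append, so nothing actually waits.
responses = iter([(502, ""), (502, ""), (200, "<html>ok</html>")])
waits = []
result = fetch_with_retries(lambda url: next(responses), "https://example.com/", sleep=waits.append)
print(result, len(waits))
```

Passing `sleep` in as a parameter is a design choice for testability: the default really sleeps, while tests can record the requested waits instead.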

Here’s a quick checklist for building more durable scrapers:

- Rotate Your IP Address: This is the single most effective way to avoid getting flagged. Using a pool of proxies distributes your traffic, making it harder to trace.

- Use Different Proxy Types: Datacenter proxies are fast but easy to detect. Residential and mobile proxies appear as legitimate user traffic and are far more effective.

- Manage Sessions and Cookies: Many sites track user behavior. By maintaining session cookies across requests, you can better mimic a real user's browsing journey.
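One way to picture the rotation and User-Agent advice is the sketch below. It only builds the keyword arguments you would pass to `requests.get`. The proxy addresses are placeholders, the User-Agent string is just an example value, and `next_request_kwargs` is a hypothetical helper.

```python
import itertools

# Placeholder proxy endpoints; substitute the ones from your provider
PROXY_POOL = [
    "http://proxy-1.example.com:8080",
    "http://proxy-2.example.com:8080",
    "http://proxy-3.example.com:8080",
]
_proxy_cycle = itertools.cycle(PROXY_POOL)

# Example browser-like User-Agent value (an assumption, not a recommendation)
BROWSER_USER_AGENT = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0 Safari/537.36"
)

def next_request_kwargs() -> dict:
    """Return headers, proxies, and a timeout, using the next proxy in the pool."""
    proxy = next(_proxy_cycle)
    return {
        "headers": {"User-Agent": BROWSER_USER_AGENT},
        "proxies": {"http": proxy, "https": proxy},
        "timeout": 10,
    }

first = next_request_kwargs()
second = next_request_kwargs()
print(first["proxies"]["https"], second["proxies"]["https"])

# Usage with Requests (not executed here):
# response = requests.get("https://books.toscrape.com/", **next_request_kwargs())
```

For the session and cookie point, a `requests.Session` object keeps cookies between calls, so the same keyword arguments can be passed to `session.get` instead.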

Platforms like CrawlKit are built to handle this complexity for you. As a developer-first web data platform, it manages the entire scraping infrastructure—from proxy rotation and browser fingerprinting to solving CAPTCHAs. This lets you start free and focus on building your application, not on the endless game of avoiding blocks.

Using a Web Scraping API to Simplify Everything

Building scrapers from scratch is a fantastic skill, but managing the infrastructure can become a full-time job. You can quickly find yourself juggling rotating proxies, debugging headless browsers, and dealing with an endless stream of CAPTCHAs.

This is where a developer-first web scraping API like CrawlKit comes in. It’s the logical next step when you need reliable data without the DevOps overhead. Instead of fighting with browser automation or managing a fragile proxy pool, you make a single, clean API call. The service does all the heavy lifting and hands you back clean, structured JSON.

An API abstracts the complex infrastructure of web scraping into a simple, elegant solution. Image Source: CrawlKit.

The API-First Advantage

CrawlKit is a web data platform built to handle the real-world complexities that bring custom scripts to their knees. It’s an API-first solution, meaning you can plug it into your workflow with just a few lines of code and get back to focusing on using data, not just fighting to get it.

Here’s what you get to offload:

- No Scraping Infrastructure: Forget about managing servers, browsers, or proxy networks. The entire stack is handled for you.

- Proxies and Anti-Bot Abstracted: Residential proxies, browser fingerprinting, and CAPTCHA solving are all taken care of automatically for higher success rates.

- Structured Data Out of the Box: Get clean, LLM-ready JSON for things like search results, screenshots, app reviews, and even detailed LinkedIn company or person data.

Key Takeaway: A good web scraping API lets you treat the web like a database. You ask for the data you need, and the API delivers it, handling all the intermediary chaos so you don't have to.

Practical Implementation with CrawlKit

Getting started is refreshingly simple. You can start free and be fetching data in minutes. Here’s a quick cURL example that scrapes a dynamic, JavaScript-heavy page and returns the rendered HTML:

```bash
curl "https://api.crawlkit.sh/v1/scrape/raw-html?url=https%3A%2F%2Fquotes.toscrape.com%2Fjs%2F" \
  -H "x-crawlkit-api-key: YOUR_API_KEY"
```

Dropping this into a Python script is just as easy. The snippet below uses the requests library to do the same thing:

```python
import requests
import json

api_key = 'YOUR_API_KEY'  # Get yours from crawlkit.sh
target_url = 'https://quotes.toscrape.com/js/'

response = requests.get(
    'https://api.crawlkit.sh/v1/scrape/raw-html',
    params={'url': target_url},
    headers={'x-crawlkit-api-key': api_key}
)

if response.status_code == 200:
    # The API returns the rendered HTML in a JSON object
    data = response.json()
    print(json.dumps(data, indent=2))
else:
    print(f"Error: {response.status_code}")
```

This API-driven workflow effectively turns a complex engineering challenge into a straightforward data retrieval task. If you want to go deeper, check out our comprehensive web scraping API guide.

Frequently Asked Questions (FAQ)

Is web scraping with Python legal?

Web scraping exists in a legal gray area. Generally, scraping publicly available data that is not personal or copyrighted is permissible. However, you enter risky territory if you bypass login walls, violate a website's Terms of Service, or overload a server with requests. Always check the robots.txt file and scrape politely with delays. For commercial projects, consulting with a legal professional is advised.

What is the best Python library for web scraping?

There is no single "best" library; the right tool depends on the website's complexity.

- For simple, static sites: The combination of `Requests` (for fetching HTML) and `BeautifulSoup` (for parsing it) is fast, efficient, and easy to learn.

- For dynamic, JavaScript-heavy sites: Use a browser automation tool like Playwright, which can render the page in a real browser before extracting data.

- For large-scale crawling projects: A full-featured framework like Scrapy is the industry standard. It provides a structured environment for managing concurrent requests, data processing pipelines, and error handling.

How can I scrape a website that requires a login?

Your scraper needs to authenticate just like a user. The most reliable method is using a browser automation tool like Playwright to programmatically fill in the login form. The browser instance will then maintain the session cookies, allowing you to navigate to protected pages. For simpler, one-off scripts, you can log in manually in your browser, copy the session cookies from the developer tools, and include them in the headers of your requests calls.

How do I handle pagination when scraping multiple pages?

Pagination requires a loop. Your script should scrape the current page, then look for a "Next Page" link. If the link exists, extract its URL, and instruct your scraper to visit that page and repeat the process. The loop terminates when the "Next Page" link is no longer found on the page, indicating you've reached the end. Scrapy has built-in features that make handling this logic particularly elegant.
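The loop described above might be sketched as follows. `crawl_all_pages` and `scrape_page` are hypothetical names: `scrape_page` is whatever function you write to fetch one URL and return its items plus the `href` of the "Next Page" link (or `None` when there is none). A small in-memory fake site stands in for real HTTP calls here.

```python
from urllib.parse import urljoin

def crawl_all_pages(start_url, scrape_page, max_pages=100):
    """Follow 'next' links from start_url, collecting items from every page."""
    results = []
    visited = set()
    url = start_url
    # Stop when there is no next link, when a link loops back, or at max_pages
    while url and url not in visited and len(visited) < max_pages:
        visited.add(url)
        items, next_href = scrape_page(url)
        results.extend(items)
        # Resolve relative links such as "/page/2/" against the current URL
        url = urljoin(url, next_href) if next_href else None
    return results

# Fake site for the demo: URL -> (items on the page, href of the next link)
FAKE_SITE = {
    "https://example.com/page/1/": (["quote A", "quote B"], "/page/2/"),
    "https://example.com/page/2/": (["quote C"], None),
}

all_items = crawl_all_pages("https://example.com/page/1/", FAKE_SITE.__getitem__)
print(all_items)
```

The `visited` set and the `max_pages` cap are safety nets so that a site whose "next" link points back to an earlier page cannot trap the loop.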

What is the best format to store scraped data?

The two most common formats are JSON and CSV. Use JSON when your data is nested or has a complex structure, as it's highly flexible. Use CSV for tabular data that can be easily opened in a spreadsheet application like Excel or Google Sheets. For larger, ongoing projects, storing data in a database like SQLite (for small projects), PostgreSQL, or MongoDB is recommended for better management and querying capabilities.
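For a concrete comparison, the sketch below writes the same rows to JSON, CSV, and SQLite using only the standard library. The sample rows, the `books` table, and the helper names are assumptions made for the illustration, and the SQLite database lives in memory so the example leaves no files behind.

```python
import csv
import io
import json
import sqlite3

# Sample rows in the shape a scraper might produce
books = [
    {"title": "A Light in the Attic", "price": 51.77},
    {"title": "Tipping the Velvet", "price": 53.74},
]

def to_json(rows) -> str:
    """Serialize rows as JSON; handles nested structures as well as flat ones."""
    return json.dumps(rows, indent=2)

def to_csv(rows) -> str:
    """Serialize flat rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

def to_sqlite(rows, path=":memory:") -> sqlite3.Connection:
    """Insert rows into a 'books' table and return the open connection."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS books (title TEXT, price REAL)")
    conn.executemany("INSERT INTO books (title, price) VALUES (:title, :price)", rows)
    conn.commit()
    return conn

print(to_csv(books))
conn = to_sqlite(books)
print(conn.execute("SELECT title FROM books WHERE price > 52").fetchall())
```

The final query hints at why a database helps as projects grow: filtering and aggregating happen in SQL instead of in ad hoc Python loops over files.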

Can Python scrape data from a PDF?

Yes, Python has several libraries for extracting text and data from PDF files. Libraries like pypdf (the successor to PyPDF2), pdfplumber, and tabula-py can parse PDF documents, extract text content, and even identify and extract tables into a structured format like a pandas DataFrame.

Next Steps

Now that you have the fundamentals of web scraping with Python, you're ready to tackle more advanced projects. These guides will help you level up your data extraction skills.