Meta Title: 12 Best Website Data Extraction Tools for Developers (2024)
Meta Description: Discover the top website data extraction tools. We compare 12 API-first and no-code platforms for developers, including features, pricing, and use cases.

Finding the right website data extraction tools is critical for building reliable data pipelines, powering applications, or training AI models. The market is saturated with options, from simple APIs that handle headless browsers and proxy rotation to comprehensive platforms for orchestrating complex, large-scale crawls. This guide cuts through the noise to help you select the best tool for your specific engineering challenge.

We've curated and analyzed the top 12 platforms designed for developers, data engineers, and technical teams. Instead of generic marketing copy, you'll find a practical breakdown of each tool's core strengths, limitations, and ideal use cases. We evaluate everything from API design and developer experience to the underlying infrastructure that powers them.

This article provides a direct comparison to help you make a faster, more informed decision. Whether you're building a price monitoring system, aggregating market research data, or enriching user profiles, this resource will help you find the right toolkit to get the job done without the overhead of building and maintaining your own scraping infrastructure.

Table of Contents

- 1. CrawlKit

- 2. Zyte

- 3. Apify

- 4. Bright Data

- 5. Oxylabs

- 6. ScrapeHero

- 7. ParseHub

- 8. Octoparse

- 9. Web Scraper (webscraper.io)

- 10. ScraperAPI

- 11. SerpApi

- 12. Diffbot

- Comparison Table

- Making the Right Choice

- Frequently Asked Questions

- Next Steps

1. CrawlKit

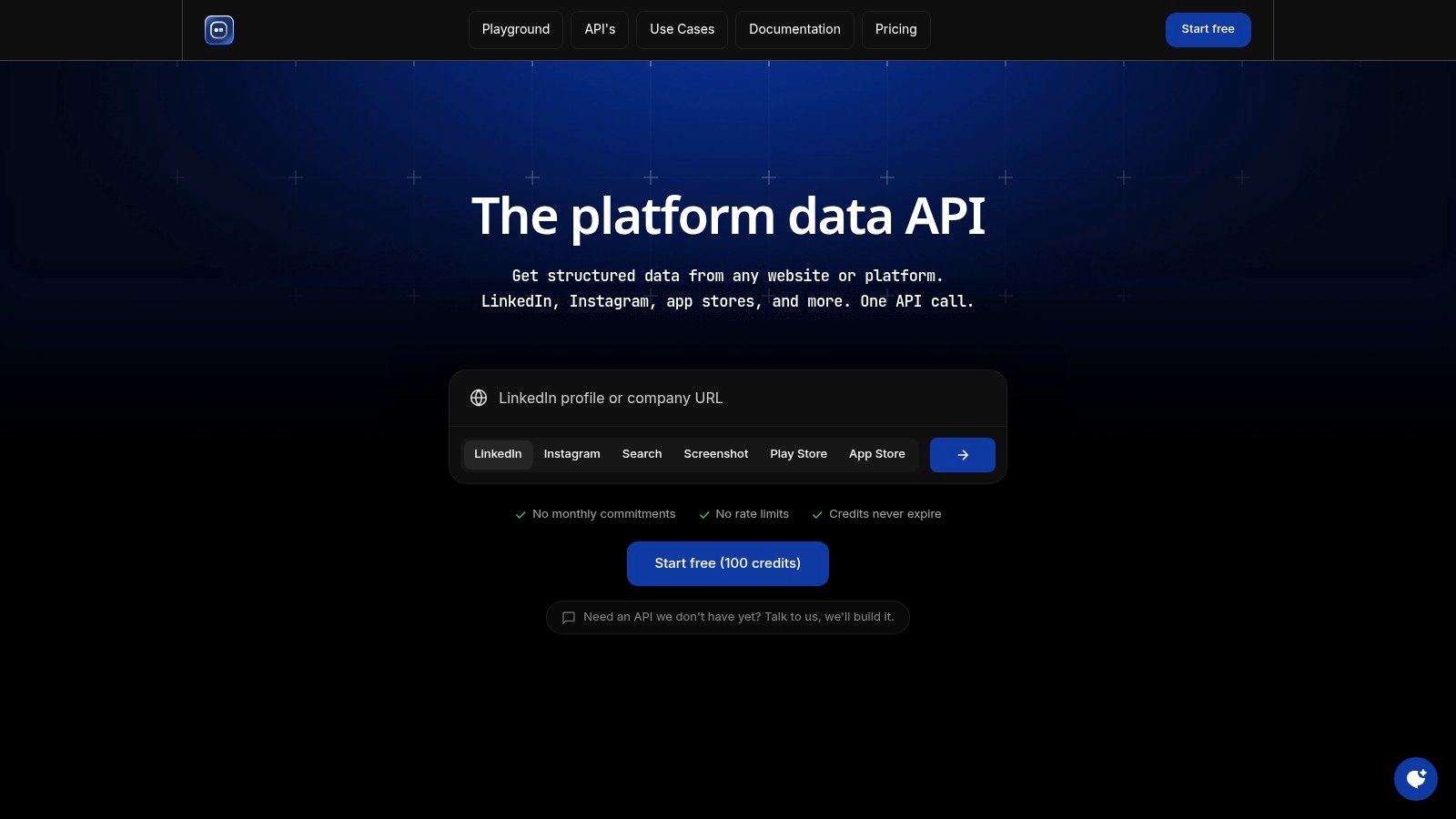

CrawlKit is a developer-first, API-first web data platform designed to transform any website, social media profile, or app store page into structured JSON. It abstracts away the need for any scraping infrastructure, as proxies and anti-bot measures are handled automatically. This approach allows engineering teams to focus purely on data integration, making it an exceptional choice among modern website data extraction tools.

Caption: CrawlKit's unified platform data API simplifies extracting structured JSON from web, social, and app store sources. (Source: CrawlKit)

Why CrawlKit is a Top-Tier Tool

CrawlKit’s core advantage is its consolidation of disparate data sources into a single, elegant API. Instead of juggling separate tools for scraping websites, taking screenshots, or extracting LinkedIn company/person data or app reviews, developers can use one consistent interface. It handles full-page rendering for dynamic, JavaScript-heavy sites, returning validated JSON ready for immediate use.

Here's a simple cURL example to extract a LinkedIn company profile:

```bash
curl -X POST 'https://api.crawlkit.sh/v1/linkedin/company' \
  -H 'Content-Type: application/json' \
  -H 'Authorization: Bearer YOUR_API_KEY' \
  -d '{"url": "https://www.linkedin.com/company/google"}'
```

Key Features and Use Cases

- Unified Data Sources: A single API provides access to web search results, social profiles (LinkedIn), app store reviews (Play Store, App Store), screenshots, and general website content.

- No Scraping Infrastructure: Manages rotating proxies, headless browser rendering, and rate-limit handling, so proxies and anti-bot measures are fully abstracted away from your code.

- Developer-Centric Experience: Offers simple HTTP endpoints, official SDKs (e.g., Node.js), and integrations with common cloud and AI stacks, enabling implementation in minutes.

- Production-Ready Output: Delivers clean, validated JSON, perfect for fine-tuning language models or powering business intelligence tools. Explore our guide on how to extract data from websites effectively.
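For teams working in Python rather than the shell, the cURL call above can be sketched with the standard library. This is a minimal sketch: the endpoint, headers, and `url` field are copied from the cURL example, while the helper names (`build_company_request`, `fetch_company`) are our own. The response schema is not assumed, so the JSON is simply returned as a dict.

```python
import json
import urllib.request

# Endpoint taken from the cURL example above.
API_URL = "https://api.crawlkit.sh/v1/linkedin/company"


def build_company_request(company_url: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the cURL example."""
    payload = json.dumps({"url": company_url}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )


def fetch_company(company_url: str, api_key: str, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON body (no particular schema assumed)."""
    req = build_company_request(company_url, api_key)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the request easy to inspect or test offline; call `fetch_company(...)` only with a real API key.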

Pricing and Access

CrawlKit operates on a transparent, pay-as-you-go credit system. You can start free with 100 credits, and credits never expire. Failed requests are automatically refunded, ensuring you only pay for successful extractions.

- Pros:

- One API for web, social, and app platforms reduces complexity.

- Fully managed infrastructure saves significant engineering resources.

- Transparent, low-cost pricing with a free tier.

- Output is pre-validated and formatted for AI/LLM applications.

- Cons:

- Users are responsible for ensuring their data collection practices comply with relevant legal and platform policies.

- Certain niche endpoints might be in development or require a custom build request.

Website: https://crawlkit.sh

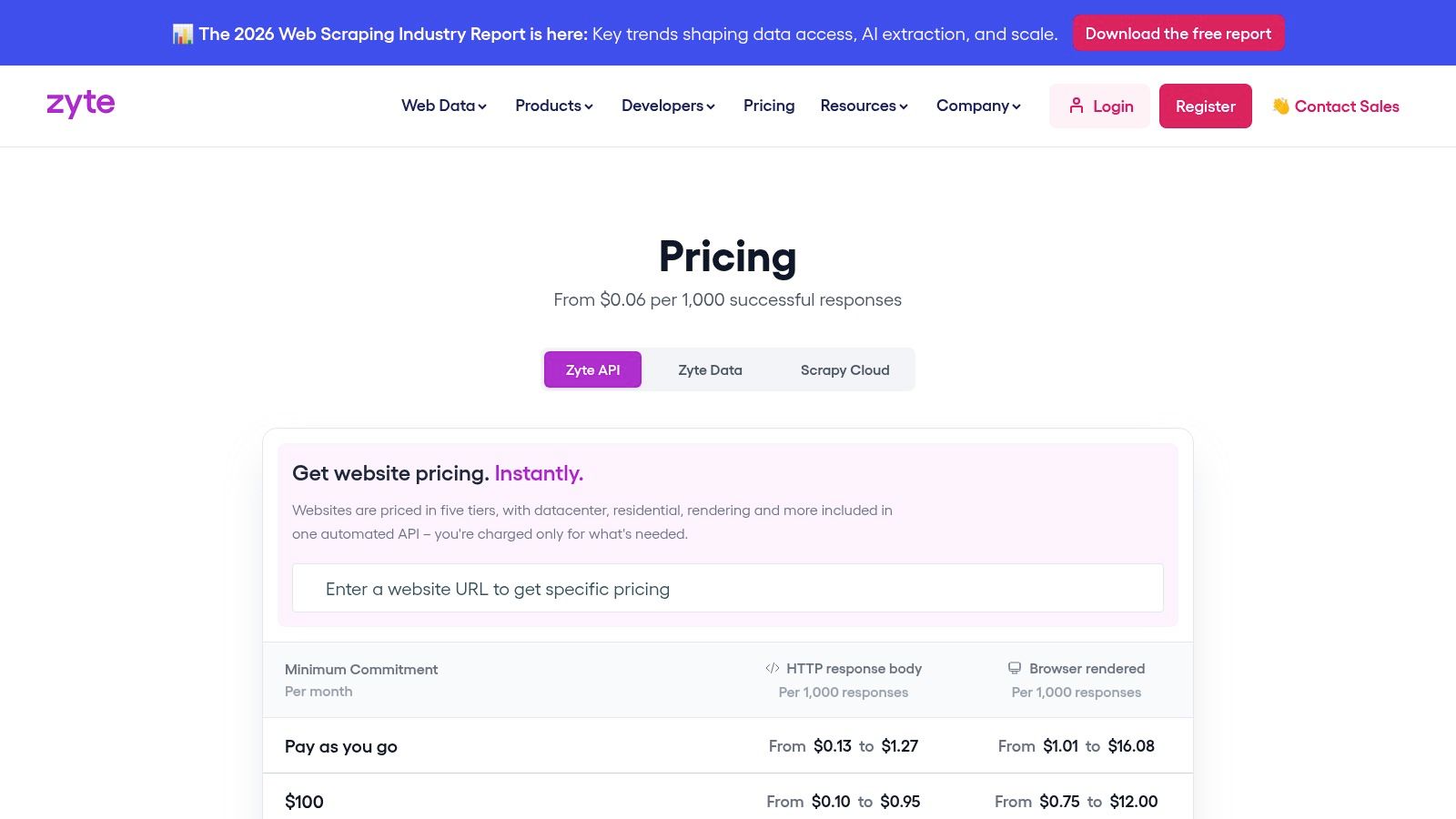

2. Zyte

Zyte, formerly Scrapinghub, offers a comprehensive, developer-focused platform for web scraping at scale. Its Zyte API intelligently selects the right unblocking strategy for each request, making it a robust tool for extracting data from challenging websites.

Caption: Zyte's pricing page details the various tiers available for their web data extraction API. (Source: Zyte)

Key Features & Use Cases

Zyte stands out with its "pay-per-success" model, ensuring you are only billed for successful responses.

- Integrated Infrastructure: Combines residential, datacenter, and mobile proxies with smart rotation, automatically handling CAPTCHAs and blocks.

- Advanced Rendering: Supports full browser rendering for JavaScript-heavy sites and allows for user actions like clicks and scrolls.

- Developer-Friendly: Provides official client libraries for Python and Node.js.

- Typical Use Cases: Ideal for e-commerce price monitoring, real estate data aggregation, and financial data collection.
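As a rough illustration of how Zyte API requests are shaped, the sketch below targets the `/v1/extract` endpoint, sends the API key as the HTTP Basic username, and toggles between `browserHtml` (rendered page) and `httpResponseBody` (raw body, base64-encoded) output. Treat these field names as our reading of Zyte's public documentation and confirm them there; the helper functions are hypothetical, and the sketch only builds the request.

```python
import base64
import json
import urllib.request

ZYTE_EXTRACT_URL = "https://api.zyte.com/v1/extract"


def build_zyte_request(target_url: str, api_key: str, render_js: bool = True) -> urllib.request.Request:
    """Build (but do not send) a Zyte API extraction request."""
    body = {"url": target_url}
    if render_js:
        body["browserHtml"] = True  # HTML of the page after browser rendering
    else:
        body["httpResponseBody"] = True  # raw HTTP body, returned base64-encoded
    # The API key is the Basic-auth username; the password is left empty.
    credentials = base64.b64encode(f"{api_key}:".encode()).decode()
    return urllib.request.Request(
        ZYTE_EXTRACT_URL,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {credentials}"},
    )


def page_html(response_json: dict) -> str:
    """Return page HTML from whichever output field the request asked for."""
    if "browserHtml" in response_json:
        return response_json["browserHtml"]
    raw = base64.b64decode(response_json["httpResponseBody"])
    return raw.decode("utf-8", errors="replace")
```

Because browser-rendered and plain HTTP requests may be billed differently, choosing `render_js=False` where a site does not need JavaScript is one lever for keeping costs predictable.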

Pricing & Limitations

Zyte’s pricing is tiered based on request volume and complexity. While the per-request cost is transparent, forecasting can be difficult because different sites may require different request types, such as browser-rendered versus plain HTTP requests. Proxy management, a key part of any reliable data extraction toolkit (see our guide to the best proxies for web scraping), is handled for you.

- Pros: Pay-per-success model, extensive proxy network, and strong enterprise-level controls.

- Cons: Cost can be difficult to predict, and add-on features increase the overall price.

Website: https://www.zyte.com/

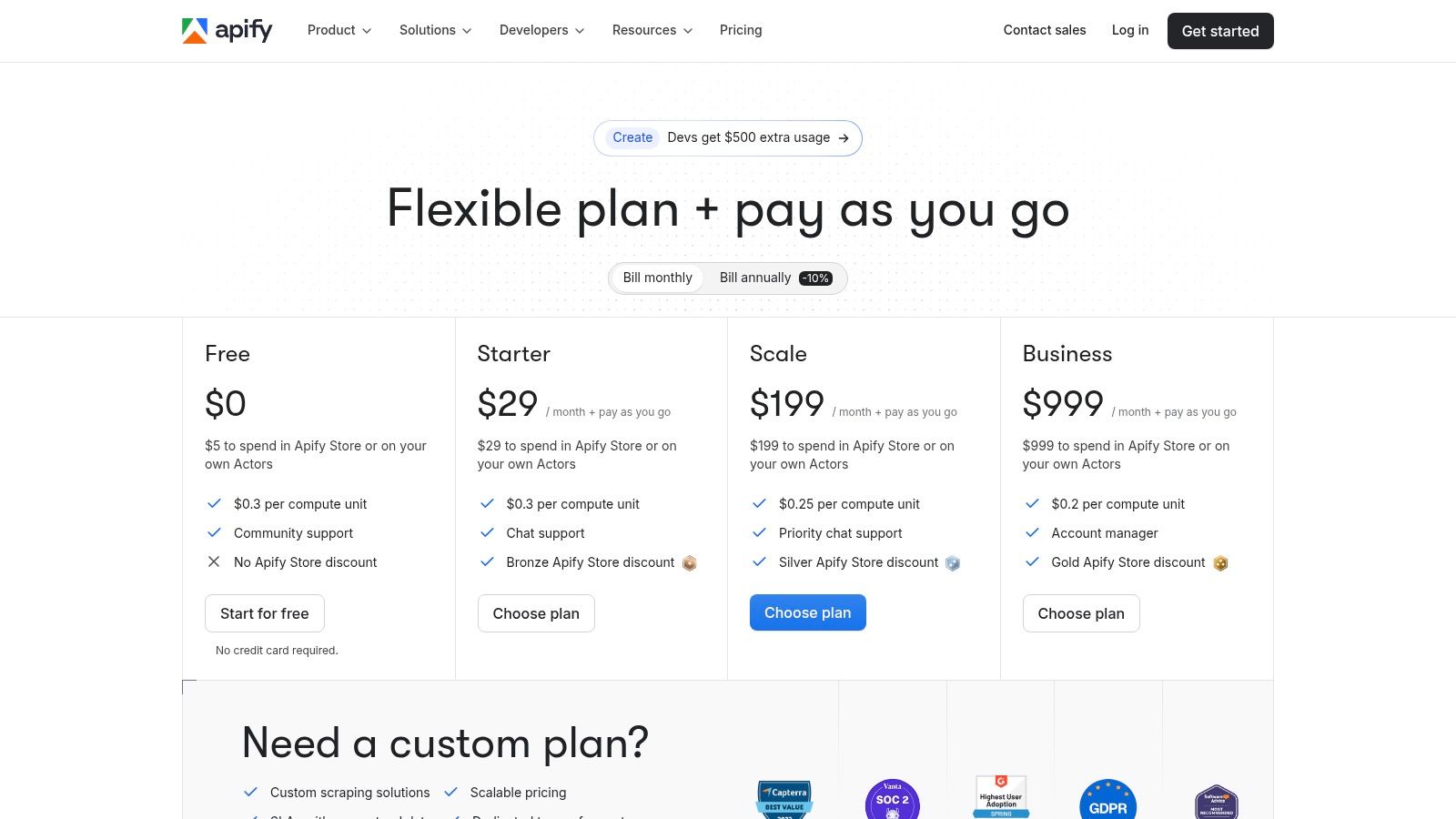

3. Apify

Apify is a full-fledged platform designed to build, run, and manage web scrapers, which it calls "Actors." It stands out with a large marketplace of pre-built scrapers, making it a great choice for teams who want to accelerate development.

Caption: Apify offers a range of pricing plans, including a free tier for developers to start building. (Source: Apify)

Key Features & Use Cases

Apify's core strength lies in its Actor marketplace, which offers a wide array of ready-to-run tools. For those interested in the underlying infrastructure, the platform is also a useful case study in how to build data pipelines effectively.

- Actor Marketplace: A large catalog of pre-built scrapers for common targets like social media, e-commerce sites, and search engines.

- Serverless Cloud Platform: Run scrapers on managed infrastructure with concurrency that scales based on your subscription plan.

- Integrated Proxies: Offers datacenter, residential, and SERP proxies as add-ons.

- Typical Use Cases: Excellent for social media monitoring, lead generation, and market research.
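A common way to consume an Actor from code is to run it synchronously and receive its dataset items in a single HTTP response. The sketch below assumes Apify's `run-sync-get-dataset-items` endpoint with the API token passed as a query parameter; the `apify~web-scraper` Actor ID and the `startUrls` input are illustrative only, because every Actor defines its own input schema.

```python
import json
import urllib.parse
import urllib.request


def build_actor_run_request(actor_id: str, token: str, actor_input: dict) -> urllib.request.Request:
    """Build (but do not send) a synchronous Actor run that returns dataset items.

    actor_id uses the "username~actor-name" form; actor_input must match the
    chosen Actor's own input schema.
    """
    path_id = urllib.parse.quote(actor_id, safe="~")
    query = urllib.parse.urlencode({"token": token})
    url = f"https://api.apify.com/v2/acts/{path_id}/run-sync-get-dataset-items?{query}"
    return urllib.request.Request(
        url,
        data=json.dumps(actor_input).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```

Synchronous runs can time out, so long-running Actors are better started asynchronously and polled; the synchronous form is simply the shortest path for small jobs.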

Pricing & Limitations

Apify’s pricing is based on platform usage, measured in "compute units." While transparent, the total cost can be variable and depends heavily on the complexity and runtime of the Actors you use.

- Pros: Extensive marketplace of ready-to-run scrapers and flexible monetization models.

- Cons: Total cost can be difficult to predict based on Actor usage.

Website: https://apify.com/pricing

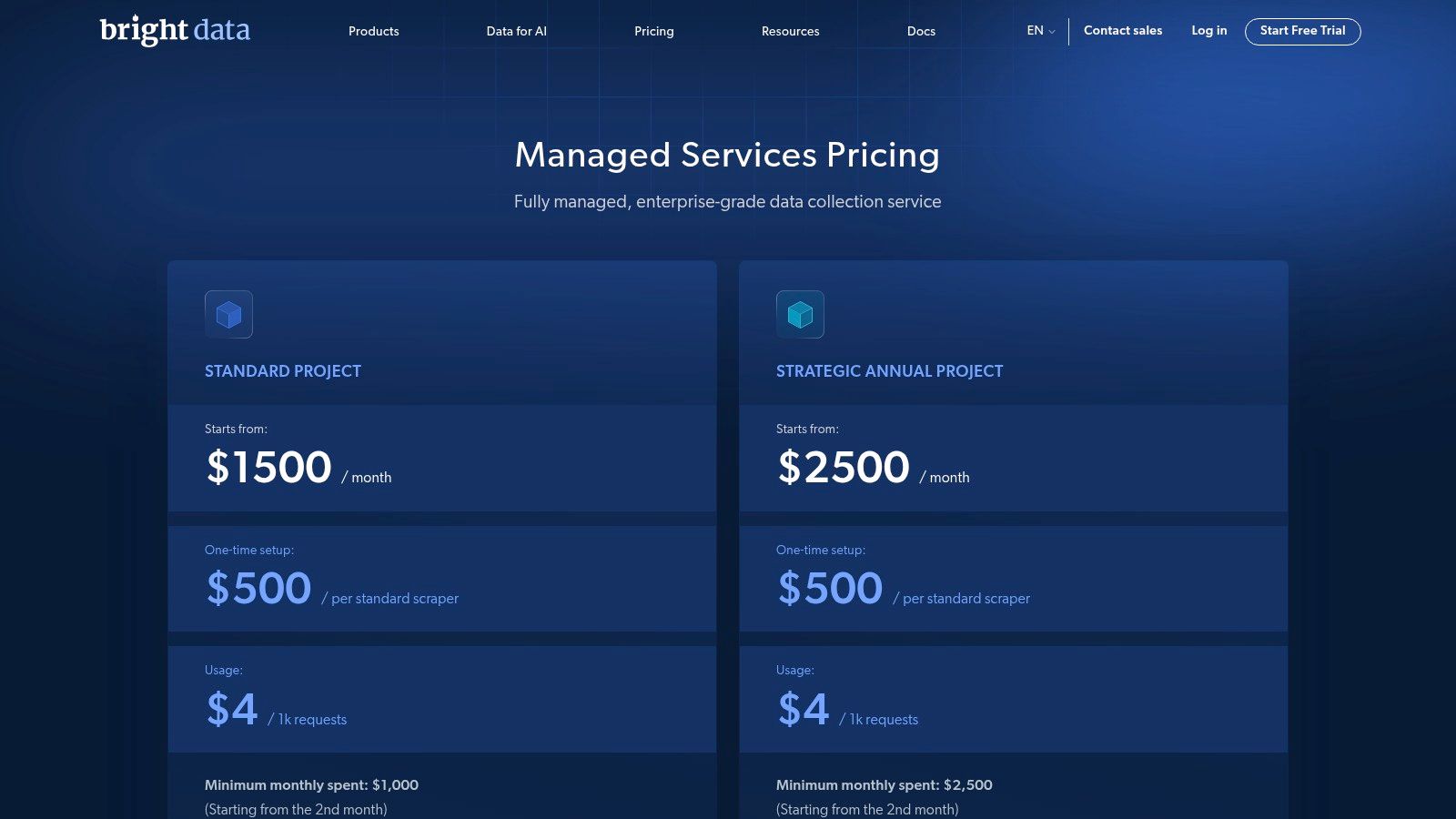

4. Bright Data

Bright Data is an enterprise-grade web data platform offering a vast suite of products, from an extensive proxy network to a comprehensive Web Scraper API and ready-made datasets. The platform’s depth makes it a powerful choice for organizations needing a one-stop solution.

Caption: Bright Data's pricing structure covers a wide array of services, from proxies to managed data collection. (Source: Bright Data)

Key Features & Use Cases

Bright Data stands out with its multi-faceted approach, allowing teams to either build their own solutions or offload collection entirely. Its SOC 2 compliance appeals to organizations with stringent legal requirements. As noted by one analysis from Gartner, its enterprise features are a key differentiator.

- Diverse Product Suite: Offers a massive proxy pool, a Web Unlocker, and a Web Scraper IDE.

- Managed Services & Datasets: Provides fully managed data collection and a dataset marketplace.

- Enterprise-Ready: Features strong compliance credentials and dedicated support.

- Typical Use Cases: Best for large-scale e-commerce intelligence, ad verification, and financial market analysis.

Pricing & Limitations

Bright Data’s pricing is highly segmented across its different products. While powerful, the costs can be significant, especially for managed services which often come with monthly minimums.

- Pros: Excellent performance, strong enterprise support, and SOC 2 compliance.

- Cons: Can be significantly more expensive than competitors.

Website: https://brightdata.com/pricing/managed-services

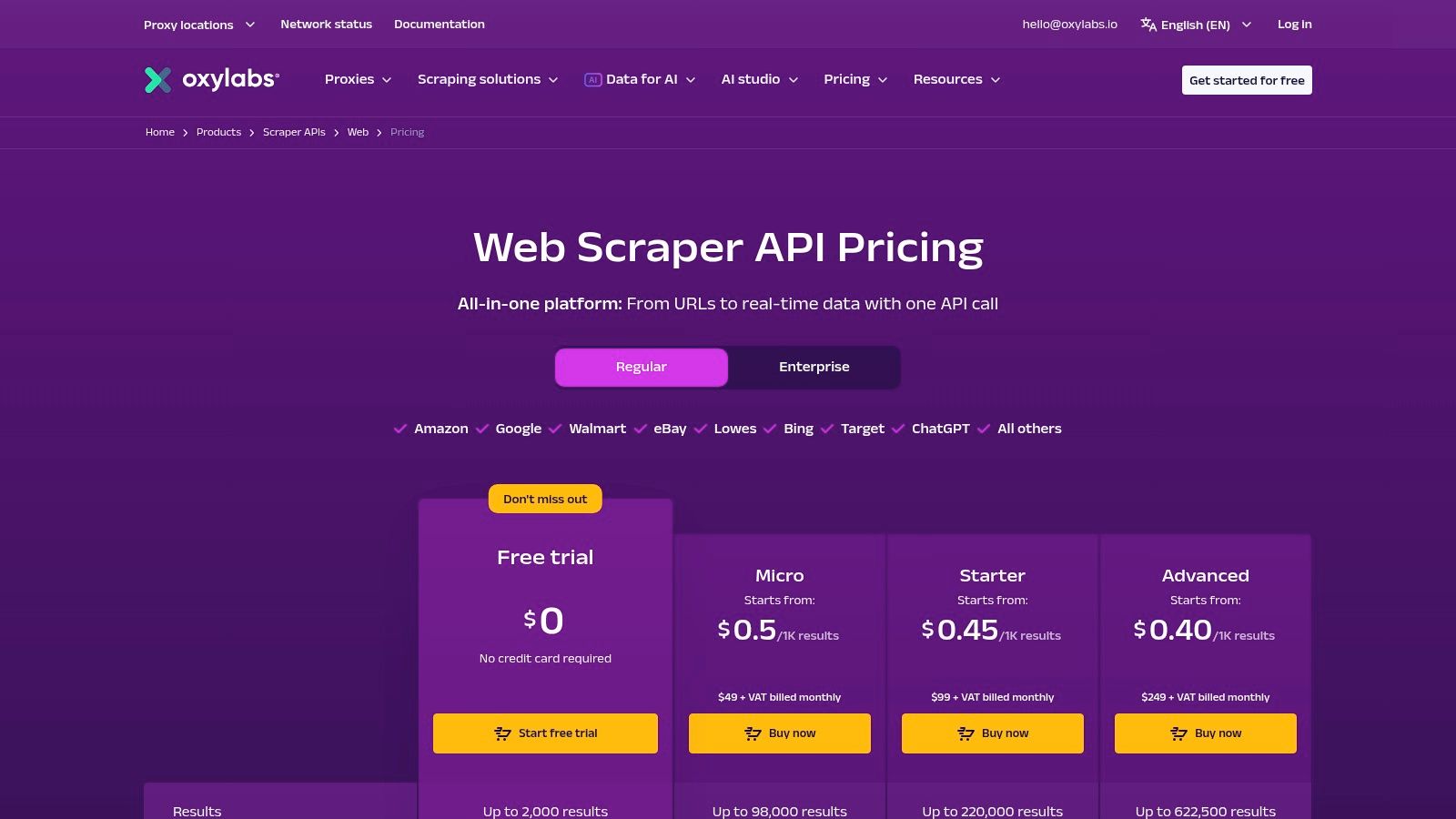

5. Oxylabs

Oxylabs provides enterprise-grade proxy networks and a suite of sophisticated Web Scraper APIs. It is engineered for large-scale data collection operations that demand high reliability and robust unblocking capabilities.

Caption: Oxylabs' pricing for its Web Scraper API scales based on request volume and features. (Source: Oxylabs)

Key Features & Use Cases

Oxylabs distinguishes itself with a pay-per-successful-result model for its Scraper API, where pricing is often tailored to the specific type of website being targeted.

- Global Proxy Infrastructure: Offers one of the largest pools of residential, datacenter, and mobile proxies.

- AI-Powered Unblocker: Integrates an AI-driven unblocking solution that dynamically adapts to anti-bot measures.

- Target-Specific APIs: Provides specialized endpoints optimized for scraping popular targets like Google and Amazon.

- Typical Use Cases: Excellent for ad verification, brand protection, and SEO monitoring.
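A sketch of a call to Oxylabs' Realtime Web Scraper API, assuming the `https://realtime.oxylabs.io/v1/queries` endpoint, HTTP Basic credentials, and the generic `universal` source with optional `geo_location` and `render` parameters. Target-specific sources (for Google or Amazon) take different fields, and the `results`/`content` response shape is an assumption, so check the Oxylabs documentation before relying on any of these names; the helper functions are hypothetical.

```python
import base64
import json
import urllib.request
from typing import Optional

OXYLABS_REALTIME_URL = "https://realtime.oxylabs.io/v1/queries"


def build_oxylabs_request(
    target_url: str,
    username: str,
    password: str,
    geo_location: Optional[str] = None,
    render_js: bool = False,
) -> urllib.request.Request:
    """Build (but do not send) a Realtime API job for an arbitrary URL."""
    payload = {"source": "universal", "url": target_url}
    if geo_location:
        payload["geo_location"] = geo_location  # e.g. "Germany"; accepted values depend on the source
    if render_js:
        payload["render"] = "html"  # request a JavaScript-rendered page
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        OXYLABS_REALTIME_URL,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {credentials}"},
    )


def first_result_content(response_json: dict) -> str:
    """Assumes pages come back in a 'results' list with a 'content' field."""
    results = response_json.get("results", [])
    return results[0].get("content", "") if results else ""
```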

Pricing & Limitations

Oxylabs offers tiered pricing that scales from small projects to massive enterprise volumes. However, the pricing matrix can be nuanced, with costs varying based on the target domain.

- Pros: Exceptional scalability, extensive global proxy coverage, and specialized APIs.

- Cons: Pricing can be complex to navigate, and premium tiers may be expensive for smaller teams.

Website: https://oxylabs.io/

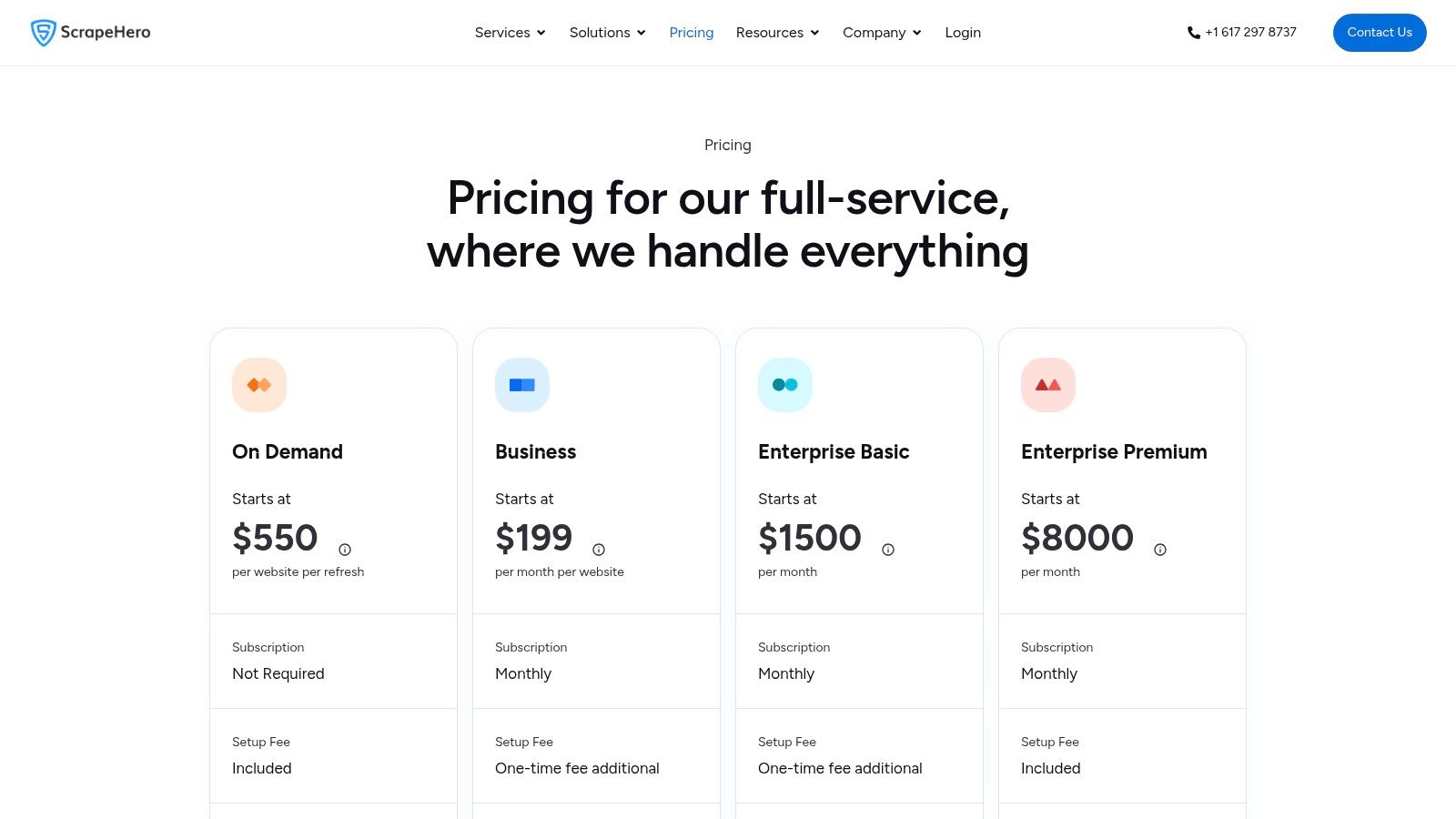

6. ScrapeHero

ScrapeHero offers fully managed, done-for-you data extraction services. It is an excellent option for teams that prefer to outsource the entire process, receiving clean, structured data feeds without managing any infrastructure.

Caption: ScrapeHero's pricing for managed services is typically based on project scope and data volume. (Source: ScrapeHero)

Key Features & Use Cases

ScrapeHero’s core value is eliminating the engineering overhead associated with web scraping. Customers define their data requirements, and ScrapeHero handles the rest.

- Full-Service Data Delivery: Provides structured data on a set refresh frequency delivered via API, S3, or other formats.

- Managed Infrastructure: The entire backend is managed by their team.

- Quality Assurance: Includes a QA process to ensure the data delivered is accurate.

- Typical Use Cases: Best for lead generation, competitive intelligence, and market research.

Pricing & Limitations

ScrapeHero’s pricing is typically on a per-site, per-month basis. While this offers predictable expenses, it can become costly when aggregating data from many sources.

- Pros: Reduces engineering overhead, predictable costs, and includes data quality assurance.

- Cons: Less hands-on control compared to API-based platforms.

Website: https://www.scrapehero.com/pricing/

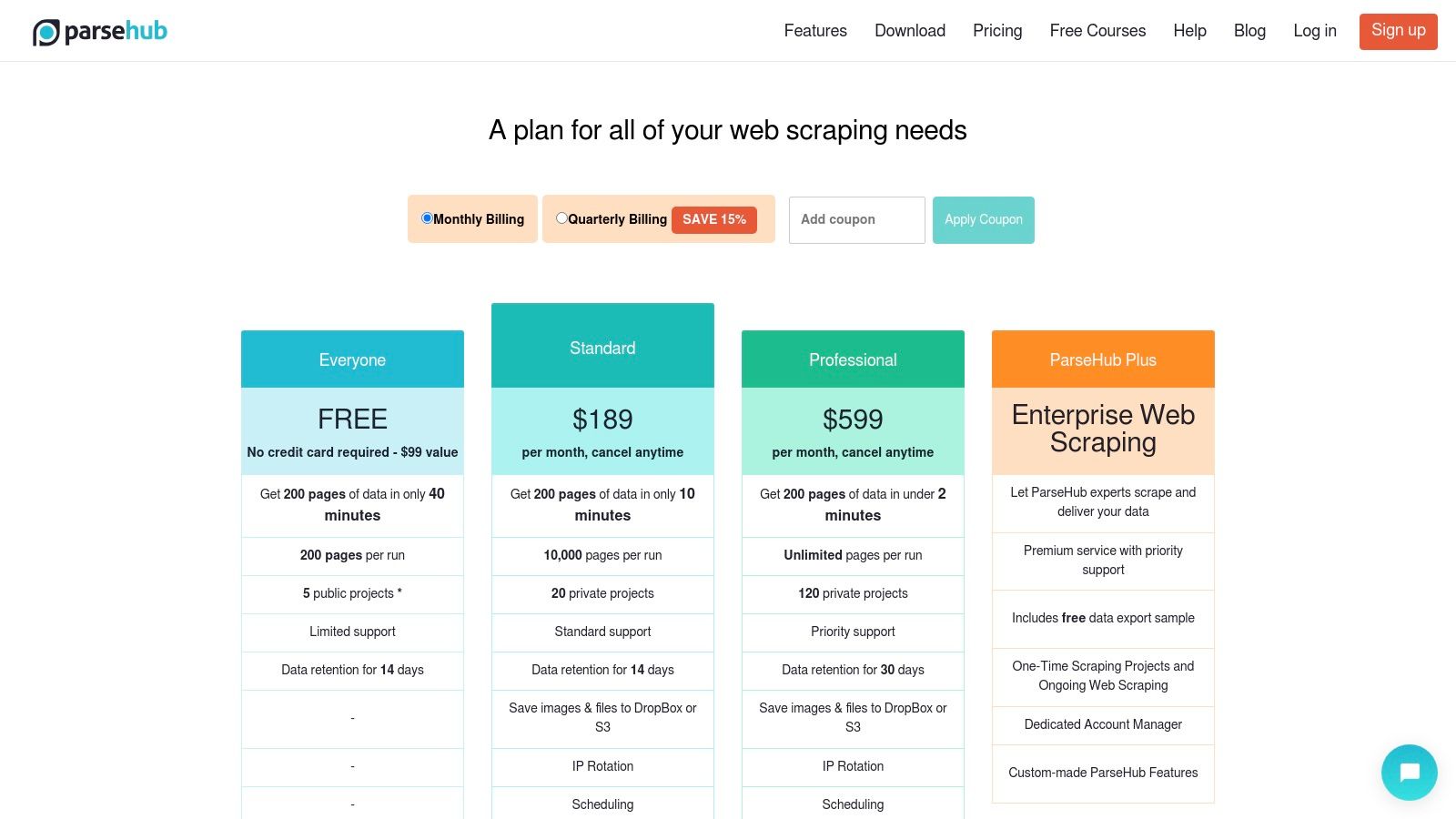

7. ParseHub

ParseHub is a desktop-first, no-code web scraping tool designed for users who need a visual interface for data extraction. It appeals to analysts, marketers, and researchers who require a point-and-click solution without writing any code.

Caption: ParseHub's pricing includes a free plan, with paid tiers unlocking more features and speed. (Source: ParseHub)

Key Features & Use Cases

ParseHub’s main advantage is its visual project builder, which simplifies the process of selecting and structuring data from web pages.

- Visual Project Builder: Interactive point-and-click interface to select data without code.

- Cloud-Based Scheduling: Paid tiers allow for scheduled runs, automating recurring data collection tasks.

- Built-in IP Rotation: Automatically rotates IP addresses to help avoid blocks.

- Typical Use Cases: Great for ad hoc research, lead generation, and simple tasks like tracking e-commerce product prices.

Pricing & Limitations

ParseHub offers a free tier, but it is limited. Paid plans scale based on the number of projects, pages per run, and crawl speed. It can struggle with highly dynamic, JavaScript-heavy websites.

- Pros: Gentle learning curve for non-developers and a free plan for getting started.

- Cons: Pricing can become expensive as project needs grow.

Website: https://www.parsehub.com/pricing

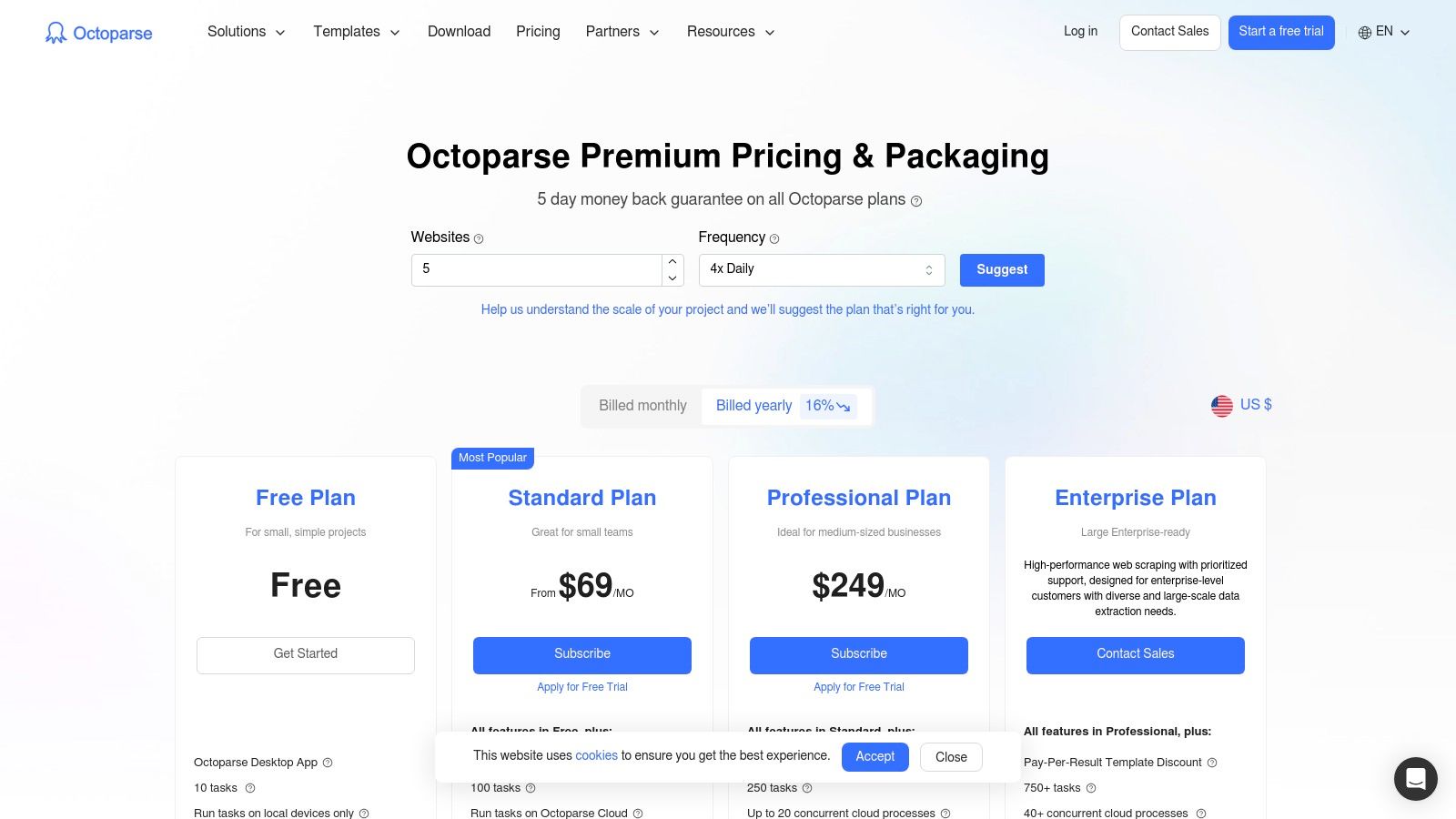

8. Octoparse

Octoparse is a powerful no-code website data extraction tool that serves both non-technical users and teams wanting to avoid extensive development. It provides a visual, point-and-click interface complemented by a cloud-based platform for scheduled extractions.

Caption: Octoparse's pricing tiers offer increasing levels of concurrency and cloud-based features. (Source: Octoparse)

Key Features & Use Cases

Octoparse stands out by offering a comprehensive ecosystem around its visual workflow builder, including ready-to-use templates and premium add-ons.

- Visual Workflow Builder: Design complex scraping tasks with a point-and-click interface.

- Flexible Deployment: Run tasks locally or schedule them to run on their cloud platform.

- Data Export Options: Export data to CSV, Excel, JSON, or directly to databases.

- Typical Use Cases: Excellent for lead generation, academic research, and marketing analysis.

Pricing & Limitations

Octoparse offers several pricing tiers, including a free plan. Advanced features like residential proxies and CAPTCHA solving are available as add-ons, which can increase the total cost.

- Pros: Intuitive visual interface, robust anti-blocking features, and available managed services.

- Cons: Achieving success on highly protected targets often requires purchasing additional add-ons.

Website: https://www.octoparse.com/pricing

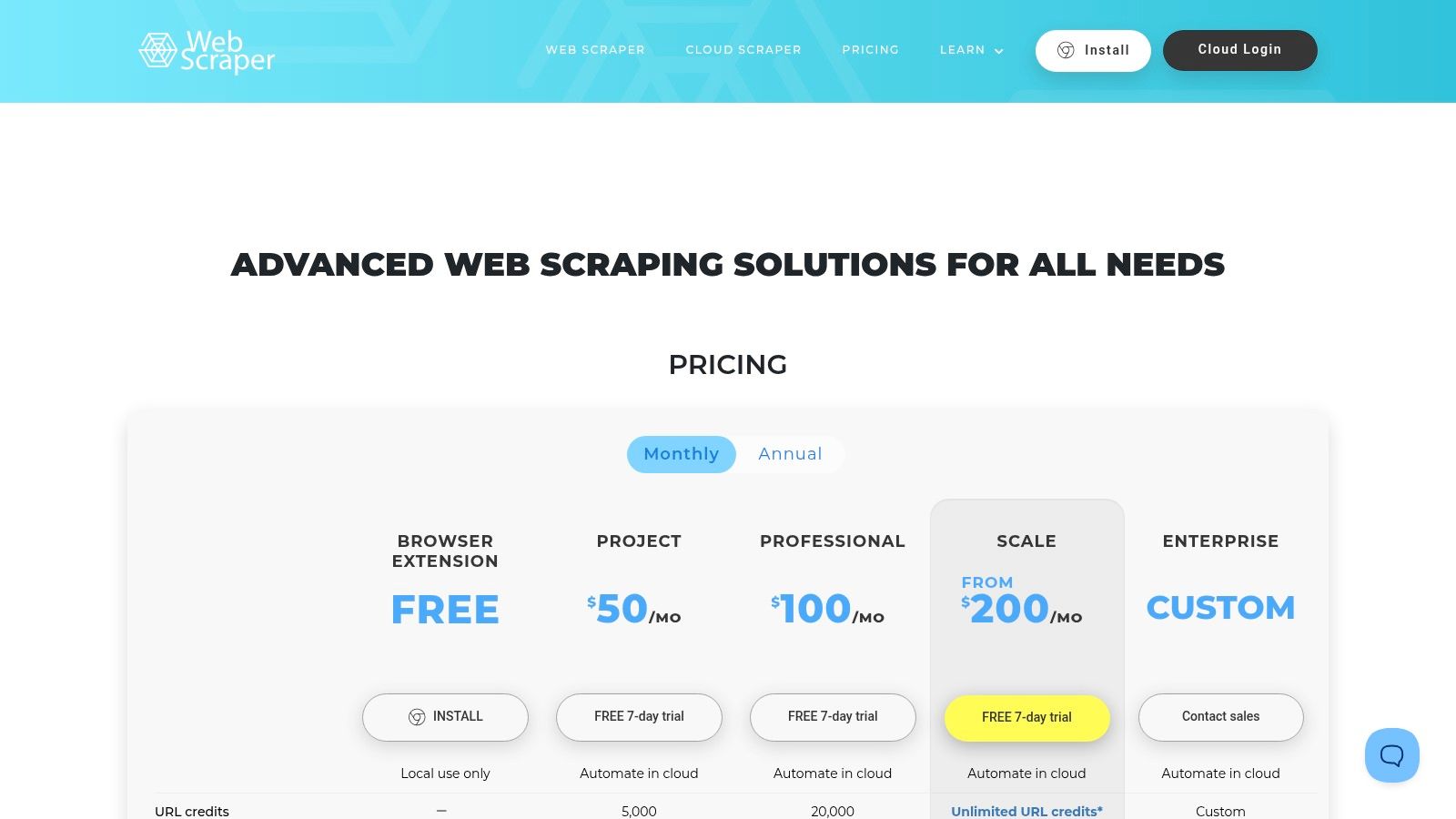

9. Web Scraper (webscraper.io)

Web Scraper offers one of the most accessible entry points into website data extraction, beginning with its popular free browser extension. It's perfect for non-programmers looking for a quick, visual way to build scrapers directly in Chrome or Firefox.

Caption: Web Scraper's pricing is based on a credit system for its cloud-based scraping services. (Source: Web Scraper)

Key Features & Use Cases

The core strength of Web Scraper lies in its point-and-click interface, which simplifies the process of creating a "sitemap" to navigate and extract data from a target website.

- Visual Scraper Builder: A free browser extension allows users to define extraction logic by clicking on elements.

- Cloud Platform: Enables scheduling, IP rotation, and parallel task execution.

- Data Export Options: Export data to CSV, XLSX, and JSON, or access via an API.

- Typical Use Cases: Great for lead generation, market research, and e-commerce data collection.
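Under the hood, a Web Scraper "sitemap" is JSON: one or more start URLs plus a tree of selectors, each naming its parent (`_root` for the start page). The sketch below builds a small sitemap in Python and checks that every parent reference resolves. The field and selector-type names (`startUrl`, `parentSelectors`, `SelectorElement`, `SelectorText`) reflect the extension's export format as we understand it, so export one of your own sitemaps to confirm them; the target site and CSS selectors are hypothetical.

```python
import json

# Hypothetical sitemap: start page -> product cards -> name and price text.
sitemap = {
    "_id": "example-products",
    "startUrl": ["https://example.com/products"],
    "selectors": [
        {"id": "card", "type": "SelectorElement", "parentSelectors": ["_root"],
         "selector": "div.product", "multiple": True},
        {"id": "name", "type": "SelectorText", "parentSelectors": ["card"],
         "selector": "h2", "multiple": False},
        {"id": "price", "type": "SelectorText", "parentSelectors": ["card"],
         "selector": ".price", "multiple": False},
    ],
}


def unresolved_parents(sm: dict) -> list:
    """Return parent ids that point at neither '_root' nor another selector."""
    known = {"_root"} | {s["id"] for s in sm["selectors"]}
    return [p for s in sm["selectors"] for p in s["parentSelectors"] if p not in known]


# JSON text suitable for the extension's sitemap import option.
sitemap_json = json.dumps(sitemap, indent=2)
```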

Pricing & Limitations

Web Scraper's pricing is straightforward, with a generous free tier and tiered cloud plans based on "cloud credits." Its extension-based foundation can struggle with sophisticated anti-bot measures.

- Pros: Extremely low barrier to entry with the free extension and simple credit-based pricing.

- Cons: Less effective against heavily protected websites.

Website: https://webscraper.io/

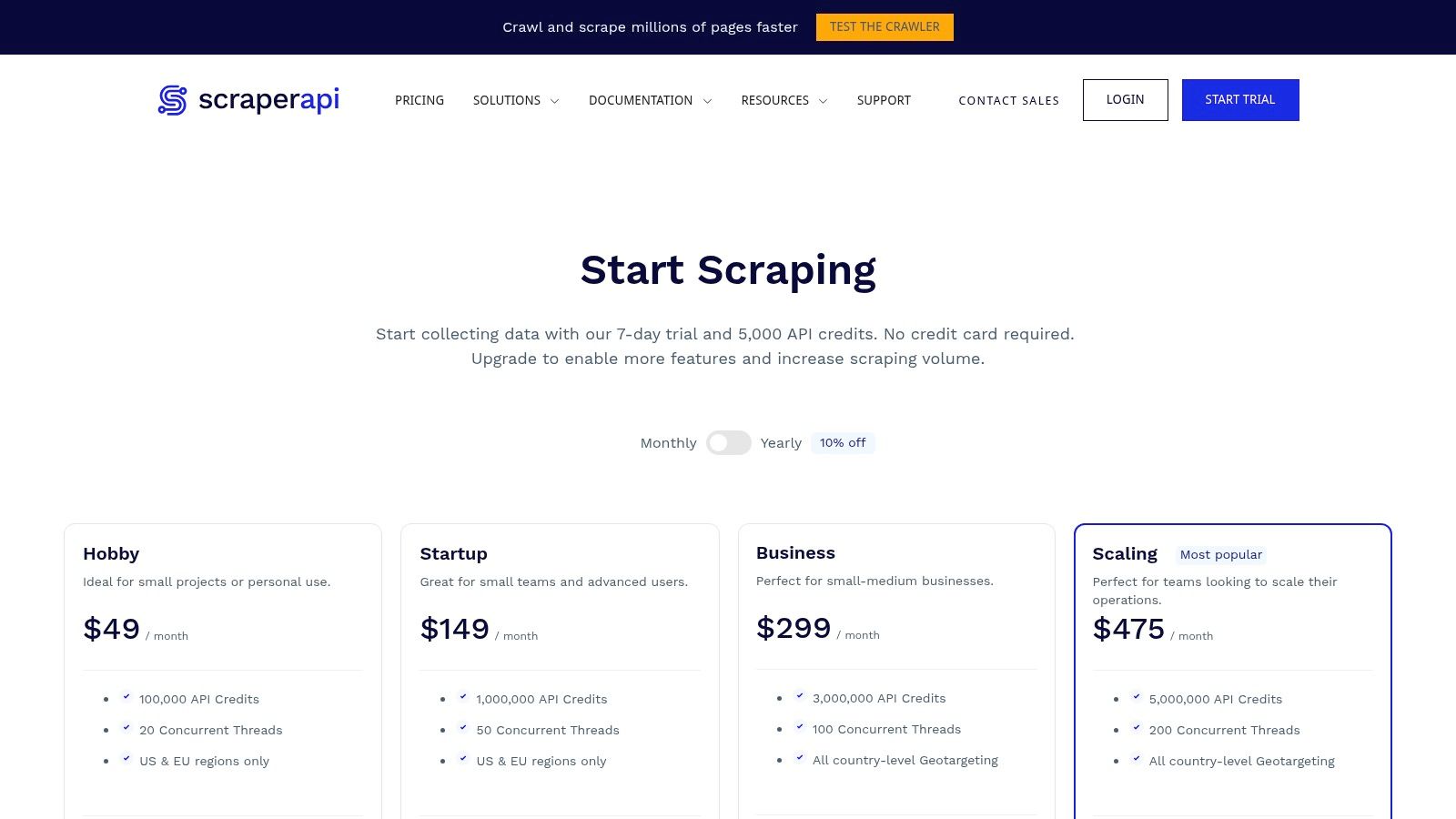

10. ScraperAPI

ScraperAPI provides a straightforward, developer-friendly solution for teams that want to manage their own parsing logic while offloading the complexities of web scraping infrastructure. It's a simple API endpoint that handles proxies, browser rendering, and CAPTCHAs.

Caption: ScraperAPI's plans are based on monthly API credits and concurrency limits. (Source: ScraperAPI)

Key Features & Use Cases

ScraperAPI’s strength lies in its plug-and-play model. Developers simply route their requests through the API. For a deeper analysis of how different services structure their plans, review this complete web scraping API guide for 2025.

- Simple API Integration: Integrates with any existing HTTP client or scraping script.

- Managed Infrastructure: Automatically rotates a pool of over 40 million IPs.

- Optional JS Rendering: Provides the ability to render JavaScript-heavy pages.

- Typical Use Cases: Great for small to mid-sized teams building custom scrapers for e-commerce, lead generation, or SEO monitoring.
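The plug-and-play model amounts to wrapping each target URL in an API call and keeping your own parsing code. The sketch below assumes ScraperAPI's query-string interface (`api_key`, `url`, and an optional `render=true` flag); the helper names and the 70-second timeout are our own choices, not documented requirements.

```python
import urllib.request
from urllib.parse import urlencode

SCRAPERAPI_ENDPOINT = "https://api.scraperapi.com/"


def scraperapi_url(target_url: str, api_key: str, render_js: bool = False) -> str:
    """Wrap a target URL so the request is routed through ScraperAPI."""
    params = {"api_key": api_key, "url": target_url}
    if render_js:
        params["render"] = "true"  # opt in to JavaScript rendering
    return SCRAPERAPI_ENDPOINT + "?" + urlencode(params)


def fetch_html(target_url: str, api_key: str, render_js: bool = False) -> str:
    """Fetch raw HTML; parsing stays in your own code."""
    # Rendered requests can be slow, so use a generous timeout.
    with urllib.request.urlopen(scraperapi_url(target_url, api_key, render_js), timeout=70) as resp:
        return resp.read().decode("utf-8", errors="replace")
```

Note that `urlencode` percent-encodes the target URL, so query strings on the target page survive the trip intact.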

Pricing & Limitations

The pricing model is based on monthly subscriptions that provide a set number of API credits. More advanced features like premium proxies are restricted to higher-priced tiers.

- Pros: Generous 7-day free trial, simple credit-based pricing, and easy integration.

- Cons: JavaScript rendering and premium IPs are tied to more expensive plans.

Website: https://www.scraperapi.com/pricing/

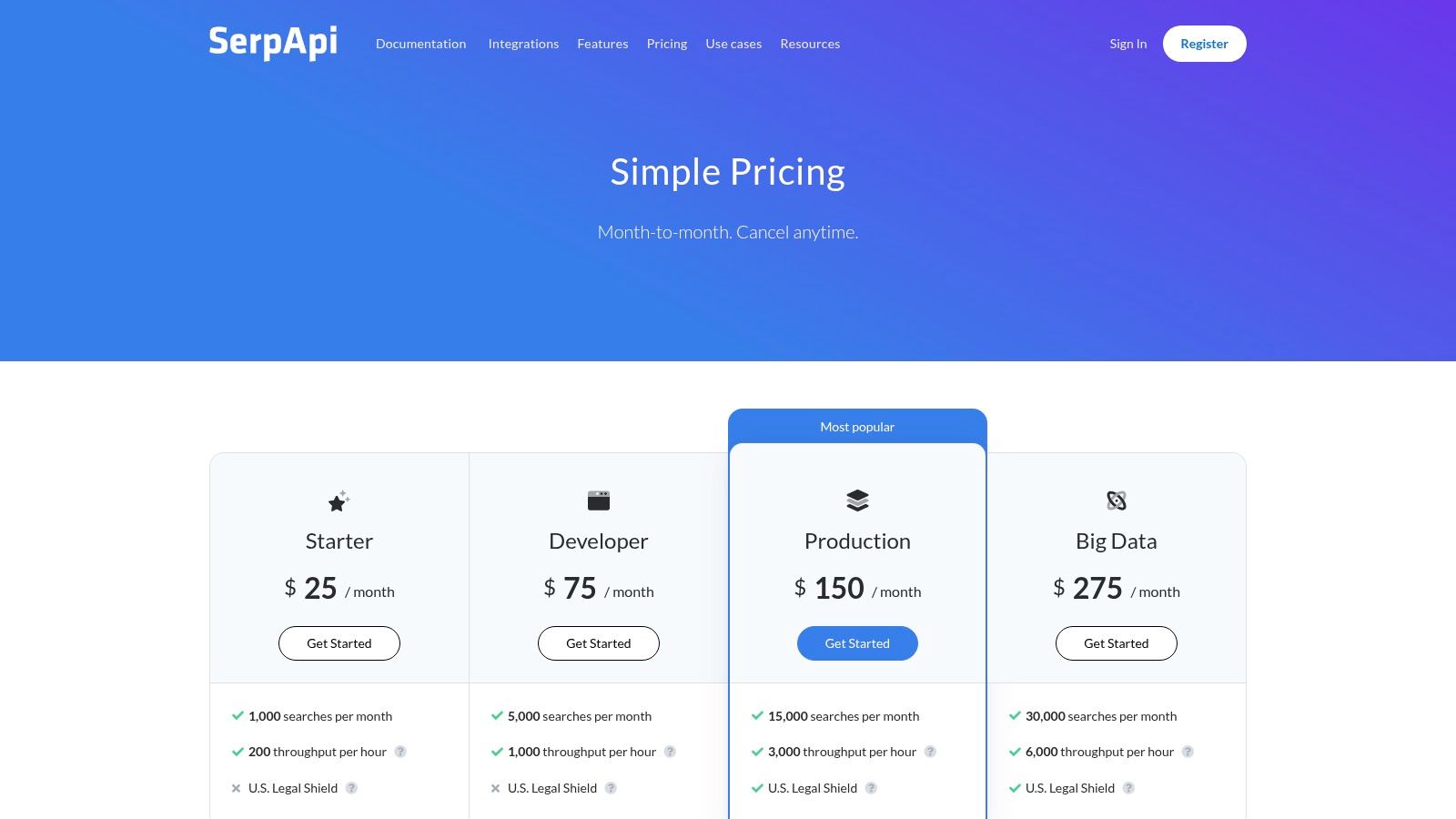

11. SerpApi

SerpApi is a highly specialized website data extraction tool focused exclusively on search engine results pages (SERPs). It provides a real-time API that scrapes and parses search results from major engines like Google, delivering structured JSON data.

Caption: SerpApi offers tiered pricing based on the number of searches performed per month. (Source: SerpApi)

Key Features & Use Cases

SerpApi’s strength is handling the nuances of search engine scraping, such as localized results and CAPTCHA solving.

- Structured SERP Data: Automatically parses and returns clean JSON for organic results, ads, knowledge graphs, and local packs.

- Location and Device Targeting: Allows for precise geo-targeting and supports various devices.

- Legal Transparency: The company maintains a public legal status page regarding search engine scraping.

- Typical Use Cases: Perfect for rank tracking tools, competitor analysis, and paid search intelligence.
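To show what location and device targeting look like in practice, the sketch below builds a SerpApi query URL and pulls a few fields out of the parsed response. It assumes the `search.json` endpoint with `engine`, `q`, `location`, `device`, and `api_key` parameters, and an `organic_results` array whose items carry `position`, `title`, and `link`; the helper names are hypothetical, and the sample response in the test is made up.

```python
from typing import Optional
from urllib.parse import urlencode


def serpapi_url(query: str, api_key: str, location: Optional[str] = None, device: str = "desktop") -> str:
    """Build a Google search request URL with optional geo and device targeting."""
    params = {"engine": "google", "q": query, "device": device, "api_key": api_key}
    if location:
        params["location"] = location  # e.g. "Austin, Texas, United States"
    return "https://serpapi.com/search.json?" + urlencode(params)


def top_organic(serp: dict, n: int = 3) -> list:
    """Return (position, title, link) for the first n organic results."""
    return [
        (r.get("position"), r.get("title"), r.get("link"))
        for r in serp.get("organic_results", [])[:n]
    ]
```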

Pricing & Limitations

SerpApi offers clear, tiered monthly plans based on the number of successful searches. While its specialization is a major strength, it is also its primary limitation; the tool is not designed for scraping arbitrary websites.

- Pros: Highly reliable and accurate for SERP data, simple API, and predictable pricing.

- Cons: Strictly limited to search engines and can become expensive at a massive scale.

Website: https://serpapi.com/

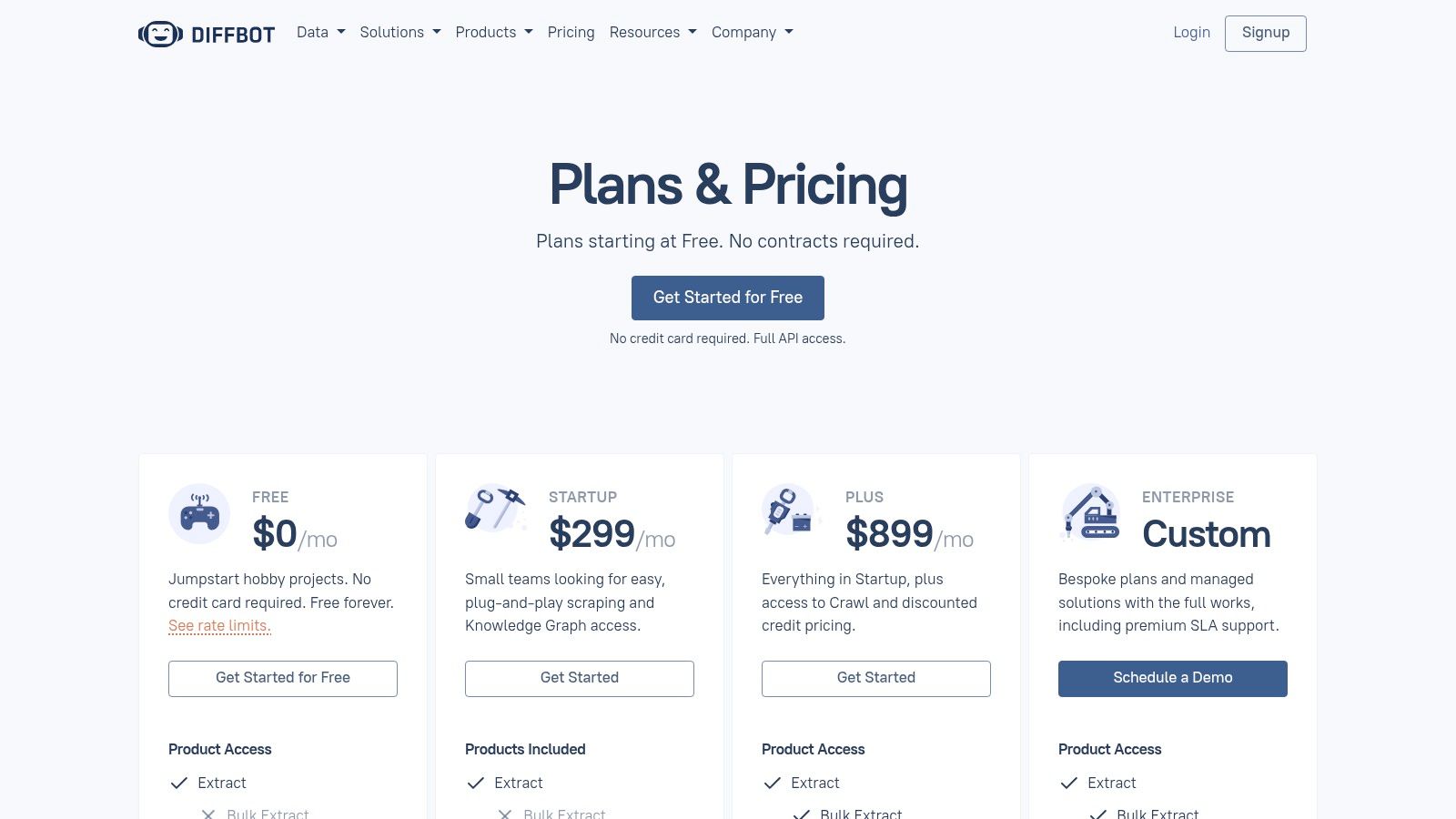

12. Diffbot

Diffbot positions itself as an AI-powered data extraction platform, designed for teams that need structured data without building custom parsers. It uses machine learning to automatically understand web pages and convert them into clean, organized JSON.

Caption: Diffbot uses a credit-based pricing model for its automatic extraction APIs and Knowledge Graph. (Source: Diffbot)

Key Features & Use Cases

Diffbot combines page-level extraction with its extensive Knowledge Graph, allowing users to scrape raw data and enrich it with contextual information.

- Automatic Extraction APIs: Requires no site-specific rules; it automatically parses articles, products, and more into structured formats.

- Crawlbot: Enables users to crawl entire websites to build a structured database.

- Knowledge Graph: Provides access to a pre-built graph of web entities for data enrichment.

- Typical Use Cases: Market intelligence, news monitoring, and sourcing machine learning training data.
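Because the Automatic Extraction APIs need no site-specific rules, a call is just a URL plus a token. The sketch below assumes Diffbot's v3 endpoints (`article`, `product`, and the page-type-detecting `analyze`) and a response whose extracted items sit in an `objects` array; the helper names are hypothetical, and the sample in the test is made up.

```python
from urllib.parse import urlencode

DIFFBOT_APIS = {"article", "product", "analyze"}


def diffbot_url(api: str, page_url: str, token: str) -> str:
    """Build an Extract API request URL for one of the supported page types."""
    if api not in DIFFBOT_APIS:
        raise ValueError(f"unsupported API: {api}")
    return f"https://api.diffbot.com/v3/{api}?" + urlencode({"token": token, "url": page_url})


def summarize_objects(resp: dict, max_chars: int = 200) -> list:
    """Pick type, title, and a text preview from each extracted object."""
    return [
        {"type": o.get("type"), "title": o.get("title"), "text": (o.get("text") or "")[:max_chars]}
        for o in resp.get("objects", [])
    ]
```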

Pricing & Limitations

Diffbot operates on a credit-based model. The pricing can become complex when scaling, as different API calls consume varying amounts of credits.

- Pros: Powerful AI-driven automatic extraction and a free tier for development.

- Cons: The credit-based pricing can be difficult to predict for large projects.

Website: https://www.diffbot.com/pricing/

Top 12 Web Data Extraction Tools Comparison

| Product | Core features | Key benefits | Pricing & value | Best for |
|---|---|---|---|---|
| CrawlKit | Unified API for web, social & app platforms (LinkedIn, App Store, SERPs, screenshots); validated JSON; SDKs | Developer-first; no scraping infrastructure; proxies/anti-bot abstracted; start free | Credit-based PAYG; 100 free credits; credits never expire; refunds on failures | AI teams, backend engineers, RAG/fine-tuning, CRM enrichment, production workflows |
| Zyte | Integrated proxy/IP rotation; JS rendering; automatic extraction add-ons | Auto-selects unblocking stack; charged per-success; enterprise controls | Tiered pay-per-success; clear per-1,000 pricing; extra fees for advanced features | Teams wanting managed rendering and per-success billing |
| Apify | Serverless Actors, large marketplace of prebuilt scrapers; proxy add-ons | Fast time-to-value via marketplace; flexible scaling | Compute-unit pricing; transparent but variable cost by Actor usage; tiered plans | Teams needing ready-made scrapers and managed runs |
| Bright Data | Large proxy pool (residential/mobile/ISP); managed scraping; Web Unlocker | Enterprise-grade tooling; managed services; strong compliance posture | Per-request pricing with monthly minimums; generally pricier | Enterprises needing large-scale, compliant data collection |
| Oxylabs | Premium global proxies; Web Scraper API with target-specific endpoints | Scales to very high volumes; robust geo-targeting & unblocking | Pay-per-success with target/rendering rates; nuanced pricing | High-volume collectors requiring geo-targeting and reliability |
| ScrapeHero | Done-for-you extraction services; API delivery; managed infra & QA | Outsourced crawler ops; clear scoping and delivery SLAs | Per-site / on-demand / monthly pricing; cost grows with sources | Teams that want white-glove data delivery without building scrapers |
| ParseHub | Visual project builder (desktop-first) + cloud scheduling; IP rotation | No-code, gentle learning curve; good for ad-hoc jobs | Paid tiers for cloud runs; pricing scales with pages/runs | Analysts/marketers and non-developers needing point-and-click scraping |
| Octoparse | No-code visual workflows; concurrent runs; proxy & CAPTCHA add-ons | Templates and anti-blocking ease ramp-up; optional managed services | Multiple plans and discounts; add-on costs | Teams wanting a no-code platform with optional white-glove help |
| Web Scraper | Free browser extension + cloud scheduling; URL credit model | Very low barrier to entry; straightforward URL-credit pricing | URL-credit based cloud plans; clear tiers and trials | Quick starts and light-weight extractions |
| ScraperAPI | Plug-and-play API for proxies and rendering; optional JS rendering | Lets dev teams keep parsers while offloading unblocking | Credit/plan model with concurrency limits; regional limits on low tiers | Developers maintaining custom parsers who need robust unblocking |
| SerpApi | Purpose-built SERP APIs with location/device params; structured JSON | Mature, high-throughput SERP coverage; rich SERP fields | Monthly tiers with generous throughput; costs scale with volume | SEO, pricing intelligence, and retrieval tasks focused on SERPs |
| Diffbot | AI Extract APIs, automatic site crawling; Knowledge Graph for enrichment | Automatic structured extraction + entity-level enrichment | Credits-based with free tier; pricing can rise for large crawls | Teams wanting turnkey extraction plus entity enrichment |

Final Thoughts: Integrating Data Extraction Into Your Workflow

Navigating the landscape of website data extraction tools can feel complex, but the right solution can transform raw web data into a strategic asset. The key takeaway is that the "best" tool aligns with your project's specific needs, technical resources, and scalability goals.

Your choice ultimately hinges on a trade-off between control, convenience, and cost. Do you need a simple, reliable API to handle proxy rotation and JavaScript rendering, or do you require a full-scale infrastructure for massive, enterprise-level crawls? Answering this question is the first step toward integrating an effective data extraction pipeline into your workflow.

Making the Right Choice: A Practical Framework

Selecting from the many available website data extraction tools requires a clear assessment of your objectives. For developers building applications that rely on structured data from diverse sources like LinkedIn, Google, or app stores, a unified, API-first platform like CrawlKit offers a streamlined path, abstracting away tedious infrastructure management.

Conversely, if your team lacks engineering resources, a visual tool like ParseHub can provide an accessible entry point. For large-scale projects where data quality is paramount, investing in a comprehensive platform like Bright Data or outsourcing to a service like ScrapeHero might yield a better return. Always start with a free trial to validate that a tool can access your target websites before committing.

Key Factors to Consider Before You Start

- Data Structure and Quality: A tool that delivers clean, structured JSON reduces your post-processing workload.

- Total Cost of Ownership: This includes subscription fees plus developer hours spent on implementation and maintenance.

- Ethical and Legal Framework: Respect robots.txt files, avoid overloading servers, and ensure compliance with regulations like GDPR and a website's terms of service.
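Respecting robots.txt can be automated with Python's built-in `urllib.robotparser`. In this minimal sketch the robots.txt text is inlined and illustrative; in practice you would download it from the site's `/robots.txt` first. The helper names and the `example-bot` user agent are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; normally fetched from https://<site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def check(parser: RobotFileParser, user_agent: str, url: str) -> tuple:
    """Return (allowed, crawl delay in seconds or None) for a URL."""
    return parser.can_fetch(user_agent, url), parser.crawl_delay(user_agent)


rules = load_rules(ROBOTS_TXT)
```

Honoring the returned crawl delay between requests is a simple way to act on the "avoid overloading servers" point as well.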

From Data to Action

Once you have a reliable data pipeline, the possibilities are vast. You can fuel machine learning models, monitor competitor pricing, or enhance your product with unique datasets. You can even explore advanced techniques like programmatic SEO to scale your content efforts and turn your extracted data into a powerful growth engine.

Frequently Asked Questions

1. What are website data extraction tools?

Website data extraction tools are software or services that automate the process of collecting data from websites. They can range from simple browser extensions and no-code visual interfaces to powerful developer APIs that handle complex tasks like JavaScript rendering, proxy rotation, and CAPTCHA solving to return structured data like JSON or CSV.

2. Is it legal to extract data from a website?

The legality of web scraping is nuanced and depends on the data being collected, the methods used, and jurisdiction. It is generally legal to scrape publicly available data, but you must comply with a website's Terms of Service, robots.txt file, and data privacy regulations like GDPR and CCPA. Avoid scraping personal data or copyrighted content without permission. Consulting with a legal professional is always recommended.

3. What's the difference between a web scraping API and a no-code tool?

A web scraping API (like CrawlKit or ScraperAPI) is designed for developers. It provides programmatic access to scraping infrastructure, allowing you to integrate data extraction into your applications. A no-code tool (like ParseHub or Octoparse) offers a visual interface where users can point and click to select data, requiring no programming knowledge.

4. Why do I need proxies for web data extraction?

Websites often block or serve misleading information to IP addresses that make too many requests in a short period, a common sign of a scraper. Proxies route your requests through different IP addresses, making it appear as if the requests are coming from multiple users. This helps avoid IP bans and ensures you can collect data reliably and at scale.
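Round-robin rotation is the simplest way to spread requests across IP addresses. The sketch below cycles through a list of proxy URLs with `itertools.cycle` and builds a standard-library opener routed through each one. The proxy hosts and credentials are hypothetical placeholders; the managed APIs in this list handle rotation for you, so this only matters if you run your own proxy pool.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; substitute your provider's gateway URLs.
PROXIES = [
    "http://user:pass@proxy1.example.net:8000",
    "http://user:pass@proxy2.example.net:8000",
    "http://user:pass@proxy3.example.net:8000",
]

_rotation = itertools.cycle(PROXIES)


def next_opener() -> tuple:
    """Return the next proxy in round-robin order and an opener routed through it."""
    proxy = next(_rotation)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)
```

Usage: `proxy, opener = next_opener()` followed by `opener.open(url, timeout=30)` sends each request through a different address in turn.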

5. Can these tools extract data from sites that require a login?

Some advanced website data extraction tools can handle authenticated sessions. This typically involves passing session cookies or other credentials with your API requests. However, this is more complex and raises additional legal and ethical considerations, as you are accessing non-public data.
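At the HTTP level, reusing an authenticated session usually means sending the cookies a logged-in browser already holds. This minimal sketch serializes cookies into a `Cookie` header on a standard-library request; the cookie names (`sessionid`, `csrftoken`) are hypothetical and vary by site. Treat session cookies as secrets, and only use them for accounts and data you are authorized to access.

```python
import urllib.request


def cookie_header(cookies: dict) -> str:
    """Serialize name/value pairs into a Cookie header value."""
    return "; ".join(f"{name}={value}" for name, value in cookies.items())


def build_session_request(url: str, cookies: dict) -> urllib.request.Request:
    """Build a GET request that reuses an existing logged-in session."""
    return urllib.request.Request(url, headers={"Cookie": cookie_header(cookies)})
```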

6. How do I choose the right website data extraction tool for my project?

Consider these factors:

- Technical Skill: Are you a developer who needs an API, or do you prefer a no-code visual tool?

- Target Websites: Are they simple static sites or complex, JavaScript-heavy applications with anti-bot measures?

- Scale: Do you need to scrape a few hundred pages or millions?

- Budget: Are you looking for a free tool for a small project or an enterprise-grade solution?

- Data Format: Do you need raw HTML or structured JSON output?

7. What is JavaScript rendering and why is it important for data extraction?

Many modern websites use JavaScript to load content dynamically after the initial page loads. A simple HTTP request will only fetch the initial HTML source, missing the dynamic content. JavaScript rendering involves using a real browser (often a "headless" one) to load the page fully, execute all scripts, and render the final content, ensuring you can extract all visible data.
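The difference is easy to see offline. In the sketch below, `STATIC_HTML` stands in for what a plain HTTP request might return from a client-rendered page, and `RENDERED_HTML` for the DOM after a headless browser has run the scripts; both snippets are illustrative, not captured from a real site. The same standard-library parser finds no products in the first and two in the second.

```python
from html.parser import HTMLParser

# Illustrative: initial HTML from a plain GET, before app.js fills the list.
STATIC_HTML = """<html><body>
<div id="products"></div>
<script src="/app.js"></script>
</body></html>"""

# Illustrative: the same page after a headless browser executed app.js.
RENDERED_HTML = """<html><body>
<div id="products"><div class="product">Widget</div><div class="product">Gadget</div></div>
</body></html>"""


class ProductCounter(HTMLParser):
    """Count <div class="product"> elements."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "div" and ("class", "product") in attrs:
            self.count += 1


def count_products(html: str) -> int:
    parser = ProductCounter()
    parser.feed(html)
    parser.close()
    return parser.count
```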

8. What is the best website data extraction tool for developers?

For developers, an API-first platform like CrawlKit is often the best choice. It provides a unified API for various data sources (web, social, apps), handles all infrastructure like proxies and browsers, and returns clean, structured JSON, significantly speeding up development time.

Next Steps

Ready to skip the infrastructure and start building? CrawlKit provides a developer-first API platform to scrape and extract structured data from any website, including LinkedIn, app stores, and more. Try our Playground, read our docs, or start free to get clean JSON data in minutes.