Meta Title: How to Scrape LinkedIn Data: A Developer's Guide (2024)

Meta Description: Learn how to scrape LinkedIn data using APIs or DIY methods. This practical guide covers legal considerations, anti-bot bypass, and scaling your scraper.

Looking for a reliable way to scrape LinkedIn data? When developers tackle this, they quickly realize it's a choice between two paths: building a custom scraper from scratch or leveraging a third-party API designed for the job. This guide will walk you through both.

Going the DIY route gives you absolute control, but it also means you're on the hook for managing proxies, dealing with anti-bot measures, and keeping everything updated. On the other hand, a developer-first, API-first platform handles all that heavy lifting, delivering reliable data so you can focus on building your application.

Table of Contents

- Why Scrape LinkedIn Data and Is It Legal?

- Comparing Scraping Options: DIY vs. API

- How to Navigate LinkedIn's Anti-Scraping Defenses

- Architecting Your Scraper for Clean, Structured Data

- How to Scale Your LinkedIn Scraping Operations

- Frequently Asked Questions

- Next Steps

Why Scrape LinkedIn Data and Is It Legal?

LinkedIn is a massive, living database of professional contacts. It's an incredible source for everything from lead generation and market research to talent acquisition. For a developer, tapping into this data programmatically can unlock powerful automation, like enriching CRM records or tracking industry hiring trends.

But let's be clear: this isn't a walk in the park. LinkedIn has serious defenses to prevent automated access. This guide isn't formal legal advice, but it's a developer-to-developer look at the guardrails you need to respect.

Caption: Balancing the need for data with the ethical and technical challenges of DIY scraping versus using an API. Source: CrawlKit


The Legal and Ethical Landscape

The conversation boils down to one critical distinction: publicly available data versus private data. Public data is what anyone can see without logging in—a company's public page, a job posting, or a profile visible to the internet.

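LinkedIn's User Agreement explicitly forbids any kind of automated access or scraping. In a landmark U.S. case, hiQ Labs v. LinkedIn, the Ninth Circuit indicated that scraping publicly accessible data likely doesn't violate the Computer Fraud and Abuse Act (CFAA). That doesn't stop LinkedIn from banning your accounts and IP addresses, though, and the same litigation later ended with a finding that hiQ had breached the User Agreement, so contract claims remain a real risk.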

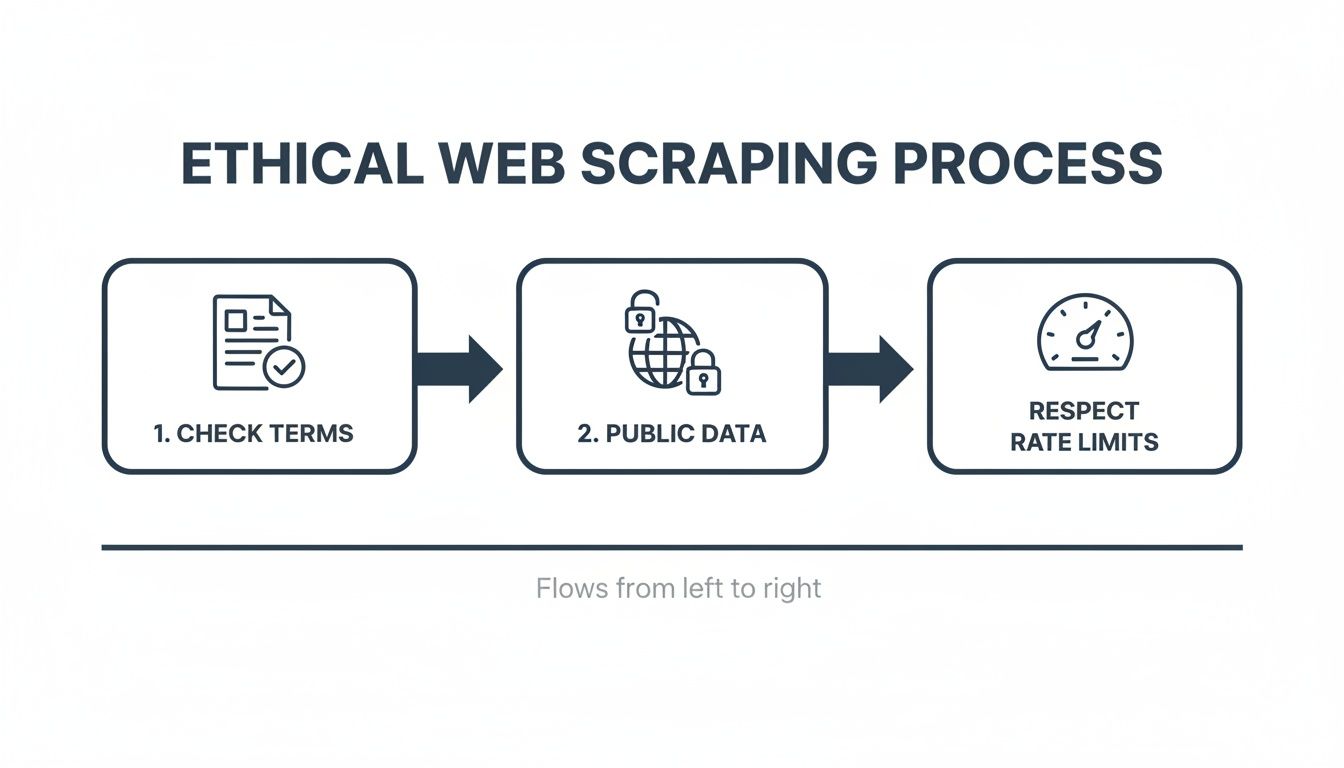

Here’s a simple checklist to keep your scraping ethical and above board:

- Public Data Only: If you can't see it in an incognito browser window without logging in, don't scrape it.

- Respect Server Load: Don't blast their servers with thousands of requests a minute. Implement sane rate limits and delays.

- Identify Yourself: Use a clear `User-Agent` string in your request headers.

- Check `robots.txt`: While not legally binding, respecting a site's `robots.txt` is a professional courtesy.

For a deeper dive into how we approach this for production systems, you can check out our acceptable use policy for web scraping. Sticking to public data and using respectful collection techniques is the only way to build a sustainable data pipeline.
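The rate-limit and `robots.txt` items in the checklist above can be sketched in a few lines of Node.js. This is a minimal illustration under stated assumptions, not a complete `robots.txt` implementation: `isPathAllowed` and `politeDelayMs` are hypothetical helper names, the parser only understands `User-agent`, `Allow`, and `Disallow` with plain prefix matching (no wildcards), and the default delay values are placeholders to tune.

```javascript
// Simplified robots.txt check (hypothetical helper): prefix matching only, no * or $ wildcards.
function isPathAllowed(robotsTxt, userAgent, path) {
  const ua = userAgent.toLowerCase();
  const groups = []; // each group: { agents: [...], rules: [{ allow, prefix }] }
  let current = null;
  let lastWasAgent = false;

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split('#')[0].trim(); // strip comments
    const sep = line.indexOf(':');
    if (sep === -1) continue;
    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();

    if (field === 'user-agent') {
      // Consecutive User-agent lines share one group of rules.
      if (!lastWasAgent) {
        current = { agents: [], rules: [] };
        groups.push(current);
      }
      current.agents.push(value.toLowerCase());
      lastWasAgent = true;
    } else if ((field === 'allow' || field === 'disallow') && current) {
      // An empty Disallow means "nothing is disallowed", so it adds no rule.
      if (value !== '') current.rules.push({ allow: field === 'allow', prefix: value });
      lastWasAgent = false;
    } else {
      lastWasAgent = false;
    }
  }

  // Prefer groups that name this agent; otherwise fall back to the "*" groups.
  const specific = groups.filter(g => g.agents.some(a => a && a !== '*' && ua.includes(a)));
  const chosen = specific.length > 0 ? specific : groups.filter(g => g.agents.includes('*'));

  // Longest matching prefix wins; no matching rule means the path is allowed.
  let best = null;
  for (const rule of chosen.flatMap(g => g.rules)) {
    if (path.startsWith(rule.prefix) && (!best || rule.prefix.length > best.prefix.length)) {
      best = rule;
    }
  }
  return best ? best.allow : true;
}

// Randomized delay between requests so traffic is not perfectly periodic.
// The defaults are assumptions, not recommended values.
function politeDelayMs(baseMs = 3000, jitterMs = 2000) {
  return baseMs + Math.floor(Math.random() * jitterMs);
}
```

In a crawl loop you would fetch the site's `robots.txt` once, call `isPathAllowed` before each request, and wait `politeDelayMs()` milliseconds between requests.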

Comparing Scraping Options: DIY vs. API

When it's time to decide how to pull this data, you're making a choice that will affect your project's timeline, budget, and maintenance workload.

Do-It-Yourself (DIY) Scraping: This means using libraries like Puppeteer or Selenium to build your own scraper. You control everything, but you're also responsible for everything—proxies, headless browsers, solving CAPTCHAs, and constant maintenance. It's a huge engineering commitment.

API-First Platforms: A service like CrawlKit takes a different approach. As a developer-first web data platform, it manages all the scraping infrastructure for you. The messy parts like proxies and anti-bot systems are abstracted away. You just make a simple API call and get clean JSON data back.

Caption: The choice between building a scraper yourself and using an API comes down to a trade-off between control and convenience. Source: CrawlKit

To make the choice clearer, let's break down the trade-offs.

| Factor | DIY Scraping (e.g., Puppeteer) | API-Based Scraping (e.g., CrawlKit) |
|---|---|---|
| Time to First Data | Weeks to months | Hours to days |
| Initial Cost | High (engineering salaries) | Low (pay-as-you-go, start free) |
| Ongoing Maintenance | Constant (adapting to site changes) | Handled by the provider |
| Scalability | Complex (managing proxy pools) | Built-in and managed |
| Reliability | Variable (depends on infrastructure) | High (backed by an SLA) |

A DIY solution only makes sense if web scraping is a core part of your business and you have the engineering resources to dedicate to it. For most other projects, an API is a more practical and scalable path.

How to Navigate LinkedIn's Anti-Scraping Defenses

Successfully pulling data comes down to outsmarting the platform's sophisticated anti-bot systems. This is where most custom scripts fail, getting hit with login prompts, CAPTCHAs, or IP blocks.

LinkedIn doesn't publish how its detection works, but in practice it behaves as if every visitor is assigned a running "fraud score" based on signals like:

- IP Reputation and Proxies: Requests from data center IPs are immediately suspicious. You need to route your traffic through residential or mobile proxies. This often requires you to build a proxy server or use a service that handles rotation.

- Request Headers and User-Agents: Your scraper must send headers that mimic a legitimate, modern web browser. A generic or missing `User-Agent` is an instant red flag.

- Behavioral Analysis: LinkedIn watches your navigation flow. A script that hits profile URLs directly without a referring page is behaving unnaturally.
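The proxy rotation mentioned in the list above can be sketched as a small round-robin pool that rests any proxy that was recently blocked. This is an illustrative sketch, not a production rotator: the `ProxyPool` class, its method names, and the 60-second cooldown are all assumptions.

```javascript
// Hypothetical round-robin proxy pool with a cooldown for proxies that were blocked.
class ProxyPool {
  constructor(proxyUrls, cooldownMs = 60_000) {
    if (proxyUrls.length === 0) throw new Error('ProxyPool needs at least one proxy');
    this.proxies = proxyUrls.map(url => ({ url, blockedUntil: 0 }));
    this.cooldownMs = cooldownMs;
    this.cursor = 0;
  }

  // Returns the next proxy that is not cooling down, or null if all are resting.
  next(now = Date.now()) {
    for (let i = 0; i < this.proxies.length; i++) {
      const proxy = this.proxies[(this.cursor + i) % this.proxies.length];
      if (proxy.blockedUntil <= now) {
        this.cursor = (this.cursor + i + 1) % this.proxies.length;
        return proxy.url;
      }
    }
    return null;
  }

  // Call this when a request through `url` hit a CAPTCHA, login wall, or IP block.
  reportBlocked(url, now = Date.now()) {
    const proxy = this.proxies.find(p => p.url === url);
    if (proxy) proxy.blockedUntil = now + this.cooldownMs;
  }
}
```

The `now` parameter exists only to make the pool easy to test; in normal use you would call `pool.next()` before each request and `pool.reportBlocked(url)` whenever a response looks like a block.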

Caption: A sustainable scraping strategy involves respecting terms of service, focusing on public data, and managing request rates. Source: CrawlKit


Here’s a basic cURL example showing how to set a custom User-Agent and use a proxy:

```bash
curl "https://www.linkedin.com/in/williamhgates" \
  -H "User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36" \
  --proxy http://your_proxy_address:port
```

This simple command spoofs a common browser and routes the request through your proxy.
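Because custom scripts tend to fail with login prompts, CAPTCHAs, or IP blocks rather than with clean errors, it helps to classify each response before parsing it. The sketch below is one way to do that in Node.js. The function name `classifyResponse` is hypothetical, and the status codes and URL paths it checks (such as a non-standard 999 status or an `/authwall` redirect) are assumptions drawn from commonly reported behavior, not from any documented LinkedIn contract.

```javascript
// Hypothetical classifier: decides whether a response looks like a block rather than real content.
// The status codes and URL paths below are assumptions and may change without notice.
function classifyResponse(status, finalUrl) {
  const path = new URL(finalUrl).pathname.toLowerCase();

  if (status === 429) return 'rate_limited';
  if (status === 999 || status === 403) return 'blocked';
  if (path.startsWith('/authwall') || path.startsWith('/login') || path.startsWith('/uas/login')) {
    return 'login_wall';
  }
  if (path.startsWith('/checkpoint')) return 'challenge';
  if (status >= 200 && status < 300) return 'ok';
  return 'error';
}
```

With the built-in `fetch`, you would pass `response.status` and `response.url` (the URL after redirects) and only hand the body to your parser when the result is `'ok'`.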

The API Approach: An Abstraction Layer

While DIY techniques are possible for small-scale projects, they create a massive maintenance burden. This is where an API-first platform like CrawlKit completely changes the game.

Instead of managing complex scraping infrastructure, you just make a single API call. CrawlKit handles all the anti-bot mitigation behind the scenes, abstracting away proxies and CAPTCHA solving so you can focus on the data itself. For a deeper dive into these principles, check out our guide on web scraping best practices.

Architecting Your Scraper for Clean, Structured Data

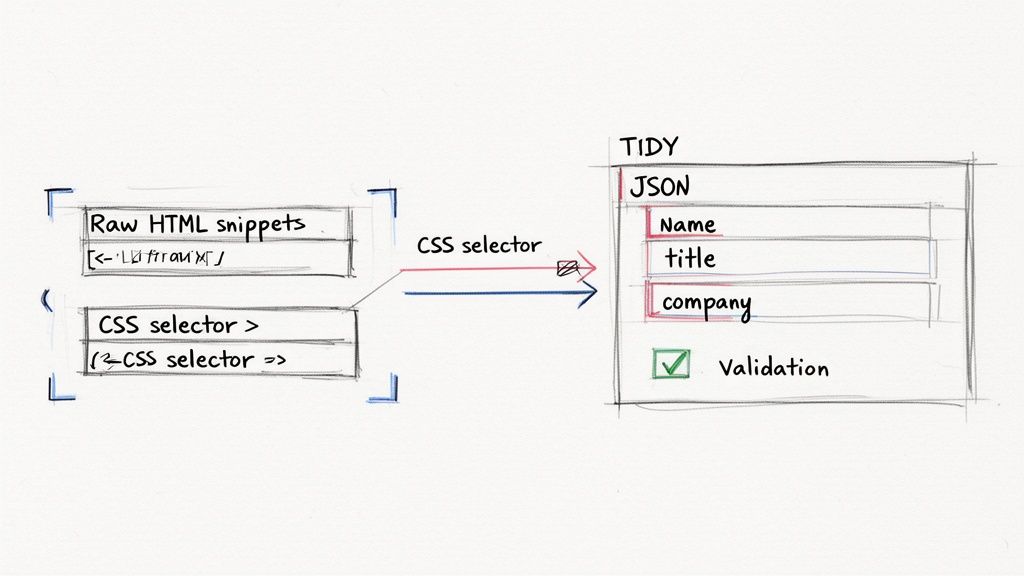

Getting the raw HTML is just the first step. The real craft in learning how to scrape LinkedIn data is transforming that chaos into clean, structured JSON. This requires a thoughtful architecture.

Caption: The process of parsing HTML, targeting data with selectors, and structuring it into clean JSON is central to any scraping project. Source: CrawlKit


Targeting Data with CSS Selectors

The most common way to target data within an HTML document is with CSS selectors. A good selector is specific enough to grab the right element but resilient enough not to break when LinkedIn's frontend updates. You'll spend a lot of time in your browser's DevTools inspecting elements to find these selectors.
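One way to make selectors more resilient is to keep an ordered list of candidates and take the first one that matches. The sketch below shows that pattern. `extractFirst` is a hypothetical helper, and every selector in `HEADLINE_SELECTORS` is a made-up placeholder: the real ones have to come from your own DevTools inspection.

```javascript
// Try selectors in order of preference and return the first non-empty text match.
// `queryText` is injected so the same logic works with the browser DOM, Puppeteer, or an HTML parser.
function extractFirst(queryText, selectors) {
  for (const selector of selectors) {
    const text = queryText(selector);
    if (typeof text === 'string' && text.trim() !== '') {
      return { value: text.trim(), matchedSelector: selector };
    }
  }
  return { value: null, matchedSelector: null };
}

// Hypothetical selectors, ordered from most specific to most generic.
const HEADLINE_SELECTORS = [
  '[data-test-id="profile-headline"]', // hypothetical stable hook
  'section.top-card h2',               // hypothetical structural fallback
  'h2',                                // last resort
];
```

In a browser context the call would look like `extractFirst(sel => document.querySelector(sel)?.textContent, HEADLINE_SELECTORS)`. Logging `matchedSelector` tells you when the preferred selector has stopped matching, which is an early warning that the page layout changed.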

Defining a Clear Data Schema

Before writing any parsing code, map out your desired output. A JSON schema is your blueprint, ensuring every record is consistent and clean. A well-designed schema for a profile should be logical and nested.

```json
{
  "profileUrl": "https://www.linkedin.com/in/example-profile",
  "fullName": "Jane Doe",
  "headline": "Senior Software Engineer at Tech Corp",
  "location": "San Francisco Bay Area",
  "currentCompany": {
    "name": "Tech Corp",
    "linkedinUrl": "https://www.linkedin.com/company/tech-corp"
  },
  "experience": [
    {
      "title": "Senior Software Engineer",
      "company": "Tech Corp",
      "duration": "Jan 2022 – Present"
    }
  ]
}
```

This structure is predictable and easy to work with. For developers thinking about what happens after extraction, understanding how to build data pipelines is a great next step.
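A schema only keeps records consistent if something enforces it. As a rough sketch, a plain JavaScript validator for the profile shape above could look like this. The function name `validateProfile` is hypothetical, and which fields count as required (and that `currentCompany` is optional) are assumptions rather than rules from any official schema.

```javascript
// Hypothetical validator for the profile shape above; returns a list of problems (empty means valid).
function validateProfile(record) {
  const errors = [];
  const isText = value => typeof value === 'string' && value.trim() !== '';

  // Assumption: these four top-level fields are required.
  for (const field of ['profileUrl', 'fullName', 'headline', 'location']) {
    if (!isText(record[field])) errors.push(`${field} must be a non-empty string`);
  }
  if (isText(record.profileUrl) && !record.profileUrl.startsWith('https://www.linkedin.com/in/')) {
    errors.push('profileUrl must start with https://www.linkedin.com/in/');
  }

  // Assumption: currentCompany is optional, but must be well-formed when present.
  if (record.currentCompany != null && !isText(record.currentCompany.name)) {
    errors.push('currentCompany.name must be a non-empty string');
  }

  if (!Array.isArray(record.experience)) {
    errors.push('experience must be an array');
  } else {
    record.experience.forEach((job, index) => {
      for (const field of ['title', 'company', 'duration']) {
        if (!isText(job?.[field])) {
          errors.push(`experience[${index}].${field} must be a non-empty string`);
        }
      }
    });
  }
  return errors;
}
```

Running every parsed record through a check like this before storage turns silent selector breakage into visible validation errors.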

The API-First Alternative

Building and maintaining parsing logic is a chore. Every time LinkedIn tweaks its frontend, your CSS selectors can break. A developer-first API like CrawlKit changes this.

Instead of writing custom parsing logic, you make a single API call. CrawlKit handles fetching the page, dealing with JavaScript, and parsing the content into a predefined, clean JSON schema. All the messy scraping infrastructure and maintenance is completely abstracted away.

This Node.js snippet shows how simple it is to get structured data for a person's profile:

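```javascript
// Top-level await requires an ES module (e.g., a .mjs file); built-in fetch requires Node.js 18+.
const response = await fetch('https://api.crawlkit.sh/v1/persons/scrape', {
  method: 'POST',
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({
    token: 'YOUR_API_TOKEN',
    url: 'https://www.linkedin.com/in/satyanadella'
  })
});

// Fail loudly on non-2xx responses instead of parsing an error body as data.
if (!response.ok) {
  throw new Error(`Request failed with status ${response.status}`);
}

const data = await response.json();
console.log(data);
```

You get structured JSON back without ever looking at HTML. You can start free and see results for yourself.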

How to Scale Your LinkedIn Scraping Operations

Pulling data from a few profiles is one thing; doing it for thousands is a completely different beast. At scale, you're not just managing HTTP requests; you're orchestrating proxy pools, dynamic rate limiting, and bulletproof error handling.

**Caption:** A technical overview of building scalable and resilient web scraping systems. **Source:** CrawlKit

The Reality of Scaling In-House

When you scale your own scraping operation, you're signing up for a serious engineering commitment:

- Proxy Management: Sourcing, testing, and rotating thousands of high-quality residential or mobile IPs.

- Concurrency and Rate Limiting: Building sophisticated job queues and throttling mechanisms to avoid getting banned.

- Error Handling and Retries: Creating smart logic to handle network hiccups, timeouts, and CAPTCHAs.

- Data Storage and Normalization: Building a solid pipeline for storing, cleaning, and validating the data firehose.
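To give a sense of the concurrency and retry pieces in the list above, here is a minimal Node.js sketch of a retry wrapper with exponential backoff and a fixed-size worker pool. It is deliberately simplified: `withRetries`, `runWithConcurrency`, and the default retry count and delays are hypothetical choices, and a real system would add persistent queues, per-domain throttling, and smarter error classification.

```javascript
const sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Retry an async task with exponential backoff plus random jitter.
async function withRetries(task, { retries = 3, baseDelayMs = 500 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await task();
    } catch (err) {
      if (attempt >= retries) throw err; // out of retries: surface the last error
      const delay = baseDelayMs * 2 ** attempt + Math.random() * baseDelayMs;
      await sleep(delay);
    }
  }
}

// Run task functions with at most `limit` in flight; results keep the input order.
async function runWithConcurrency(tasks, limit) {
  const results = new Array(tasks.length);
  let nextIndex = 0;

  async function worker() {
    while (nextIndex < tasks.length) {
      const index = nextIndex++;
      try {
        results[index] = { ok: true, value: await tasks[index]() };
      } catch (error) {
        results[index] = { ok: false, error }; // one failure does not stop the batch
      }
    }
  }

  const workerCount = Math.min(limit, tasks.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}
```

The two compose naturally: wrap each job as `() => withRetries(() => scrapeOne(url))`, where `scrapeOne` stands in for your own fetch-and-parse function, and pass the array to `runWithConcurrency` with a small limit.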

Caption: Building and maintaining scalable scraping infrastructure is a significant engineering challenge. Source: CrawlKit

This is a huge distraction from your actual product. You end up spending more time fighting anti-bot measures than analyzing the data.

The API-First Shortcut to Scalability

This is exactly where an API-first platform like CrawlKit comes in. It's a developer-first web data platform built to solve these scaling problems. All the painful parts—proxy management, anti-bot mitigation, and concurrency—are completely abstracted away. You don't have to touch any scraping infrastructure.

With CrawlKit, you can confidently make thousands of requests without worrying about getting blocked. You can start free, test requests in our playground, and get clean JSON integrated into your app in minutes. For a deeper dive into this model, check out some of the best web scraping APIs available.

Frequently Asked Questions

Is it legal to scrape data from LinkedIn?

While U.S. courts have indicated that scraping publicly available data likely doesn't violate the CFAA (hiQ Labs v. LinkedIn), it directly violates LinkedIn’s User Agreement. This means LinkedIn can ban your account and block your IP, and a breach-of-contract claim remains possible, as the later stages of the hiQ case showed. The safest path is to only scrape public information and never use an authenticated account.

Can I scrape LinkedIn Sales Navigator results?

Technically possible, but a very bad idea. Scraping Sales Navigator requires a paid login, and automating anything behind that login wall is a fast way to get permanently banned. LinkedIn's detection systems are extremely aggressive for authenticated sessions.

What is the best programming language for scraping LinkedIn?

Most developers use Python (with libraries like requests and BeautifulSoup) or Node.js (with Puppeteer or Playwright). However, when you use a scraping API like CrawlKit, the language doesn't matter. You're just making a simple HTTP request, so you can use cURL, Python, Go, Rust, or whatever you're comfortable with.

How many profiles can I scrape per day?

There's no magic number. For DIY scraping, a common rule of thumb is to stay under 80-100 profile views per day to mimic human behavior, but even that isn't a guarantee. Professional scraping APIs are built to solve this by managing requests across massive pools of proxies and browsers, allowing for much higher, reliable volumes.

Can I get email addresses from LinkedIn profiles?

Probably not directly. Email addresses are private data and are not visible on most public LinkedIn profiles. Any tool claiming to find emails from LinkedIn is almost certainly enriching the data on the back end by using external databases to find a corresponding email address, which is an enrichment step, not a scraping one.

What is a headless browser and why do I need it?

A headless browser is a web browser, like Chrome, that runs without a graphical user interface. It's essential for scraping modern sites like LinkedIn that rely on JavaScript to load dynamic content. Tools like Puppeteer let you control this browser programmatically, but it can be slow and memory-intensive. This is another complex piece that services like CrawlKit handle for you.

Why do my LinkedIn scrapers get blocked so easily?

LinkedIn uses a multi-layered defense system that analyzes IP reputation (data center vs. residential), request patterns, and user behavior. It also uses CAPTCHAs to challenge suspicious activity. Without a smart combination of high-quality residential proxies, realistic user agents, and human-like browsing patterns, most simple scripts are blocked almost immediately.

Should I use my personal LinkedIn account for scraping?

Absolutely not. This is the fastest way to get your personal account permanently banned. It directly violates the terms you agreed to and puts your entire professional network at risk. Always use methods that do not require you to be logged in.

Next Steps

Ready to skip the infrastructure and get straight to the data? CrawlKit abstracts away the complexity of proxies, anti-bot systems, and parsing.

- Try the API Playground: Make your first request in seconds

- Read the Docs: Explore the LinkedIn Person & Company Scraper APIs

- Web Scraping Best Practices: Learn how to build resilient scrapers