Meta Title: 10 Web Scraping Best Practices for Developers (2024 Guide)

Meta Description: Master web scraping best practices. Learn to handle proxies, JavaScript, rate limits, and anti-bot systems for reliable, ethical, and scalable data collection.

Building a powerful web scraper is one thing, but ensuring it's reliable, ethical, and scalable is another challenge entirely. Mastering web scraping best practices is what separates brittle, easily-blocked scripts from robust data pipelines that deliver consistent value. This guide moves beyond basic tutorials to offer a comprehensive roundup of the top ten practices every developer needs to know, from respecting target sites to architecting for massive scale.

We'll cover practical techniques that prevent IP bans, handle modern web complexities like JavaScript rendering, and ensure the data you collect is clean and accurate. Think of this as a field guide for building professional-grade web scrapers. The principles here are not just about avoiding blocks; they are about creating sustainable and maintainable data extraction systems. These practices align with broader development principles, much like how general Software Engineering Best Practices form the foundation for any robust application.

Table of Contents

- 1. Respect `robots.txt` and Terms of Service

- 2. Implement Intelligent Rate Limiting

- 3. Rotate User-Agents and HTTP Headers

- 4. Use Proxy Rotation

- 5. Handle JavaScript Rendering

- 6. Implement Robust Error Handling

- 7. Structure Data Correctly

- 8. Monitor and Log Operations

- 9. Implement Caching

- 10. Design for Scalability

- FAQ

1. Respect `robots.txt` and Site Terms of Service: The Ethical Foundation

Before writing a single line of code, your first stop should always be the target website's /robots.txt file and its Terms of Service. This isn't just a courtesy; it's the ethical foundation of responsible data collection and a cornerstone of sustainable web scraping best practices. Ignoring these guidelines can lead to IP bans, legal action, and damage to your reputation.

Understanding `robots.txt`

The robots.txt file is a plain text file at the root of a domain (e.g., example.com/robots.txt). It uses the Robots Exclusion Protocol (REP) to tell "user-agents" (web crawlers) which parts of the site they should not access. While not legally binding, reputable crawlers adhere to these rules.

A typical robots.txt file might look like this:

```
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /private/

User-agent: BadBot
Disallow: /
```

- `User-agent: *`: This rule applies to all bots.

- `Disallow: /admin/`: This directive blocks access to the `/admin/` directory.

Key Insight: The `robots.txt` file often reveals the site's structure and can hint at which endpoints are considered sensitive. It's a roadmap for what to avoid, protecting both your scraper and the website's infrastructure from unintentional strain.
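As a minimal sketch of how a scraper might honor these rules, Python's standard-library `urllib.robotparser` can evaluate a URL against a robots.txt policy. The policy text is the sample from this section, parsed from a string so the example runs offline; the `MyScraper` agent name and the helper names are placeholders, not part of any real crawler.

```python
from urllib.robotparser import RobotFileParser

# Sample policy from this section, parsed from a string so no network is needed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /private/

User-agent: BadBot
Disallow: /
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Create a parser from raw robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the policy lets `user_agent` fetch `url`."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
print(is_allowed(parser, "MyScraper", "https://example.com/products/1"))   # allowed path
print(is_allowed(parser, "MyScraper", "https://example.com/admin/users"))  # disallowed path
print(is_allowed(parser, "BadBot", "https://example.com/products/1"))      # agent blocked site-wide
```

Against a live site, the same parser can load the real file with `set_url(...)` followed by `read()` before any page is requested.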

Navigating Terms of Service (ToS)

The Terms of Service is a legal document outlining the rules for using the website. Look for clauses related to "automated access," "scraping," or "data mining." Many sites explicitly prohibit these activities. While the legal enforceability of ToS can be complex, violating them can lead to account termination or legal challenges. Respecting the ToS is a fundamental principle for any ethical web scraping project.

Caption: Respecting robots.txt and Terms of Service is the first step in responsible data collection.

Source: [Author's illustration]

2. Implement Intelligent Rate Limiting and Throttling

Sending too many requests in a short period is the fastest way to get your scraper blocked. Intelligent rate limiting is a crucial web scraping best practice that involves controlling your request frequency to avoid overwhelming the target server. This technique is a strategic necessity to prevent IP bans and ensure data collection reliability.

Understanding Rate Limiting

Rate limiting is the practice of setting a deliberate delay between consecutive requests. A more dynamic approach, throttling, adjusts the request rate based on the server's responses. A server under heavy load might respond with 429 Too Many Requests or 503 Service Unavailable. An intelligent scraper interprets these signals and automatically slows down, a process known as adaptive throttling. Exponential backoff, which multiplies the delay after each such response, is a common way to implement it.

Many sites specify a preferred crawl rate in their robots.txt using the Crawl-Delay directive:

```
User-agent: *
Disallow: /search
Crawl-Delay: 5
```

- `Crawl-Delay: 5`: This non-standard directive asks crawlers to wait 5 seconds between requests. Many crawlers honor it, though not all do (Googlebot, for example, ignores it).

Key Insight: Effective rate limiting isn't about crawling as slowly as possible; it's about crawling as fast as the server comfortably allows. Start with a conservative delay and dynamically adjust based on server feedback.

Implementing Dynamic Delays

The most robust strategies avoid predictable patterns. Instead of a fixed 2-second delay, use a randomized delay between 1.5 and 2.5 seconds. This "jitter" makes your scraper's traffic pattern appear more human-like. For large-scale jobs, distributing requests evenly across a 24-hour period is far more effective than a short, intense burst of traffic.
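The jitter and adaptive slowdown described above can be sketched in a few lines of Python. The function names, the 0.9 recovery factor, and the 1 to 60 second bounds are illustrative assumptions rather than recommended values.

```python
import random


def jittered_delay(base: float = 2.0, spread: float = 0.5) -> float:
    """Return a delay drawn uniformly from [base - spread, base + spread]."""
    return random.uniform(base - spread, base + spread)


def adjust_delay(current: float, status_code: int,
                 min_delay: float = 1.0, max_delay: float = 60.0) -> float:
    """Adapt the base delay to server feedback.

    Doubles the delay on 429/503 (capped at max_delay) and eases it back
    toward min_delay after a successful response. Other codes leave it as is.
    """
    if status_code in (429, 503):
        return min(current * 2, max_delay)
    if 200 <= status_code < 300:
        return max(current * 0.9, min_delay)
    return current
```

In a request loop, a scraper might call `time.sleep(jittered_delay(base))` before each request and then update `base = adjust_delay(base, response.status_code)`.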

Developer-first platforms like CrawlKit manage this automatically. The API handles proxies and anti-bot measures, abstracting away the complexity of adaptive throttling so you can focus on data extraction.

3. Rotate User-Agents and HTTP Headers

Beyond respecting site policies, your scraper's next challenge is to appear human. Sending identical requests repeatedly is a clear sign of a bot, making User-Agent and HTTP header rotation a critical part of web scraping best practices. This technique involves varying your scraper's digital fingerprint to mimic natural user traffic.

Understanding the User-Agent

The User-Agent string is an HTTP header that identifies the client (e.g., browser, application) making the request. A static User-Agent like python-requests/2.28.1 immediately flags your activity as automated. Rotating between a list of real browser User-Agents makes each request appear to come from a different user.

Here are a few common User-Agent strings:

```
# Chrome on Windows
Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36

# Firefox on macOS
Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:107.0) Gecko/20100101 Firefox/107.0

# Safari on iPhone
Mozilla/5.0 (iPhone; CPU iPhone OS 16_1_1 like Mac OS X) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Mobile/15E148 Safari/604.1
```

Key Insight: Simply rotating User-Agents is not enough. Advanced anti-bot systems check for consistency between the User-Agent and other HTTP headers.

Crafting Realistic HTTP Headers

A real browser sends a rich set of headers with every request. To avoid detection, your scraper should mimic these. Essential headers to include and rotate are Accept, Accept-Language, Accept-Encoding, and Referer. Failing to send these, or sending inconsistent combinations, is an easy giveaway.
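One way to avoid inconsistent combinations is to rotate whole header profiles instead of individual headers. The sketch below assumes two hand-written profiles; the `Accept` and `Accept-Language` values are illustrative rather than captured from real browsers, and `pick_headers` is a hypothetical helper.

```python
import random

# Each profile bundles a User-Agent with headers a browser of that type would
# plausibly send together. Values are illustrative, not captured traffic.
# Accept-Encoding omits "br" so the client never receives Brotli it cannot decode.
HEADER_PROFILES = [
    {
        "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                       "(KHTML, like Gecko) Chrome/108.0.0.0 Safari/537.36"),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.9",
        "Accept-Encoding": "gzip, deflate",
    },
    {
        "User-Agent": ("Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:107.0) "
                       "Gecko/20100101 Firefox/107.0"),
        "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
        "Accept-Language": "en-US,en;q=0.5",
        "Accept-Encoding": "gzip, deflate",
    },
]


def pick_headers(referer=None):
    """Return a copy of one randomly chosen profile, optionally with a Referer."""
    headers = dict(random.choice(HEADER_PROFILES))  # copy so the profile is not mutated
    if referer:
        headers["Referer"] = referer
    return headers
```

The returned dictionary can be passed as the `headers` argument of an HTTP client call, for example `requests.get(url, headers=pick_headers(referer=previous_url))`.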

Caption: Rotating headers makes each request appear unique, mimicking traffic from different browsers and devices. Source: [Author's illustration]

4. Use Proxy Rotation and IP Management

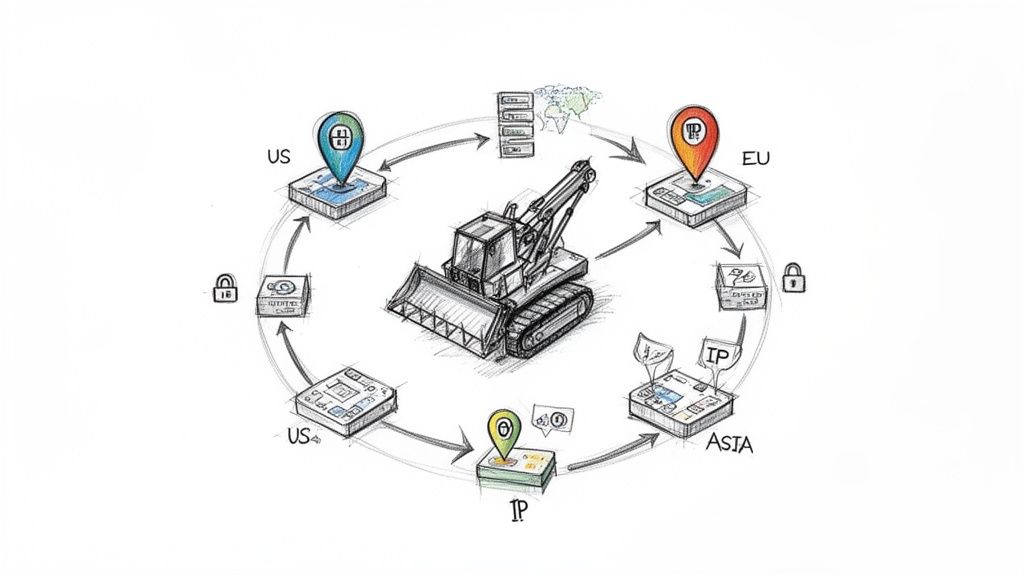

Relying on a single IP address for a large-scale scraping job is a direct path to getting blocked. Websites use IP-based rate limiting as a primary defense against automated traffic. Proxy rotation is an essential web scraping best practice that masks your origin and distributes requests across a pool of different IP addresses.

Caption: Proxy rotation distributes requests across multiple IPs, mimicking organic traffic from various locations.

Source: [Author's illustration]


Understanding Proxy Rotation

Proxy rotation involves channeling your web requests through an intermediary server (a proxy) that assigns a new IP address to each request. This technique prevents the target server from identifying and blocking a single, high-volume IP address. To learn more about this topic, check out this in-depth guide: Proxies Explained: Types, Benefits, and How to Use Nstproxy Like a Pro.

For developers who want to avoid the headache of managing infrastructure, a web scraping API often handles proxy and IP rotation automatically.

```python
# Example of using a proxy with Python's requests library
import requests

# A single proxy from your pool
proxy_url = 'http://user:password@proxy_host:proxy_port'

proxies = {
    'http': proxy_url,
    'https': proxy_url,
}

# The request is routed through the specified proxy
response = requests.get('https://example.com', proxies=proxies)

print(response.status_code)
```

Key Insight: Combining proxy rotation with User-Agent rotation and realistic request headers creates a synergistic effect. This multi-layered approach makes your scraper significantly harder to distinguish from legitimate human traffic.

Implementing Effective IP Management

Simply using proxies isn't enough; you need a strategy. This includes selecting the right type of proxy (datacenter, residential, or mobile) based on the target's sophistication. Residential proxies, for example, are real IP addresses from internet service providers, making them highly effective. Additionally, rotate IPs at a sensible frequency, adjusting based on the target site’s sensitivity.
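As a rough illustration of a rotation strategy, the sketch below cycles through a pool in round-robin order and skips proxies that have been marked as failing. `ProxyPool` and its methods are hypothetical names, and the proxy URLs in any real pool would come from your provider.

```python
import itertools


class ProxyPool:
    """Round-robin proxy pool that skips proxies marked as failed."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("proxy_urls must not be empty")
        self._proxies = list(proxy_urls)
        self._bad = set()
        self._cycle = itertools.cycle(self._proxies)

    def next_proxy(self):
        """Return the next healthy proxy in the dict shape `requests` expects."""
        # Look at each proxy at most once per call.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._bad:
                return {"http": proxy, "https": proxy}
        raise RuntimeError("no healthy proxies left")

    def mark_bad(self, proxy_url):
        """Exclude a proxy from rotation, e.g. after repeated blocks or timeouts."""
        self._bad.add(proxy_url)
```

A caller might use it as `requests.get(url, proxies=pool.next_proxy(), timeout=10)` and call `mark_bad` when a proxy keeps returning blocks. A production pool would usually also re-admit proxies after a cooldown, which this sketch leaves out.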

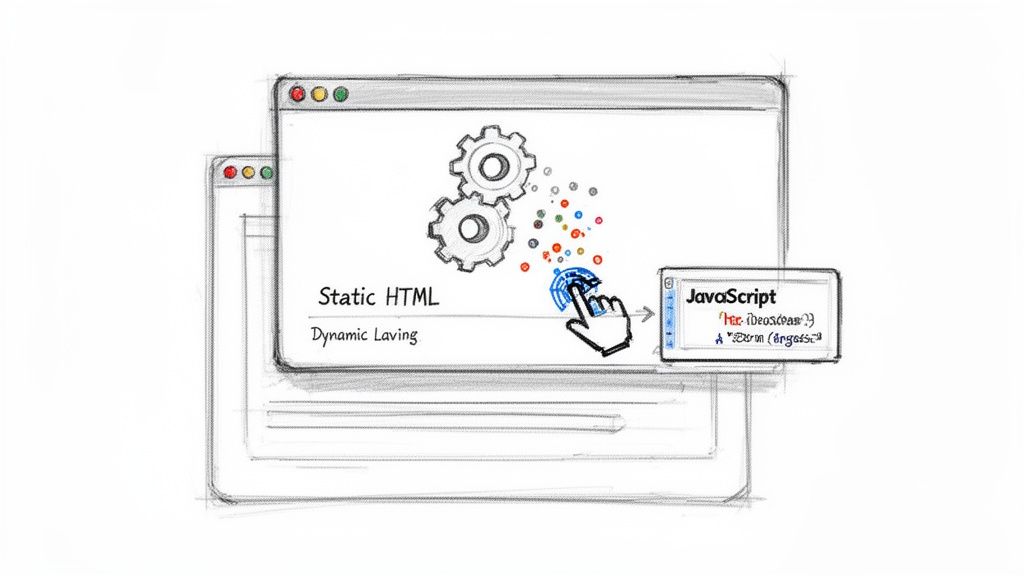

5. Handle JavaScript Rendering and Dynamic Content

Modern websites rely heavily on JavaScript to fetch and render content after the initial page load. A simple HTTP request will only return the initial, often incomplete, HTML skeleton. Mastering JavaScript rendering is an essential component of modern web scraping best practices.

Caption: Modern websites use JavaScript to load content dynamically, requiring scrapers to render the page like a browser.

Source: [Author's illustration]


Understanding the Challenge

When your scraper requests a URL, it receives raw HTML. On a dynamic site, this HTML might be a placeholder, with content like product prices or user comments loaded separately via JavaScript calls. To scrape this data, you must execute the JavaScript just as a real browser would. This is where headless browsers like Puppeteer or Playwright come in, allowing you to control a browser programmatically to get the fully rendered HTML.

Key Insight: Relying on headless browsers introduces significant performance and memory overhead. Instead of a fixed `sleep(5)`, use intelligent waiting strategies that wait for a specific element to appear (e.g., `waitForSelector('.product-price')`).
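The waiting strategy can be sketched with Playwright's Python sync API, where the equivalent call is `page.wait_for_selector`. This assumes the `playwright` package and its browsers are installed (`pip install playwright`, then `playwright install`). `fetch_rendered_html` is a hypothetical helper, and the `.product-price` selector is the example from above.

```python
def fetch_rendered_html(url: str, selector: str = ".product-price",
                        timeout_ms: int = 10_000) -> str:
    """Load a page in headless Chromium and return its HTML once `selector` appears."""
    # Imported lazily so this module still loads where Playwright is not installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            # Blocks until the element is visible (Playwright's default state),
            # or raises a timeout error after timeout_ms milliseconds.
            page.wait_for_selector(selector, timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

Launching a browser per page is the simplest form. Reusing one browser across many pages would reduce the overhead noted above, at the cost of more lifecycle management.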

Effective Rendering Strategies

While powerful, running a full browser for every page is resource-intensive. A better approach is to use a dedicated scraping API that manages this for you. For instance, CrawlKit is a developer-first web data platform that automatically detects if a page requires JavaScript and renders it, returning the complete HTML without you needing to manage any scraping infrastructure. For a deeper dive into practical implementation, you can explore how to handle web scraping with Python and integrate these techniques.

6. Implement Robust Error Handling and Retry Logic: Building a Resilient Scraper

Network failures, server timeouts, and temporary blocks are inevitable. A scraper that gives up after a single failed request is unreliable. Implementing robust error handling and intelligent retry logic is a critical web scraping best practice that transforms a brittle script into a resilient, production-grade data collection engine.

Designing a Smart Retry System

A naive retry loop can exacerbate problems. The key is to implement exponential backoff, which progressively increases the delay between retries. This gives the target system time to recover and prevents your scraper from overwhelming an already struggling server.

```python
# A simple retry decorator in Python
import time
from functools import wraps

def retry(retries=3, delay=1, backoff=2):
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            mtries, mdelay = retries, delay
            while mtries > 1:
                try:
                    return func(*args, **kwargs)
                except Exception as e:
                    print(f"Error: {e}, Retrying in {mdelay} seconds...")
                    time.sleep(mdelay)
                    mtries -= 1
                    mdelay *= backoff
            return func(*args, **kwargs)
        return wrapper
    return decorator

@retry(retries=4, delay=2, backoff=2)
def fetch_url(url):
    # This will be retried with delays of 2s, 4s, 8s
    pass
```

Handling Specific HTTP Status Codes

Not all errors are equal. Your scraper should differentiate between temporary issues (like 503 Service Unavailable) and permanent ones (like 404 Not Found). Specifically, you should implement logic for status codes like 429 Too Many Requests, which is an explicit signal to slow down. Platforms like CrawlKit abstract this complexity away, automatically managing retries and handling transient network failures.

Key Insight: Monitor your retry and failure rates. A sudden spike is often the first indicator that a site has changed its structure, updated its anti-bot measures, or that your scraper's behavior has become detectable.
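The distinction between temporary and permanent failures can be expressed as a small classifier. This is a sketch under stated assumptions: the set of retryable codes and the 5-second fallback are choices made for illustration, and only the numeric-seconds form of the `Retry-After` header is handled (the header may also carry an HTTP date).

```python
# Status codes treated as transient in this sketch; adjust for your targets.
RETRYABLE = {429, 500, 502, 503, 504}


def classify_response(status_code, retry_after=None, fallback_delay=5.0):
    """Return an (action, wait_seconds) pair for an HTTP response.

    action is "ok", "retry", or "give_up". For retryable responses the wait
    comes from a numeric Retry-After header when present, else fallback_delay.
    """
    if 200 <= status_code < 300:
        return ("ok", 0.0)
    if status_code in RETRYABLE:
        wait = fallback_delay
        if retry_after is not None and retry_after.strip().isdigit():
            wait = float(retry_after.strip())
        return ("retry", wait)
    # Everything else (e.g. 404 Not Found) is treated as permanent here.
    return ("give_up", 0.0)
```

For example, `classify_response(429, retry_after="30")` yields a retry after 30 seconds, while a 404 is dropped without further attempts.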

7. Parse and Structure Data Correctly

Extracting raw HTML is only half the battle; the real value lies in transforming that chaotic soup of tags into clean, structured, and usable data. This is where proper parsing becomes a critical component of web scraping best practices. A robust parsing strategy ensures your data is accurate, reliable, and ready for any downstream application.

From HTML to Structured Data

Parsing involves using selectors (like CSS selectors or XPath) to pinpoint and extract specific pieces of information. The goal is to create a predictable, machine-readable format like JSON, moving from unstructured web content to a well-defined schema. For example, CrawlKit offers an API that can extract data directly to structured JSON based on your instructions.

A scraper targeting a job board would capture distinct fields for each listing:

```json
{
  "jobTitle": "Senior Software Engineer",
  "company": "Tech Solutions Inc.",
  "location": "San Francisco, CA",
  "salary": "$150,000 - $180,000",
  "postedDate": "2023-10-26"
}
```

Key Insight: A well-defined data structure is the foundation of a scalable data pipeline. By enforcing a schema during extraction, you prevent data quality issues from propagating downstream, saving significant time on cleaning and validation later.

Implementing Resilient Parsing Logic

Websites change, and selectors break. A resilient parser anticipates this by incorporating validation and cleaning steps directly after extraction. This includes stripping unwanted whitespace, decoding HTML entities (e.g., `&amp;` to `&`), and handling missing fields gracefully. For those working with diverse data formats, understanding how to convert a Python string to JSON is a fundamental skill.
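The cleaning steps listed above might look like the following sketch, which uses only the standard library. The `JobListing` schema reuses the job-board fields from the earlier example; which fields are required is an assumption made for illustration.

```python
import html
from dataclasses import dataclass
from typing import Optional


@dataclass
class JobListing:
    job_title: str
    company: str
    location: Optional[str] = None
    salary: Optional[str] = None


def clean_text(value):
    """Decode HTML entities and collapse whitespace; return None for empty input."""
    if value is None:
        return None
    text = " ".join(html.unescape(value).split())
    return text or None


def build_listing(raw: dict) -> JobListing:
    """Validate and normalize one raw extraction result."""
    title = clean_text(raw.get("jobTitle"))
    company = clean_text(raw.get("company"))
    if not title or not company:
        # Required fields missing usually means a selector has broken.
        raise ValueError("jobTitle and company are required")
    return JobListing(
        job_title=title,
        company=company,
        location=clean_text(raw.get("location")),  # optional, may be None
        salary=clean_text(raw.get("salary")),      # optional, may be None
    )
```

Raising on missing required fields, instead of silently storing empty strings, makes a broken selector visible as soon as it happens.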

Caption: Effective parsing turns messy HTML into clean, structured data formats like JSON, ready for analysis. Source: [Author's illustration]

8. Monitor, Log, and Observe Scraping Operations

A web scraper running in production without visibility is a black box waiting to fail. Implementing comprehensive monitoring, logging, and observability is a critical practice for maintaining data quality and debugging issues. This system acts as your early warning mechanism, transforming silent failures into actionable insights.

Implementing Comprehensive Logging

At a minimum, every request should generate a log entry with crucial details. Adopting a structured format like JSON is essential for making logs easily searchable and machine-readable.

A typical structured log entry should contain:

```json
{
  "timestamp": "2023-10-27T10:00:05Z",
  "job_id": "job-123-product-scrape",
  "url": "https://example.com/product/456",
  "status_code": 200,
  "latency_ms": 750,
  "proxy_ip": "192.0.2.1",
  "response_size_bytes": 15360,
  "data_extracted_items": 1
}
```

- `timestamp`: Records when the event occurred.

- `status_code`: Reveals the HTTP outcome (e.g., 200, 404, 429).

- `latency_ms`: Measures the request-response time.

Key Insight: Separate your operational logs (like the example above) from your data logs (the actual scraped content). This simplifies debugging and performance analysis.
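A structured operational log entry like the one above can be produced with Python's standard `logging` and `json` modules. The logger name `scraper.ops` and the helper names are assumptions for this sketch. Extra keyword arguments such as `proxy_ip` are passed through unchanged.

```python
import json
import logging
from datetime import datetime, timezone

# Dedicated logger for operational events, kept separate from scraped data.
logger = logging.getLogger("scraper.ops")


def build_log_entry(job_id, url, status_code, latency_ms, **extra):
    """Assemble one log record as a plain dict with a UTC ISO 8601 timestamp."""
    entry = {
        "timestamp": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "job_id": job_id,
        "url": url,
        "status_code": status_code,
        "latency_ms": latency_ms,
    }
    entry.update(extra)
    return entry


def log_request(**fields):
    """Serialize the entry as a single JSON line, log it, and return the line."""
    line = json.dumps(build_log_entry(**fields))
    logger.info(line)
    return line
```

Emitting one JSON object per line keeps the output easy to ingest for log tooling that expects newline-delimited JSON.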

Monitoring and Alerting on Key Metrics

While logs record what happened, monitoring tracks performance in real-time. Build dashboards and automated alerts based on key performance indicators (KPIs).

Essential metrics to monitor:

- Success Rate: Percentage of successful requests (e.g., 2xx status codes).

- Error Rate Breakdown: Track specific error codes like 403, 429, and 5xx.

- Average Latency: The average time taken for a request to complete.

Tools like Datadog, Grafana, or AWS CloudWatch can ingest structured logs and power these dashboards, providing a centralized view of your scraper's health.
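Before wiring up a dashboard, the three metrics above can be computed directly from a batch of structured log entries. The sketch assumes each entry is a dict with `status_code` and `latency_ms` keys, as in the log example. The 90% alert threshold is an arbitrary illustration.

```python
from collections import Counter


def summarize(entries):
    """Compute success rate, error breakdown, and average latency for log entries."""
    total = len(entries)
    if total == 0:
        return {"success_rate": 0.0, "errors": {}, "avg_latency_ms": 0.0}
    successes = sum(1 for e in entries if 200 <= e["status_code"] < 300)
    errors = Counter(e["status_code"] for e in entries if e["status_code"] >= 400)
    avg_latency = sum(e["latency_ms"] for e in entries) / total
    return {
        "success_rate": successes / total,
        "errors": dict(errors),
        "avg_latency_ms": avg_latency,
    }


def should_alert(summary, min_success_rate=0.9):
    """Flag a batch whose success rate falls below the chosen threshold."""
    return summary["success_rate"] < min_success_rate
```

Running this over a sliding window of recent entries gives a simple stand-in for the alerts a monitoring platform would provide.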

9. Respect Rate Limits and Implement Caching

Bombarding a server with too many requests is a surefire way to get your IP address banned. Respecting rate limits is a core tenet of ethical scraping, but an even smarter strategy is to avoid unnecessary requests altogether. Implementing an intelligent caching layer is one of the most effective web scraping best practices for reducing server load, cutting costs, and speeding up data collection.

Understanding Caching in Web Scraping

Caching involves temporarily storing data you've already fetched. Instead of hitting the target website every time, you first check your local cache. If the data is present and still "fresh," you use the cached version, bypassing the network request.

A caching strategy can be implemented at various levels:

- Full HTTP Response Caching: Store the entire raw HTML response.

- Parsed Data Caching: Store the structured JSON data after extraction.

- Aggregated Results Caching: Cache the final, combined results of multiple scraped pages.

Key Insight: Caching transforms your scraper from a constant resource consumer into an efficient data processor. It minimizes your footprint on the target server and significantly reduces bandwidth and proxy usage costs.

Caching Implementation and Invalidation

The effectiveness of a cache depends on its "invalidation" strategy. A common approach is using a Time-To-Live (TTL), where data expires after a set period. For example, a news aggregator might cache an article for 12 hours, while a price tracker might cache a product page for only 1 hour. Distributed caching systems like Redis or Memcached are excellent for managing caches in large-scale scraping operations.
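TTL-based invalidation can be illustrated with a small in-memory cache. This is a single-process sketch, not a substitute for Redis or Memcached. `TTLCache` and `fetch_with_cache` are hypothetical names, and the `fetch` argument stands in for whatever function actually performs the HTTP request.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}

    def get(self, key):
        item = self._store.get(key)
        if item is None:
            return None
        stored_at, value = item
        if self._clock() - stored_at >= self._ttl:
            del self._store[key]  # stale entry: drop it and report a miss
            return None
        return value

    def set(self, key, value):
        self._store[key] = (self._clock(), value)


def fetch_with_cache(url, cache, fetch):
    """Return the cached body for url if fresh, otherwise fetch and cache it."""
    cached = cache.get(url)
    if cached is not None:
        return cached
    body = fetch(url)
    cache.set(url, body)
    return body
```

With `TTLCache(ttl_seconds=3600)`, a price tracker would hit each product page at most once per hour no matter how often downstream code asks for it.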

Caption: Caching reduces redundant requests by serving previously fetched data from a local store like Redis. Source: [Author's illustration]

10. Design for Scalability and Distributed Scraping

Scraping a few hundred pages is one thing; scraping millions requires a fundamental shift in architecture. Designing for scalability is a critical web scraping best practice that prevents your scraper from collapsing under its own weight. Instead of a single, monolithic script, you should architect your system as a distributed, parallelized operation.

From Monolith to Micro-Tasks

A scalable scraping architecture breaks the process down into independent, stateless tasks. Each URL becomes a job that can be processed by any available "worker." This model allows you to add or remove workers (horizontal scaling) based on demand.

The key is to decouple the different stages of scraping:

- URL Generation: A "producer" process finds URLs and adds them to a queue.

- Fetching: Stateless "worker" processes pull URLs from the queue and fetch the content.

- Parsing: A separate pool of workers consumes the HTML and extracts the data.

A conceptual job for a task queue:

```json
{
  "jobId": "a1b2-c3d4-e5f6",
  "url": "https://example.com/product/123",
  "parser": "product_page_parser",
  "metadata": {
    "category": "electronics",
    "priority": "high"
  }
}
```

Key Insight: Treat every URL as an independent, queueable unit of work. This simple principle is the foundation for building a robust, distributed crawler that can scale almost infinitely.

Strategies for Scalable Scraping

To implement a distributed system, focus on statelessness and effective job management. Use task queues like Celery (Python) or BullMQ (Node.js). For URL management at scale, employ a deduplication mechanism like a Bloom filter or a Redis set to avoid re-scraping the same page. Cloud-native solutions or platforms like CrawlKit abstract away this infrastructure complexity entirely. For a deeper dive into these patterns, you can learn more about building a web crawler from scratch.
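The queue-plus-deduplication idea can be sketched in a single process with the standard library, using threads in place of distributed workers and a Python set in place of a Redis set or Bloom filter. `Frontier` and `run_workers` are hypothetical names, and `handle` stands in for the fetch-and-parse step.

```python
import queue
import threading
from urllib.parse import urldefrag


class Frontier:
    """Thread-safe URL queue that enqueues each URL at most once."""

    def __init__(self):
        self._queue = queue.Queue()
        self._seen = set()
        self._lock = threading.Lock()

    def add(self, url):
        """Enqueue url unless it was seen before; fragments are ignored."""
        normalized, _fragment = urldefrag(url)
        with self._lock:
            if normalized in self._seen:
                return False
            self._seen.add(normalized)
        self._queue.put(normalized)
        return True

    def pop(self):
        """Return the next URL, or None when the queue is empty."""
        try:
            return self._queue.get_nowait()
        except queue.Empty:
            return None


def run_workers(frontier, handle, num_workers=4):
    """Drain the frontier with stateless workers; return (url, result) pairs."""
    results = []
    results_lock = threading.Lock()

    def worker():
        while True:
            url = frontier.pop()
            if url is None:
                return  # nothing left to do
            outcome = handle(url)
            with results_lock:
                results.append((url, outcome))

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Workers here exit as soon as the queue is empty, which suits a fixed batch of URLs. A crawler whose handlers discover and enqueue new URLs would need a different termination signal, which task queues such as Celery or BullMQ handle for you.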

FAQ: Web Scraping Best Practices

1. Is web scraping legal?

Web scraping publicly available data is generally considered legal, but it exists in a legal gray area. Best practices include never scraping private or copyrighted data, respecting robots.txt and Terms of Service, and avoiding overwhelming the target server. Consult with a legal professional for specific use cases.

2. How do I avoid getting blocked while scraping?

The best way is to mimic human behavior. Key techniques include rotating high-quality residential proxies, using realistic and rotating User-Agents, implementing intelligent rate limiting with randomized delays, and handling CAPTCHAs when they appear.

3. What is the most important web scraping best practice?

Ethical considerations are paramount. Always start by checking the website's robots.txt file and Terms of Service. Scraping responsibly ensures the long-term viability of your project and respects the website's resources.

4. How do I scrape websites that use JavaScript?

You need to render the page's JavaScript. This can be done by using a headless browser library like Puppeteer or Playwright. Alternatively, a web scraping API like CrawlKit can handle JavaScript rendering automatically, saving you from managing complex browser infrastructure.

5. What is a User-Agent and why should I rotate it?

A User-Agent is an HTTP header that identifies your browser and operating system to the server. Rotating User-Agents makes your requests look like they are coming from different users on different devices, which helps avoid simple bot detection systems.

6. Should I build my own scraping infrastructure or use an API?

Building your own infrastructure gives you full control but requires significant investment in managing proxies, headless browsers, and anti-bot solutions. An API-first platform like CrawlKit abstracts away this complexity, letting you focus on data extraction. It's often more cost-effective and scalable, especially for teams.

7. How fast can I scrape a website?

This depends on the site's Crawl-Delay directive in robots.txt and its tolerance for automated traffic. A safe starting point is one request every 2-5 seconds. Use adaptive rate limiting to adjust your speed based on server responses (like HTTP 429 errors).

8. What's the best data format for scraped data?

JSON (JavaScript Object Notation) is the most common and versatile format. It is lightweight, human-readable, and easily parsed by virtually all programming languages, making it ideal for APIs, databases, and data analysis pipelines.

Next steps

Mastering these web scraping best practices transforms you from someone who can write a script into a developer who can build reliable, scalable, and ethical data pipelines. By combining technical skill with a respectful approach, you can unlock the immense value of web data without disrupting the ecosystem.

The next step is to put this knowledge into practice. Instead of building and maintaining your own complex scraping infrastructure, consider how a developer-first platform can accelerate your work. CrawlKit is an API-first web data platform that handles proxies, JavaScript rendering, and anti-bot systems, so you can focus on what matters: the data. You can start free, with no scraping infrastructure required.

- Try the Playground: How CrawlKit's API Works

- Read the Docs: CrawlKit API Documentation

- Build a Web Crawler: How to Build a Web Crawler from Scratch