Meta Title: Proxy IP Rotator: The Ultimate Guide for Web Scraping

Meta Description: Learn what a proxy IP rotator is, how it works, and why it's essential for web scraping. Avoid IP blocks and gather data at scale with our guide.

If you've ever tried to collect web data at scale, you've likely hit a wall. Websites use anti-bot systems to spot and block automated traffic, and their first line of defense is tracking how many requests arrive from a single IP address. A proxy IP rotator is your essential tool for overcoming this, making your scraper look less like a single bot and more like a crowd of unique, organic users.

Table of Contents

- What Is a Proxy IP Rotator and Why Is It Essential?

- How Different IP Rotation Strategies Work

- Implementing Your Own Proxy IP Rotator

- Advanced Techniques to Evade Bot Detection

- How to Choose the Right Type of Rotating Proxies

- Let CrawlKit Handle Your Entire Scraping Infrastructure

- Frequently Asked Questions

What Is a Proxy IP Rotator and Why Is It Essential?

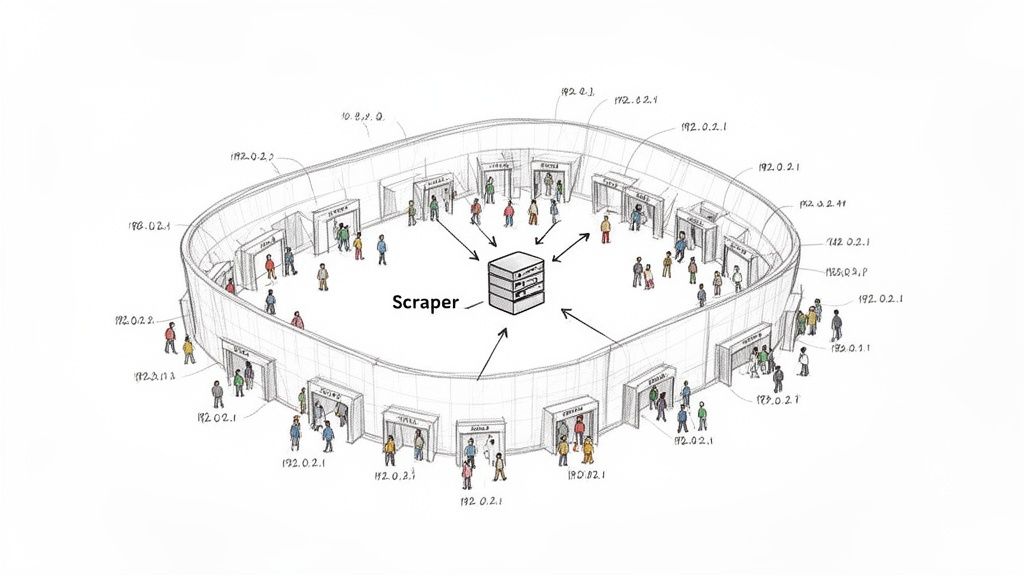

A proxy IP rotator is a service that automatically cycles through a pool of IP addresses, giving your connections a different IP per request or per session. Instead of hammering a website with thousands of requests from a single IP (a dead giveaway that you're scraping), a rotator spreads your traffic across many different IPs. This makes it much harder for a website to detect and block your data collection efforts.

Think of it like getting a thousand people into a packed stadium. Sending them all through one gate creates a jam and draws security's attention. A proxy rotator gives each person their own entrance, making the flow smooth, distributed, and unnoticeable.

Caption: A proxy rotator distributes requests across a vast network of IP addresses, mimicking organic user traffic.

Source: Generated with AI

The Core Problems Solved by IP Rotation

Manually managing a list of proxies is a nightmare of testing, replacing dead IPs, and updating lists. A proxy IP rotator automates this heavy lifting, solving the most common challenges in web data collection.

| Scraping Challenge | How an IP Rotator Solves It |
|---|---|
| IP Bans and Blocks | Constantly changes your IP, preventing sites from flagging you for high request volume. |
| Rate Limiting | Distributes requests across many IPs so no single one hits the rate limit threshold. |
| Geo-Restrictions | Lets you use IPs from specific countries to access localized content like regional pricing. |
| Anonymity & Footprints | Masks your scraper's true IP address, making your activity harder to trace. |
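The Rate Limiting row in the table above is easiest to see in code. Below is a minimal sketch, assuming a hypothetical per-IP minimum gap (`min_interval`) that you would have to measure for each target. It always hands out the proxy that has been idle longest, and only waits when every proxy is still cooling down. The class name and defaults are illustrative, and the sketch is single-threaded.

```python
import heapq
import time


class RateAwareRotator:
    """Hands out the proxy that has been idle longest, waiting only if every
    proxy was used less than `min_interval` seconds ago (hypothetical sketch)."""

    def __init__(self, proxies, min_interval=5.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        # Heap of (next_allowed_time, position, proxy); every proxy starts available.
        self._heap = [(0.0, i, p) for i, p in enumerate(proxies)]
        heapq.heapify(self._heap)

    def acquire(self):
        next_allowed, pos, proxy = heapq.heappop(self._heap)
        now = self.clock()
        if next_allowed > now:
            # Every proxy is still cooling down: wait for the earliest one.
            self.sleep(next_allowed - now)
            now = next_allowed
        # This proxy may not be reused until min_interval seconds from now.
        heapq.heappush(self._heap, (now + self.min_interval, pos, proxy))
        return proxy
```

With three proxies and a 6-second gap, overall throughput tops out at one request every 2 seconds. Adding proxies raises that ceiling proportionally without any single IP sending faster.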

This technology has become so critical that market research projects strong growth for the global rotating proxy market. It's foundational infrastructure for any serious data operation. You can read the full research about the rotating proxy market for a deeper dive.

For developers, this means you no longer need to build and maintain complex proxy infrastructure. API-first platforms like CrawlKit bundle proxy rotation, browser rendering, and anti-bot measures into a single API call. You focus on the data you get back—all the proxy and anti-bot logic is abstracted away.

How Different IP Rotation Strategies Work

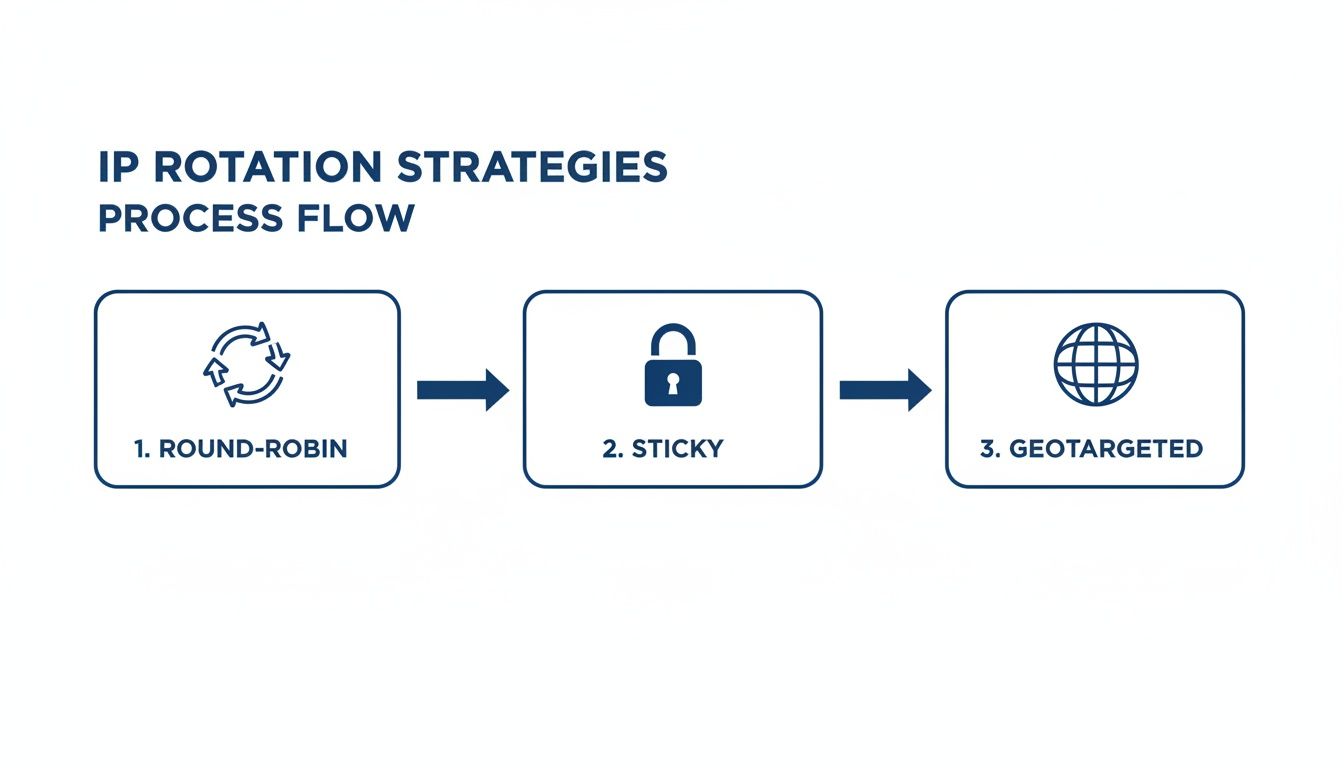

Not all IP rotation is the same. Choosing the right proxy IP rotator strategy depends on your target website and data collection goals. Some tasks require a quick, disposable identity, while others need a consistent session to complete a multi-step process.

Caption: Different IP rotation strategies—like round-robin, sticky sessions, and geotargeting—serve different scraping needs.

Source: Generated with AI

Round Robin Rotation

This is the simplest strategy: for every new request, the rotator provides the next IP address in the pool, looping back to the start when it reaches the end.

- Best For: Scraping large sets of independent pages, like product listings or search results, where each request is a standalone event.

- Advantage: It spreads requests evenly across your entire IP pool, minimizing the load on any single proxy.

- Limitation: It will break any user session that requires a login or multi-step navigation, as the IP changes with every request.

Here's how this looks with cURL. Each command would use a different IP from the pool.

```bash
# Each time this command runs, the proxy provider rotates the outbound IP
curl -x "http://rotating.gateway.com:8080" "https://example.com/product/1"
curl -x "http://rotating.gateway.com:8080" "https://example.com/product/2"
```

Sticky Sessions

For modern web apps, you need a stable identity. Sticky sessions (or persistent sessions) assign a single IP address to your scraper that "sticks" for a set time or a certain number of requests.

This mimics how a real person browses, staying on the same IP while clicking through pages, filling out forms, or adding items to a cart.

For any task involving user accounts, checkout processes, or complex navigation, sticky sessions are non-negotiable. Without them, many sites will log you out or invalidate your session as soon as your IP changes.

For example, our guide on how to scrape LinkedIn data effectively explains why stable session management is critical for professional networking sites.
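On the client side, sticky behavior can be sketched as a small pool that pins each session ID to one proxy until the session reaches an age or request limit. This is a minimal, hypothetical sketch. The class name, the 10-minute default, and the 50-request cap are illustrative assumptions. Many providers implement stickiness on their gateway instead, commonly through a session parameter in the proxy credentials whose format is provider-specific.

```python
import itertools
import time


class StickySessionPool:
    """Pins each session ID to one proxy until it expires by age or request count
    (hypothetical client-side sketch)."""

    def __init__(self, proxies, ttl_seconds=600.0, max_requests=50, clock=time.monotonic):
        self._next_proxy = itertools.cycle(proxies)  # round robin for new sessions
        self.ttl_seconds = ttl_seconds
        self.max_requests = max_requests
        self.clock = clock
        self._sessions = {}  # session_id -> [proxy, started_at, request_count]

    def proxy_for(self, session_id):
        now = self.clock()
        entry = self._sessions.get(session_id)
        expired = entry is None or (
            now - entry[1] >= self.ttl_seconds or entry[2] >= self.max_requests
        )
        if expired:
            # Start a fresh sticky session on the next proxy in the rotation.
            entry = [next(self._next_proxy), now, 0]
            self._sessions[session_id] = entry
        entry[2] += 1
        return entry[0]
```

Pair each session ID with its own cookie jar (for example, a `requests.Session`) so that cookies and IP address stay aligned for the whole flow.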

Geotargeted and ISP Rotation

Advanced strategies offer more precise control.

- Geotargeted Rotation: Lets you route traffic through proxies from specific countries or cities. This is essential for verifying localized content, testing international SEO, or accessing geo-restricted services.

- ISP Rotation: Lets you use proxies assigned to specific Internet Service Providers (like Comcast or AT&T). This helps mimic authentic residential users, making it much harder for anti-bot systems to distinguish your scraper from legitimate traffic.
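If you manage your own pool, geotargeting and ISP targeting come down to filtering proxies by metadata before picking one. The sketch below assumes each proxy is tagged with a country code and an ISP name. The `ProxyEndpoint` type, the `pick_proxy` helper, and the addresses (taken from reserved documentation ranges) are hypothetical. Commercial gateways usually expose the same choice through provider-specific request parameters.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ProxyEndpoint:
    url: str
    country: str  # ISO 3166-1 alpha-2 code, e.g. "DE"
    isp: str


# Hypothetical pool; real metadata would come from your provider or your own lookups.
POOL = [
    ProxyEndpoint("http://203.0.113.10:8080", "US", "Comcast"),
    ProxyEndpoint("http://203.0.113.11:8080", "US", "AT&T"),
    ProxyEndpoint("http://198.51.100.7:8080", "DE", "Deutsche Telekom"),
]


def pick_proxy(pool, country=None, isp=None, rng=random):
    """Return a random proxy matching the requested country and/or ISP."""
    candidates = [
        p for p in pool
        if (country is None or p.country == country)
        and (isp is None or p.isp == isp)
    ]
    if not candidates:
        raise LookupError(f"no proxy for country={country!r}, isp={isp!r}")
    return rng.choice(candidates)
```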

Implementing Your Own Proxy IP Rotator

When it's time to implement a proxy IP rotator, you can either build a basic one yourself or use a proxy provider's gateway endpoint, which handles the logic for you. The choice depends on your project's scale, engineering resources, and how much infrastructure you want to manage.

The DIY Approach: Building Your Own Rotator

Building your own IP rotator gives you total control and a deep understanding of proxy management. The core idea is simple: maintain a list of proxy IPs and cycle through them with each request.

Here’s a basic round-robin example in Python:

```python
import requests

# Placeholder proxy URLs: replace with real host:port pairs from your provider
proxy_list = [
    'http://proxy1:port',
    'http://proxy2:port',
    'http://proxy3:port',
]
current_proxy_index = 0


def get_rotating_proxy():
    """Return the next proxy in the list, wrapping around at the end (round robin)."""
    global current_proxy_index
    proxy = proxy_list[current_proxy_index]
    current_proxy_index = (current_proxy_index + 1) % len(proxy_list)
    # requests expects a mapping from URL scheme to proxy URL
    return {'http': proxy, 'https': proxy}


# Usage
url = 'https://api.example.com/data'
proxies = get_rotating_proxy()
response = requests.get(url, proxies=proxies, timeout=10)  # avoid hanging on a dead proxy
print(response.text)
```

However, a production-ready DIY rotator needs much more:

- Health Checks: Logic to detect and sideline dead or slow proxies.

- Retry Mechanisms: A system to automatically retry a failed request with a new proxy.

- Session Management: Code to implement sticky sessions for sites that require them.

- Scalability: A way to efficiently manage thousands of proxies.
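The first two items on that list, health checks and retries, can be sketched together. This is a minimal, hypothetical design, not a production system. A proxy that fails `max_failures` times in a row is benched for `cooldown` seconds, and each failed request is retried on the next healthy proxy. The `fetch_fn` callback is an assumption that keeps the sketch independent of any particular HTTP library.

```python
import itertools
import time


class HealthCheckedRotator:
    """Round-robin rotator that benches a proxy after repeated failures
    and retries failed requests on the next healthy proxy (hypothetical sketch)."""

    def __init__(self, proxies, max_failures=3, cooldown=300.0, clock=time.monotonic):
        self.proxies = list(proxies)
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock
        self._order = itertools.cycle(self.proxies)
        self._failures = {p: 0 for p in self.proxies}
        self._benched_until = {p: 0.0 for p in self.proxies}

    def next_healthy(self):
        """Return the next proxy in round-robin order that is not benched."""
        now = self.clock()
        for _ in range(len(self.proxies)):
            proxy = next(self._order)
            if self._benched_until[proxy] <= now:
                return proxy
        raise RuntimeError("all proxies are benched")

    def report(self, proxy, ok):
        """Record a success or failure; bench the proxy after too many failures."""
        if ok:
            self._failures[proxy] = 0
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self.max_failures:
            # Sideline the proxy for a cool-down period, then give it another chance.
            self._benched_until[proxy] = self.clock() + self.cooldown
            self._failures[proxy] = 0

    def fetch(self, url, fetch_fn, attempts=3):
        """Call fetch_fn(url, proxy), retrying on a different proxy after each failure."""
        last_error = None
        for _ in range(attempts):
            proxy = self.next_healthy()
            try:
                result = fetch_fn(url, proxy)
            except Exception as exc:  # network errors, timeouts, detected blocks
                self.report(proxy, ok=False)
                last_error = exc
                continue
            self.report(proxy, ok=True)
            return result
        raise RuntimeError(f"all {attempts} attempts failed") from last_error
```

With `requests`, `fetch_fn` could wrap `requests.get(u, proxies={"http": p, "https": p}, timeout=10)` and call `raise_for_status()` on the response before returning it, so that 403 and 429 responses count as failures instead of being returned as data.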

The Provider Gateway Approach

This is the more common and practical route. Instead of building the rotator, you use a proxy provider's single gateway endpoint (often called a "backconnect proxy"). Your scraper sends all requests to this one address, and the provider's servers handle the IP rotation.

This approach abstracts away all the complexity. You don't manage proxy lists, health checks, or rotation logic.

You just configure your HTTP client to use the gateway address:

```bash
# Example using a proxy provider's gateway endpoint
curl -x "http://username:password@proxy.provider.com:8080" \
  -H "User-Agent: MyScraper/1.0" \
  "https://target-website.com"
```

This method is far more scalable and reliable. The provider's business is to maintain a massive, healthy pool of IPs and keep its rotation strategies working reliably. To learn more about implementation, see how this pattern works in our guide to web scraping with Java.
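Configuring a Python client for the same gateway works the same way. Here is a minimal sketch using only the standard library's `urllib.request`. The host, port, credentials, and User-Agent are the placeholder values from the cURL example above, and the helper names are hypothetical.

```python
import urllib.parse
import urllib.request


def gateway_url(username, password, host="proxy.provider.com", port=8080):
    """Build an authenticated proxy URL, percent-encoding the credentials
    so characters such as '@' or ':' don't break URL parsing."""
    user = urllib.parse.quote(username, safe="")
    pwd = urllib.parse.quote(password, safe="")
    return f"http://{user}:{pwd}@{host}:{port}"


def build_gateway_opener(proxy_url, user_agent="MyScraper/1.0"):
    """Return an opener that sends every HTTP and HTTPS request through the gateway."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    opener.addheaders = [("User-Agent", user_agent)]
    return opener
```

To send a request, call `build_gateway_opener(gateway_url("username", "password")).open("https://target-website.com", timeout=30)`. The gateway picks the outbound IP, so the client code never changes as the pool rotates.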

Advanced Techniques to Evade Bot Detection

A good proxy IP rotator is your foundation, but the toughest websites look at more than just your IP. To truly blend in, you need to mimic the subtle fingerprints of a real user's browser, ensuring consistency across all aspects of your request.

Going Beyond Simple IP Rotation

Sophisticated anti-bot systems analyze your entire request profile. If your IP belongs to a residential connection in Germany but your browser reports a preference for English and a California time zone, you'll raise a red flag.

Here are other key elements to manage:

- User-Agents: Rotate through a list of realistic, up-to-date User-Agent strings (e.g., the latest versions of Chrome on Windows or Safari on macOS).

- HTTP Headers: Vary other headers such as `Accept-Language`, `Accept-Encoding`, and `Referer` to build a believable profile and mimic a natural browsing journey, while keeping them consistent with each other and with your IP's location.

- Browser Fingerprints: Modern bot detectors use JavaScript to collect data points such as screen resolution, installed fonts, and plugins, producing a fingerprint that can identify your scraper even after you change IPs.

Caption: A browser fingerprint is composed of dozens of data points that can uniquely identify a user's device.

Source: Example.com
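One way to avoid the Germany/English/California mismatch described above is to derive every header from the proxy's country instead of randomizing each one independently. This is a hypothetical sketch. The country profiles and User-Agent strings are examples that go stale quickly. The time zone is returned separately because browsers expose it through JavaScript rather than an HTTP header, so it has to be set in whatever headless browser you use.

```python
import random

# Hypothetical per-country profiles; extend with the countries in your proxy pool.
COUNTRY_PROFILES = {
    "DE": {"accept_language": "de-DE,de;q=0.9,en;q=0.6", "timezone": "Europe/Berlin"},
    "US": {"accept_language": "en-US,en;q=0.9", "timezone": "America/Los_Angeles"},
}

# Example desktop User-Agent strings; refresh them as browser versions change.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
]


def profile_for(proxy_country, referer=None, rng=random):
    """Build headers, plus a time zone for browser emulation, that match the proxy's country."""
    profile = COUNTRY_PROFILES[proxy_country]
    headers = {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": profile["accept_language"],
        # Only advertise encodings your HTTP client can actually decode.
        "Accept-Encoding": "gzip, deflate",
    }
    if referer:
        headers["Referer"] = referer  # e.g. the page a real user would arrive from
    return {"headers": headers, "timezone": profile["timezone"]}
```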

Navigating CAPTCHAs and Advanced Hurdles

CAPTCHAs are designed to stop bots, so the best strategy is to avoid triggering them in the first place, and high-quality residential proxies are key. These IPs come from real home internet connections, which websites generally trust more than datacenter ranges, so they are far less likely to be flagged.

To learn more, check out our guide on how to scrape leads safely without getting blocked.

How CrawlKit Simplifies Evasion

Managing IP rotation, User-Agents, headers, fingerprints, and CAPTCHA avoidance is a full-time infrastructure job. This is the problem CrawlKit was built to solve. As a developer-first, API-first web data platform, we handle all this complexity for you.

When you make a request to our API, we automatically manage:

- Massive Proxy Rotation: Using a huge, diverse pool of high-quality proxies.

- Header and Fingerprint Management: Generating realistic browser headers and unique fingerprints for every request.

- Anti-Bot Abstraction: Navigating and bypassing common anti-bot measures, including CAPTCHAs.

There is no scraping infrastructure for you to build or maintain. You send us a URL, and we send you back clean, structured JSON. Our guide on web scraping best practices offers more detail. You can start free and focus on using data, not fighting for it.

How to Choose the Right Type of Rotating Proxies

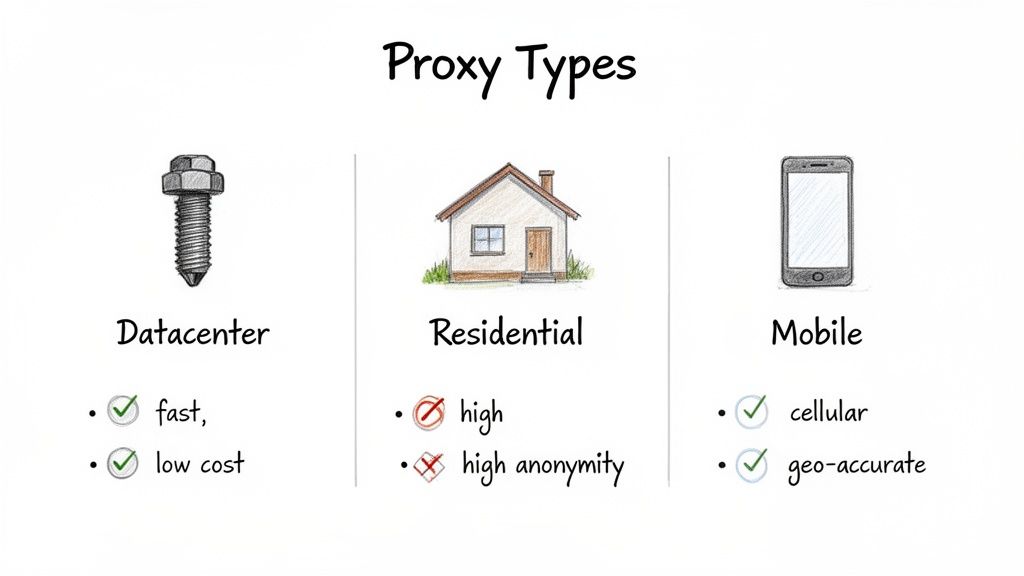

Picking the right proxy type for your proxy IP rotator can make or break your project. The choice depends on your budget, request volume, and the target's security. The three main types are Datacenter, Residential, and Mobile proxies.

Caption: Each proxy type offers a different balance of cost, speed, and anonymity.

Source: Generated with AI

Datacenter Proxies

These are fast, budget-friendly IPs from servers in data centers. However, because they come from known commercial blocks, they are the easiest for websites to detect and block.

Residential Proxies

The gold standard for scraping protected targets. These are real IP addresses that Internet Service Providers (ISPs) assign to actual homes. Their traffic looks like ordinary household browsing, so they get past many of the blocks and CAPTCHAs that stop datacenter proxies.

Because they use real home internet connections for authenticity, residential rotating proxies have become the dominant force in the market. Discover more insights about the rotating proxy market on archivemarketresearch.com.

Mobile Proxies

The most advanced and expensive option. These IPs are assigned to devices on cellular networks. Websites are very reluctant to block mobile IPs because carriers often share a single IP among large numbers of real users, so blocking it risks locking out a large portion of the site's legitimate audience.

Datacenter vs. Residential vs. Mobile Proxies

| Proxy Type | Key Feature | Best For | Detection Risk |
|---|---|---|---|
| Datacenter | Fast & Cheap | Scraping sites with weak bot detection | High |
| Residential | High Trust & Authentic | E-commerce, social media, protected sites | Low |
| Mobile | Highest Anonymity | Mobile-first content, the toughest targets | Very Low |

For lenient targets, datacenter proxies are a good start. For most scalable scraping projects, residential proxies offer the best reliability. For the toughest targets, mobile proxies provide the highest level of anonymity.
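That guidance also suggests a simple cost-saving pattern: start each target on the cheapest tier and move up only when it gets blocked. Here is a minimal sketch under stated assumptions. `fetch_fn` is a hypothetical callable that sends the request through a proxy of the given tier and raises `Blocked` when it detects a block, for example a 403, a 429, or a CAPTCHA page. The tier names mirror the table above.

```python
# Cheapest tier first, most trusted last, following the guidance above.
TIERS = ["datacenter", "residential", "mobile"]


class Blocked(Exception):
    """Raised by fetch_fn when the target answers with a block page or status."""


def fetch_with_escalation(url, fetch_fn, tiers=TIERS, start_tier=None):
    """Try each proxy tier in order, escalating only when the current tier is blocked.

    Returns (tier_used, result) so callers can remember the cheapest working tier.
    """
    start = tiers.index(start_tier) if start_tier else 0
    for tier in tiers[start:]:
        try:
            return tier, fetch_fn(url, tier)
        except Blocked:
            continue  # this tier is blocked; try the next, more trusted one
    raise Blocked(f"{url} blocked on every tier")
```

Storing the returned tier per domain and passing it back as `start_tier` lets later requests skip tiers that are already known to fail.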

Let CrawlKit Handle Your Entire Scraping Infrastructure

Building and maintaining a proxy IP rotator is a complex infrastructure project that distracts engineers from their core mission. That's why we built CrawlKit. It’s a developer-first, API-first web data platform designed to completely abstract away the messy backend of web scraping.

Stop Babysitting Infrastructure

Instead of wrestling with flaky scripts and blacklisted proxies, you make one clean API call. We handle everything behind the scenes—proxies, browsers, and anti-bot systems—so you get the data you need without the drama.

The philosophy is simple: developers should focus on building products, not maintaining brittle scraping infrastructure.

Our platform is engineered to turn any website into structured JSON. You can learn more about our powerful scraping API and see how it eliminates the need for in-house infrastructure.

Caption: CrawlKit abstracts the entire scraping stack, turning complex infrastructure management into a simple API call.

Source: CrawlKit.sh

Get Started in Minutes, Not Months

There is no scraping infrastructure for you to build or maintain. Period. Our intelligent system automatically manages a massive, diverse proxy pool, rotates headers and fingerprints, and navigates common anti-bot defenses for you.

You can start free and see the difference immediately.

- Try the Playground for free: Make live requests right from your browser.

- Read the Docs: Check out our clear, practical API documentation.

- Start Free: Sign up and get your API key in seconds.

Frequently Asked Questions

Here are answers to common questions about using a proxy IP rotator.

1. What is a proxy IP rotator?

A proxy IP rotator is a service that automatically cycles through a large pool of IP addresses. Each request your application makes can go out through a different IP (or through the same IP for the length of a sticky session), which helps avoid IP blocks, bypass rate limits, and make your traffic look like it comes from many different users instead of a single bot.

2. Is using a proxy IP rotator legal?

In most jurisdictions, yes: the technology itself is legal and is a standard tool for security, privacy, and data collection. What matters is how you use it. Always respect a website's terms of service, avoid scraping personal or copyrighted data, and comply with data privacy regulations like GDPR and CCPA.

3. How many proxies do I need for rotation?

It depends on your target. For a simple site, a small pool of 10-20 datacenter proxies might be enough. For a heavily protected e-commerce or social media site, you may need thousands of residential proxies to keep your request-per-IP ratio low enough to avoid detection. A platform like CrawlKit manages this for you.
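The request-per-IP ratio turns into a quick back-of-the-envelope estimate. The sketch assumes you have measured a safe per-IP budget for your target, since sites rarely publish their thresholds. It also adds headroom for proxies that are slow, dead, or temporarily benched. The function name and the 1.5x default are illustrative.

```python
import math


def required_pool_size(requests_per_hour, safe_requests_per_ip_per_hour, headroom=1.5):
    """Estimate how many IPs keep each one under an assumed per-IP request budget.

    safe_requests_per_ip_per_hour is an assumption you must measure per target;
    headroom (>= 1) covers proxies that are slow, dead, or temporarily benched.
    """
    if safe_requests_per_ip_per_hour <= 0:
        raise ValueError("per-IP budget must be positive")
    return math.ceil(requests_per_hour / safe_requests_per_ip_per_hour * headroom)
```

For example, 12,000 requests per hour with an assumed budget of 60 requests per IP per hour works out to 200 IPs, or 300 with the default headroom.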

4. What is the difference between a rotating proxy and a backconnect proxy?

They are often used interchangeably. A rotating proxy is the general concept of cycling through IPs. A backconnect proxy is a specific implementation where you connect to a single gateway address, and the provider's server handles the rotation on the backend. This is the model most modern proxy providers use.

5. Can I build my own proxy IP rotator?

Yes, you can write a simple script to rotate through a list of IPs. However, building a production-ready system that handles health checks, retries, session management, and scalability is a complex engineering challenge. Using a provider or an API platform is often more reliable and cost-effective.

6. How do sticky sessions work with a rotator?

A sticky session assigns a single IP address to your scraper for a set duration (e.g., 10 minutes) or a certain number of requests. This is crucial for multi-step actions like logging in or completing a checkout process, as it ensures all actions appear to come from the same user.

7. Are rotating proxies the same as a VPN?

No. A VPN is designed for an individual's privacy: it encrypts all of a device's traffic and routes it through a single, stable server. A proxy rotator is built for automation at scale, distributing thousands of requests across many IPs to mimic human traffic and avoid blocks. It doesn't add an encryption layer of its own, although HTTPS requests sent through it stay encrypted end to end.

8. What's the best type of proxy for rotation?

- Datacenter proxies are fast and cheap but easily detected.

- Residential proxies are IPs from real homes, making them highly trusted and effective for most targets.

- Mobile proxies offer the highest anonymity but are the most expensive.

For most serious scraping projects, residential proxies provide the best balance of performance and reliability.

Next steps

- Web Scraping Best Practices: A Developer's Guide

- How to Scrape LinkedIn Data Without Getting Blocked

- The Ultimate Guide to Web Scraping with Java