Meta Title: 12 Best Data Extraction Software for 2024 (APIs & Tools)

Meta Description: Discover the best data extraction software, from developer APIs to no-code tools. Compare features, pricing, and use cases to find the right solution.

Finding the right tool to gather web data is critical for everything from market research and lead generation to training AI models. This guide dives deep into the best data extraction software, providing a practical resource for developers, data scientists, and business analysts. We'll move beyond marketing claims to offer a hands-on analysis of the top platforms available today.

Table of Contents

- 1. CrawlKit

- 2. Zyte (Zyte API + Scrapy Cloud)

- 3. Bright Data (Web Scraper API)

- 4. Oxylabs (Web Scraper API)

- 5. Apify (Platform + Apify Store)

- 6. ScrapingBee (Web Scraping API)

- 7. ScraperAPI

- 8. Octoparse

- 9. ParseHub

- 10. Diffbot

- 11. SerpApi (Search Engines Data Extraction)

- 12. Capterra – Web Scraping Software Category

- Comparison of Top Data Extraction Tools

- Making Your Final Decision

- Frequently Asked Questions (FAQ)

- Next Steps

1. CrawlKit

CrawlKit is a developer-first, API-first web data platform designed to turn complex websites, social media profiles, and app store pages into structured JSON with a single API call. It's one of the best data extraction software options for teams that need reliable, production-ready data without building or maintaining scraping infrastructure. All the complexities of proxies, headless browsers, and anti-bot systems are abstracted away.

CrawlKit’s strength lies in its simplicity and reliability. Engineers can focus on using data rather than acquiring it. The platform handles proxy rotation, JavaScript rendering, rate limit management, and anti-bot countermeasures behind the scenes. It also ensures pages have fully loaded before returning data, preventing common issues with partial or broken results. You can start free to test the API.

A developer interacts with the CrawlKit API to pull structured data into an AI application. Source: CrawlKit.

Key Features & Use Cases

- Broad Platform Coverage: Specialized APIs for scraping web pages, taking screenshots, performing searches, and extracting structured data from LinkedIn (companies, people), app reviews (Google Play/Apple App Store), and more.

- Structured, LLM-Ready JSON: All responses are delivered in validated JSON, ideal for CRM enrichment, competitive intelligence, and collecting high-quality training data for AI models.

- Developer-Centric Integrations: Simple HTTP endpoints and SDKs integrate seamlessly into any tech stack. For those interested in the underlying mechanics, the team offers guides on how to build a web crawler.

Here's how to scrape a website's content and metadata with a simple cURL request:

curl "https://api.crawlkit.sh/v1/scrape/web" \
  -H "Authorization: Bearer YOUR_API_TOKEN" \
  -d '{ "url": "https://example.com" }'

Pricing

CrawlKit uses a transparent, pay-as-you-go credit system. Credits never expire, and there are no monthly subscriptions. You can start free with 100 credits to test the API. Refunds are automatically issued for failed requests, so you only pay for successful extractions.
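For teams working in Python, the cURL request shown earlier in this section could be built roughly as follows. This is a minimal sketch using only the standard library. The endpoint, the `Authorization` header, and the request body are copied from the cURL example; the `Content-Type` header, the helper names `build_scrape_request` and `scrape`, and the assumption that the response body is JSON are illustrative guesses, so check the official docs before relying on them.

```python
import json
import urllib.request

API_URL = "https://api.crawlkit.sh/v1/scrape/web"  # endpoint from the cURL example


def build_scrape_request(target_url: str, api_token: str) -> urllib.request.Request:
    """Build (but do not send) a POST request mirroring the cURL example."""
    body = json.dumps({"url": target_url}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_token}",
            # Assumption: the API expects a JSON content type.
            "Content-Type": "application/json",
        },
    )


def scrape(target_url: str, api_token: str) -> dict:
    """Send the request and decode the response, assumed here to be JSON."""
    request = build_scrape_request(target_url, api_token)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to inspect or unit-test the request without spending credits.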

Pros and Cons

- Pros:

- No Scraping Infrastructure: Abstracted proxies and anti-bot systems mean you can start immediately.

- Reliable, Production-Ready Output: Validated JSON responses ensure data quality.

- Transparent and Flexible Pricing: Credit-based model with a free tier and refunds on failures.

- Developer-Focused: Built for engineers with excellent documentation.

- Cons:

- API-Only: Not suitable for non-technical users who need a visual interface.

- Emerging Platform: While coverage is strong, some enterprise-level features may require a direct conversation with their team.

Try the Playground / Read Docs / Start Free

2. Zyte (Zyte API + Scrapy Cloud)

Zyte offers an enterprise-focused suite for web data extraction, pairing the powerful Zyte API with Scrapy Cloud for comprehensive pipeline orchestration. It stands out by abstracting away the complexities of proxy management, CAPTCHA solving, and browser rendering, bundling them into a success-based pricing model. This makes it a strong contender in the landscape of the best data extraction software, especially for teams already invested in the Scrapy ecosystem.

The Zyte platform dashboard allows users to manage API keys and monitor usage. Source: Zyte.

The platform’s core strength lies in its tight integration with Scrapy, the popular open-source web crawling framework. By deploying Scrapy spiders to Scrapy Cloud, developers can manage, schedule, and scale their data pipelines efficiently without maintaining their own servers. For those new to the core technology, you can learn how to web scrape with Python to better understand the foundations.

Key Features & Use Case

Success-Based Billing: A major advantage is that you only pay for successful requests, which minimizes budget waste on blocked or failed attempts.

Scrapy Cloud Integration: Provides a seamless, managed environment for deploying and running Scrapy spiders.

Automated Anti-Bot Handling: Intelligently manages proxies, user agents, and browser rendering to bypass sophisticated anti-scraping measures.

Tiered Pricing by Site Complexity: Costs are tiered based on the difficulty of scraping a target domain.

Pricing: Starts at $25/month for the API; Scrapy Cloud has a free tier.

Pros: Natural fit for Scrapy users, transparent cost estimator, bills only for successful responses.

Cons: Cost forecasting requires estimating site complexity, and achieving the best discounts necessitates a higher monthly spend.

Best For: Teams building production-grade scraping pipelines with Scrapy.
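To see why success-based billing matters, it can help to compare the effective cost of one usable page under both billing styles. The sketch below is plain arithmetic; the price and success rate are hypothetical figures chosen for illustration, not Zyte's actual rates.

```python
def cost_per_usable_page(price_per_request: float, success_rate: float,
                         billed_on_success_only: bool) -> float:
    """Average spend needed to obtain one successful response."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    if billed_on_success_only:
        # Failed attempts cost nothing, so each usable page costs the list price.
        return price_per_request
    # Every attempt is billed, so failures inflate the effective price.
    return price_per_request / success_rate


# Hypothetical figures: $0.002 per request, half of all attempts succeed.
success_only = cost_per_usable_page(0.002, 0.5, billed_on_success_only=True)
all_attempts = cost_per_usable_page(0.002, 0.5, billed_on_success_only=False)
print(f"success-only billing: ${success_only:.4f} per usable page")
print(f"per-attempt billing:  ${all_attempts:.4f} per usable page")
```

The lower the success rate on a difficult target, the wider the gap between the two models becomes.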

3. Bright Data (Web Scraper API)

Bright Data has established itself as a major player in the data extraction space, primarily known for its massive and diverse proxy network. Its Web Scraper API builds on this foundation, offering a powerful, managed service that handles unblocking, JavaScript rendering, and proxy rotation automatically. This makes it an excellent choice for teams that need reliable access to a wide variety of target sites, solidifying its spot among the best data extraction software.

Bright Data’s Web Scraper IDE provides a coding environment for building and testing scrapers. Source: Bright Data.

The platform's key advantage is its integration of an industry-leading proxy network with a robust API that simplifies complex scraping tasks. To get the most out of such a powerful tool, it's crucial to follow certain guidelines, and you can learn more by reviewing some essential web scraping best practices before starting a large-scale project.

Key Features & Use Case

Very Large, Diverse Proxy Network: Leverages residential, datacenter, ISP, and mobile proxies for unparalleled access.

Specialized Scrapers and Dataset Marketplace: Offers pre-built scrapers for common targets and a marketplace for pre-collected datasets.

Web Unlocker-Grade Unblocking: Integrates advanced anti-bot circumvention techniques, including CAPTCHA solving and browser fingerprinting.

Record-Based Pricing: You pay per successful record, with rates quoted per 1,000 records (CPM).

Pricing: Pay-as-you-go starts at $22.50/CPM, with monthly plans offering lower rates. A free trial is available.

Pros: Strong documentation, clear bundles for high-volume record packages, and enterprise-grade SLAs.

Cons: Can be pricier than competitors at smaller volumes, and the definition of a "record" can vary.

Best For: Enterprise teams requiring high-volume, reliable data extraction with extensive proxy coverage.
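Because CPM rates are quoted per 1,000 records, a quick calculation helps when budgeting a job. The helper below is a simple sketch; `record_cost` is a hypothetical name, and the rate used is the pay-as-you-go figure quoted in this section, which may differ from current pricing.

```python
def record_cost(records: int, cpm_usd: float) -> float:
    """Cost in USD when billing is quoted per 1,000 records (CPM)."""
    return records / 1000 * cpm_usd


# 50,000 records at the quoted pay-as-you-go rate of $22.50 per 1,000 records
print(f"${record_cost(50_000, 22.50):,.2f}")  # prints $1,125.00
```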

4. Oxylabs (Web Scraper API)

Oxylabs provides a suite of powerful Scraper APIs designed for large-scale, enterprise-level data extraction projects. It is a formidable player in the best data extraction software market, distinguishing itself with vertical-specific APIs for e-commerce, search engines, and general web data, coupled with a robust proxy infrastructure.

The Oxylabs dashboard displays usage statistics and provides access to different Scraper APIs. Source: Oxylabs.

The platform's core offering is its set of specialized APIs that handle everything from proxy rotation and JavaScript rendering to CAPTCHA solving. Oxylabs' integrated Unblocker technology is particularly effective at overcoming sophisticated anti-bot systems, making it a reliable choice for accessing protected data sources.

Key Features & Use Case

Vertical-Specific Scraper APIs: Offers tailored APIs for e-commerce sites like Amazon and search engines like Google.

Integrated Unblocker: Advanced, AI-driven proxy and unblocking solution to handle dynamic anti-bot measures.

Real-Time Data Extraction: Capable of rendering JavaScript-heavy pages to retrieve dynamic content.

Pay-Per-Result Pricing: Users are only charged for successfully retrieved data.

Pricing: Free trial available. Pricing is pay-as-you-go per successful result, with costs varying by API type.

Pros: Clear per-vertical pricing, very high success rates for difficult targets, excellent 24/7 customer support.

Cons: The feature set and pricing can be more geared toward enterprise users than small projects.

Best For: Enterprise teams requiring reliable, high-volume data from challenging sources like major e-commerce platforms and search engines.

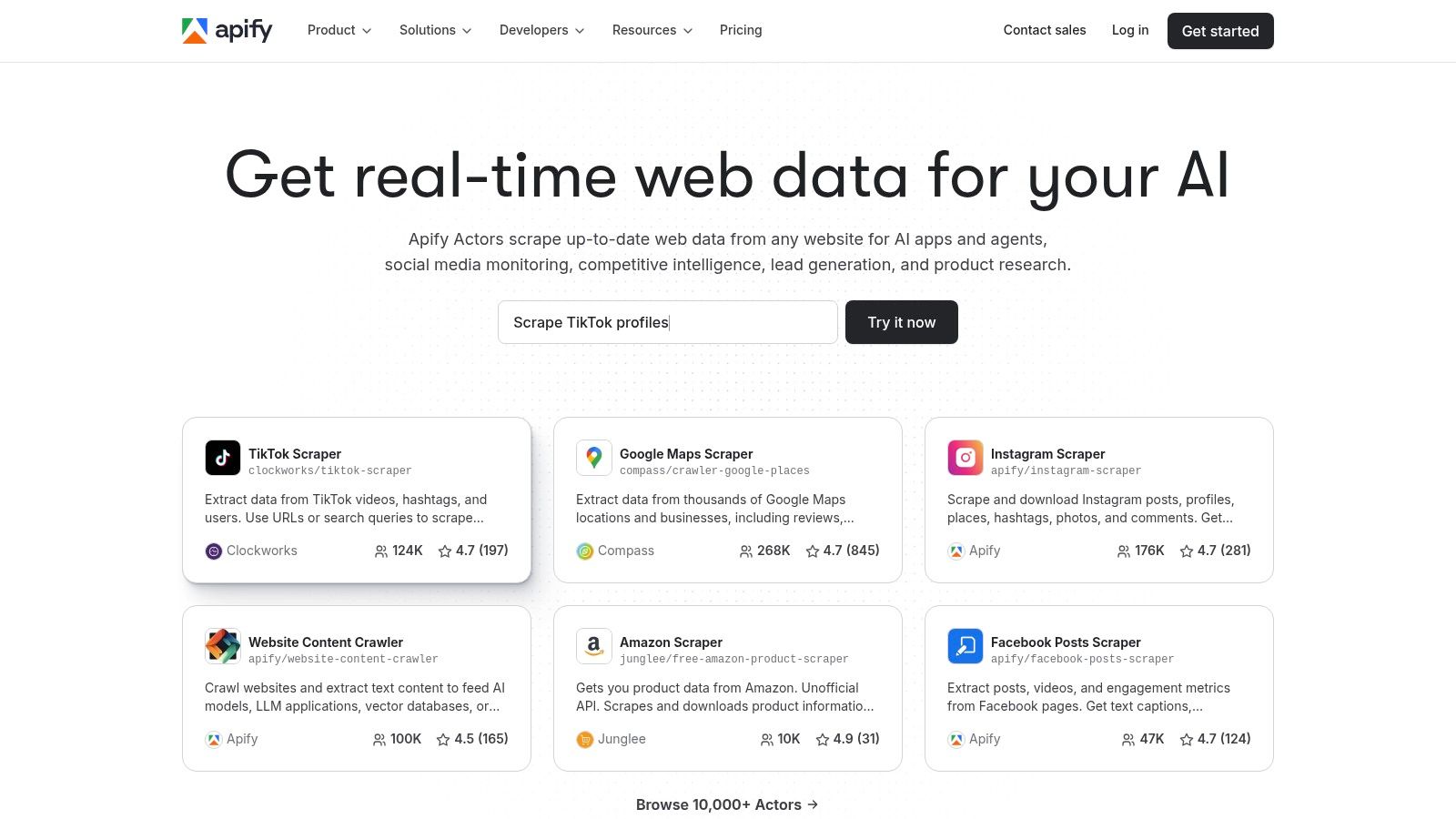

5. Apify (Platform + Apify Store)

Apify is a full-fledged cloud platform that democratizes web scraping through its unique app-store model, the Apify Store. It provides a comprehensive ecosystem where developers can run custom scrapers or leverage thousands of pre-built crawlers, known as "Actors." This marketplace approach makes Apify a powerful choice among the best data extraction software, especially for teams needing a quick, off-the-shelf solution.

The Apify Store features thousands of pre-built “Actors” for scraping popular websites. Source: Apify.

The platform's core value lies in its speed to production. For those interested in the fundamental techniques behind these tools, understanding how to extract data from websites provides a solid foundation for leveraging platforms like Apify more effectively.

Key Features & Use Case

Apify Store (Marketplace): An extensive library of thousands of pre-built scrapers (Actors) for a wide range of websites.

Integrated Scraping Platform: Provides a complete cloud environment with compute units, integrated proxies, data storage, and scheduling.

Developer SDKs: Offers SDKs for building custom Actors, giving developers flexibility.

Flexible Pricing Models: Users can pay for platform usage via compute units or pay per result.

Pricing: Features a generous free tier with $5 in monthly platform credits. Paid plans start at $49/month.

Pros: Extremely fast to get started using pre-built Actors, a generous free tier, and a comprehensive all-in-one platform.

Cons: The pricing models can be complex to forecast at scale, and the quality of third-party Actors can vary.

Best For: Teams who need a quick solution for common scraping targets or a managed cloud platform to run custom crawlers.

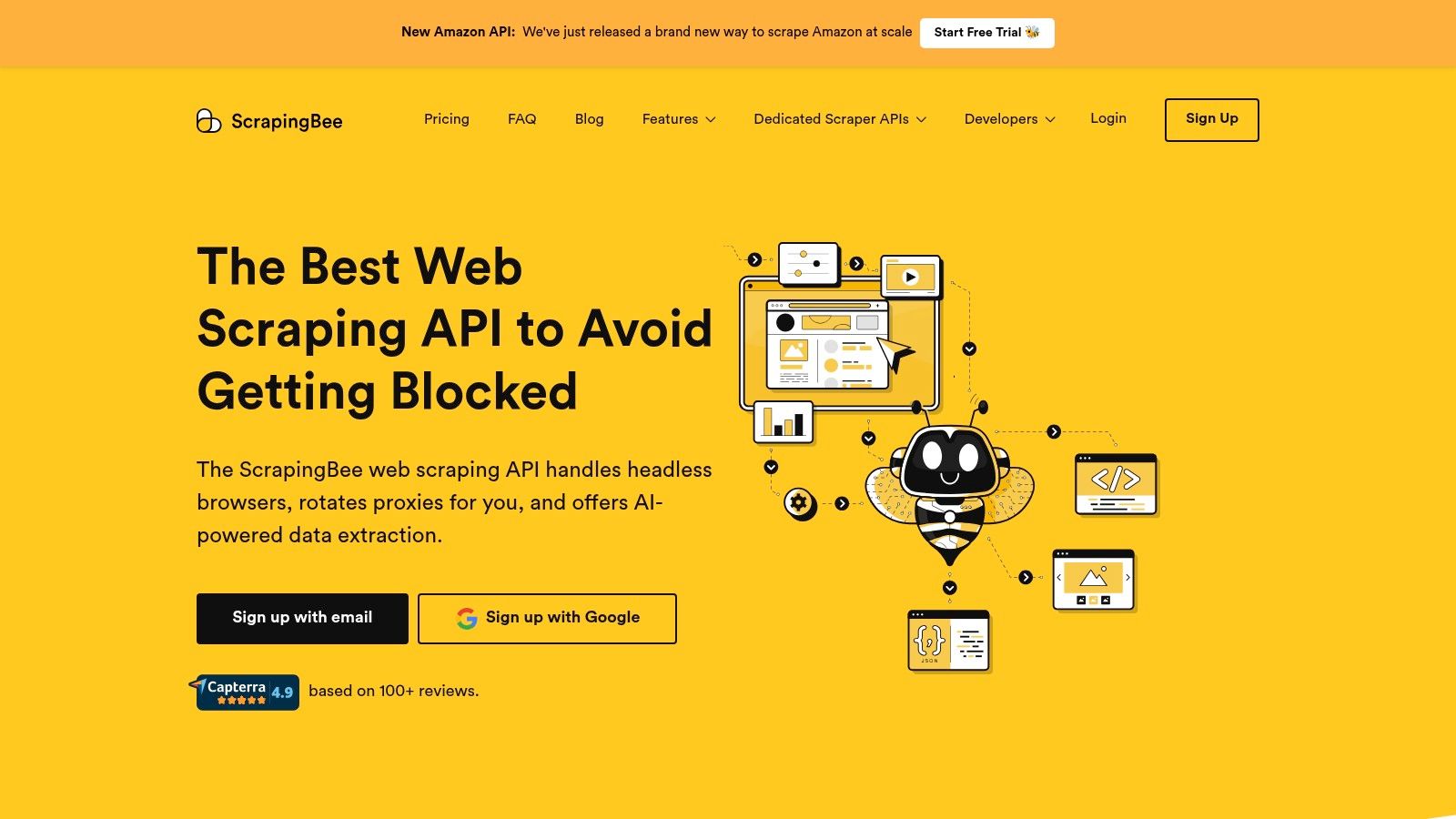

6. ScrapingBee (Web Scraping API)

ScrapingBee delivers a developer-friendly web scraping API designed to handle common extraction challenges like JavaScript rendering and proxy rotation right out of the box. It positions itself as an accessible and straightforward solution, making it a powerful choice among the best data extraction software for developers who need to get started quickly.

ScrapingBee’s documentation provides clear examples for using its web scraping API. Source: ScrapingBee.

The API's core value is its simplicity. By managing headless browsers and a large pool of premium rotating proxies, ScrapingBee allows users to focus on parsing data rather than bypassing anti-bot measures. With a generous free trial of 1,000 API calls and clear, credit-based monthly plans, it offers a predictable and low-risk entry point.

Key Features & Use Case

Built-in JS Rendering & Proxy Rotation: Automatically handles modern JavaScript-heavy websites and rotates proxies.

Geotargeting and Screenshot Capture: Allows requests from specific countries and captures full-page screenshots.

Extraction Rules: Lets you define CSS selectors directly in the API call to extract specific data elements.

Generous Free Trial: Offers 1,000 free API calls for developers to test the service.

Pricing: Starts at $49/month for 100,000 credits. A free trial with 1,000 API calls is available.

Pros: Clear, predictable monthly credits and easy to trial, good price-to-feature ratio for startups.

Cons: Heavily protected anti-bot sites may still require tuning, and regional IP coverage depends on the selected pricing tier.

Best For: Developers and startups looking for a simple, all-in-one API to handle JavaScript rendering and proxy management.
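The extraction rules feature described above can be sketched as a request URL that carries CSS selectors alongside the target page. The endpoint and the `api_key`, `url`, `render_js`, and `extract_rules` parameter names reflect our understanding of ScrapingBee's public documentation and should be treated as assumptions to verify; the helper name `build_scrapingbee_url` and the selectors are purely illustrative.

```python
import json
from urllib.parse import urlencode

# Assumption: endpoint taken from public docs; confirm before use.
SCRAPINGBEE_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_scrapingbee_url(api_key: str, target_url: str, rules: dict,
                          render_js: bool = True) -> str:
    """Return a GET URL that asks the API to apply CSS-selector rules."""
    params = {
        "api_key": api_key,
        "url": target_url,
        "render_js": "true" if render_js else "false",
        # Rules are sent as a JSON string mapping output field -> CSS selector.
        "extract_rules": json.dumps(rules),
    }
    return f"{SCRAPINGBEE_ENDPOINT}?{urlencode(params)}"


request_url = build_scrapingbee_url(
    "YOUR_API_KEY",
    "https://example.com",
    {"title": "h1", "first_link": "a"},  # hypothetical selectors
)
print(request_url)
```

Sending selectors with the request means the service returns only the fields you asked for, so there is no HTML parsing step on your side.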

7. ScraperAPI

ScraperAPI provides a straightforward API endpoint designed to simplify the web scraping process by handling proxies, browsers, and CAPTCHAs. It allows developers to make a single API call with their target URL and receive the raw HTML in return. This focus on simplicity and reliability makes it a strong choice among the best data extraction software, particularly for developers who need to get started quickly.

The ScraperAPI dashboard provides a clear overview of API usage and success rates. Source: ScraperAPI.

The platform’s core value lies in its managed proxy network and anti-bot circumvention systems. ScraperAPI automatically rotates through millions of residential, mobile, and datacenter IPs, retries failed requests, and can render JavaScript-heavy pages on demand. For larger-scale operations, it offers asynchronous workflows. This comprehensive approach is well-documented, and you can explore more options in this 2025 web scraping API guide to compare similar tools.

Key Features & Use Case

Automatic Unblocking: Integrates proxy rotation, browser rendering, and CAPTCHA solving.

Extensive IP Pool: Utilizes a vast network of residential, mobile, and datacenter proxies with geotargeting.

Asynchronous Scraping: Supports a separate async endpoint for high-volume jobs with webhooks.

Structured Data Endpoints: Offers specific endpoints for scraping structured data from popular sites.

Pricing: Free tier with 1,000 credits per month. Paid plans start at $49/month for 100,000 credits.

Pros: Generous free tier, competitive pricing for mid-volume usage, simple and well-documented API.

Cons: Geotargeting options are limited on lower-tier plans, and credit usage can be unpredictable for heavily protected sites.

Best For: Developers and small teams needing an easy-to-implement API to handle proxy rotation and anti-bot challenges.

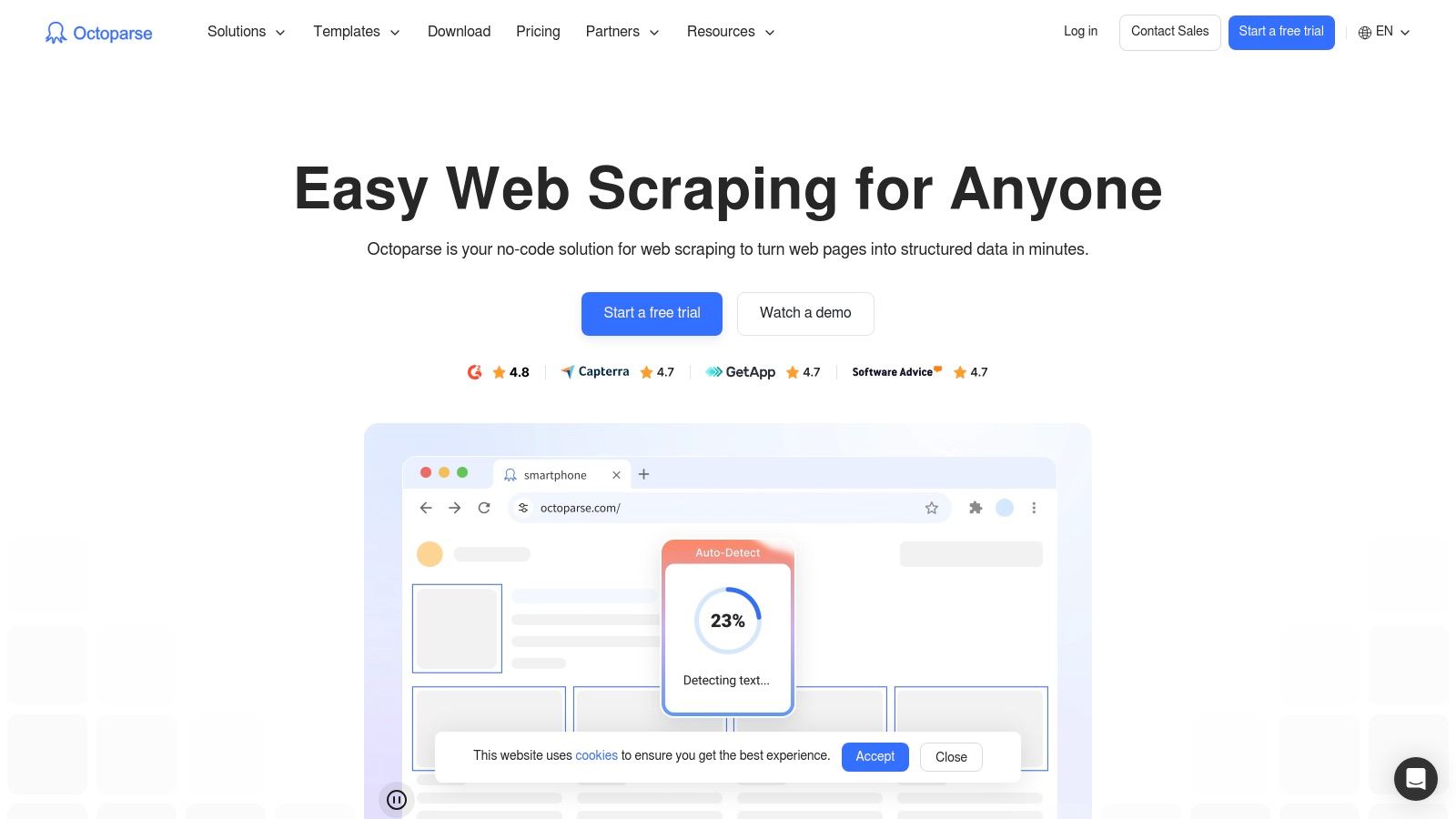

8. Octoparse

Octoparse democratizes data collection by offering a powerful no-code visual interface, making it a top choice among the best data extraction software for users without a programming background. It empowers business analysts, marketers, and researchers to build sophisticated scraping agents through a point-and-click workflow.

Octoparse’s visual interface allows users to build crawlers by clicking on webpage elements. Source: Octoparse.

The core strength of Octoparse is its accessibility. Users can select from a library of pre-built templates for common websites or create custom "tasks" by visually selecting data points on a target page. The software automatically generates the underlying logic for navigating pagination, handling infinite scroll, and extracting structured data.

Key Features & Use Case

Visual Workflow Builder: A point-and-click interface that allows non-developers to build crawlers without writing any code.

Cloud & Desktop Operation: Build and test scrapers on the desktop application and then upload them to the cloud for scheduled runs.

Pre-built Templates: A library of ready-to-use templates for popular sites like Amazon, Yelp, and Twitter.

Managed Data Services: An optional service where the Octoparse team builds and maintains custom crawlers for clients.

Pricing: Offers a limited free plan; paid plans start at $89/month (or $75/month with annual billing).

Pros: Very easy for non-programmers to get started, offers a full managed service.

Cons: Power users may find the visual interface limiting compared to code-first APIs; CAPTCHA solving is a paid add-on.

Best For: Marketing teams, business analysts, and academic researchers who need to extract web data without writing code.

9. ParseHub

ParseHub stands as a long-standing no-code solution in the web scraping market, offering a visual, point-and-click interface that appeals to non-developers. It enables users like market researchers and e-commerce managers to build data extraction projects without writing a single line of code, making it a valuable tool in the broader landscape of the best data extraction software.

ParseHub's desktop app provides a visual environment for creating and managing scraping projects. Source: ParseHub.

The platform's strength is its simplicity; you can click on the data elements you want to extract, and ParseHub’s engine learns the page structure. It handles common challenges like pagination, infinite scroll, and navigating through forms and dropdowns. Once a project is built, it runs on ParseHub's cloud infrastructure.

Key Features & Use Case

Visual Point-and-Click Interface: Allows non-technical users to build scrapers by simply clicking on the desired data fields.

Cloud-Based Execution: Projects are run on ParseHub's servers, which includes scheduling and IP rotation.

Handles Complex Interactions: Capable of navigating sites with dropdowns, logins, tabs, and infinite scroll.

API & Webhook Access: Provides an API to run projects and retrieve data programmatically.

Pricing: A free plan is available with strict limits; paid plans start at $169/month.

Pros: Very easy to learn for non-developers, handles complex JavaScript-heavy sites well, provides a REST API.

Cons: The free plan is highly restrictive, and performance can be slow on large jobs compared to code-based solutions.

Best For: Business analysts, marketers, and researchers who need to extract data from structured websites without an engineering team.

10. Diffbot

Diffbot elevates data extraction from simple HTML parsing to intelligent, AI-driven entity recognition. Instead of just scraping text, its API automatically identifies and structures complex data types like articles, products, and people from any URL. This makes Diffbot one of the best data extraction software choices for teams that need to understand the meaning of web data, turning unstructured web pages into a structured knowledge base.

Diffbot's platform can automatically classify page types and extract structured data into a knowledge graph. Source: Diffbot.

The platform's core power lies in its automated page-type classification and natural language processing (NLP). You provide a URL, and Diffbot's AI determines if it's a product page or an event listing, then returns a clean JSON object. Beyond its Extract API, Diffbot offers a massive, pre-built Knowledge Graph, allowing users to enrich their extracted data with contextual information.

Key Features & Use Case

Automatic Page Classification: AI identifies page types (article, product, etc.) and applies a relevant schema for structured extraction.

Knowledge Graph Enrichment: Augment extracted data by linking entities to Diffbot’s vast commercial database.

NLP and Entity Recognition: Goes beyond tags to understand and extract entities, facts, and relationships from unstructured text.

Credit-Based Usage: A flexible, product-agnostic credit system allows you to use credits across different APIs.

Pricing: Free plan with 10,000 initial credits; paid plans start at $299/month for 250,000 credits.

Pros: Powerful NLP for complex or messy pages, generous number of starting credits.

Cons: Pricier than lightweight scrapers; may be overkill for small, single-site projects.

Best For: Organizations building sophisticated data applications that require entity enrichment and automatic data structuring.
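Automatic page classification means your code can branch on the detected page type instead of maintaining per-site selectors. The sketch below assumes the Analyze endpoint URL and a response shape with top-level `type` and `objects` keys, plus `title`, `offerPrice`, and `author` fields, based on our reading of Diffbot's public docs; treat all of these as assumptions. The sample response is hypothetical and trimmed.

```python
from urllib.parse import urlencode

# Assumption: endpoint taken from public docs; confirm before use.
ANALYZE_ENDPOINT = "https://api.diffbot.com/v3/analyze"


def build_analyze_url(token: str, page_url: str) -> str:
    """Return the GET URL for classifying and extracting a single page."""
    return f"{ANALYZE_ENDPOINT}?{urlencode({'token': token, 'url': page_url})}"


def summarize(response: dict) -> str:
    """Route a classified page to type-specific handling."""
    page_type = response.get("type", "other")
    objects = response.get("objects", [])
    first = objects[0] if objects else {}
    if page_type == "product":
        return f"product: {first.get('title')} ({first.get('offerPrice')})"
    if page_type == "article":
        return f"article: {first.get('title')} by {first.get('author')}"
    return f"unhandled page type: {page_type}"


# Hypothetical, trimmed response used only to exercise the routing logic.
sample = {"type": "product", "objects": [{"title": "Desk Lamp", "offerPrice": "$19.99"}]}
print(summarize(sample))  # prints: product: Desk Lamp ($19.99)
```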

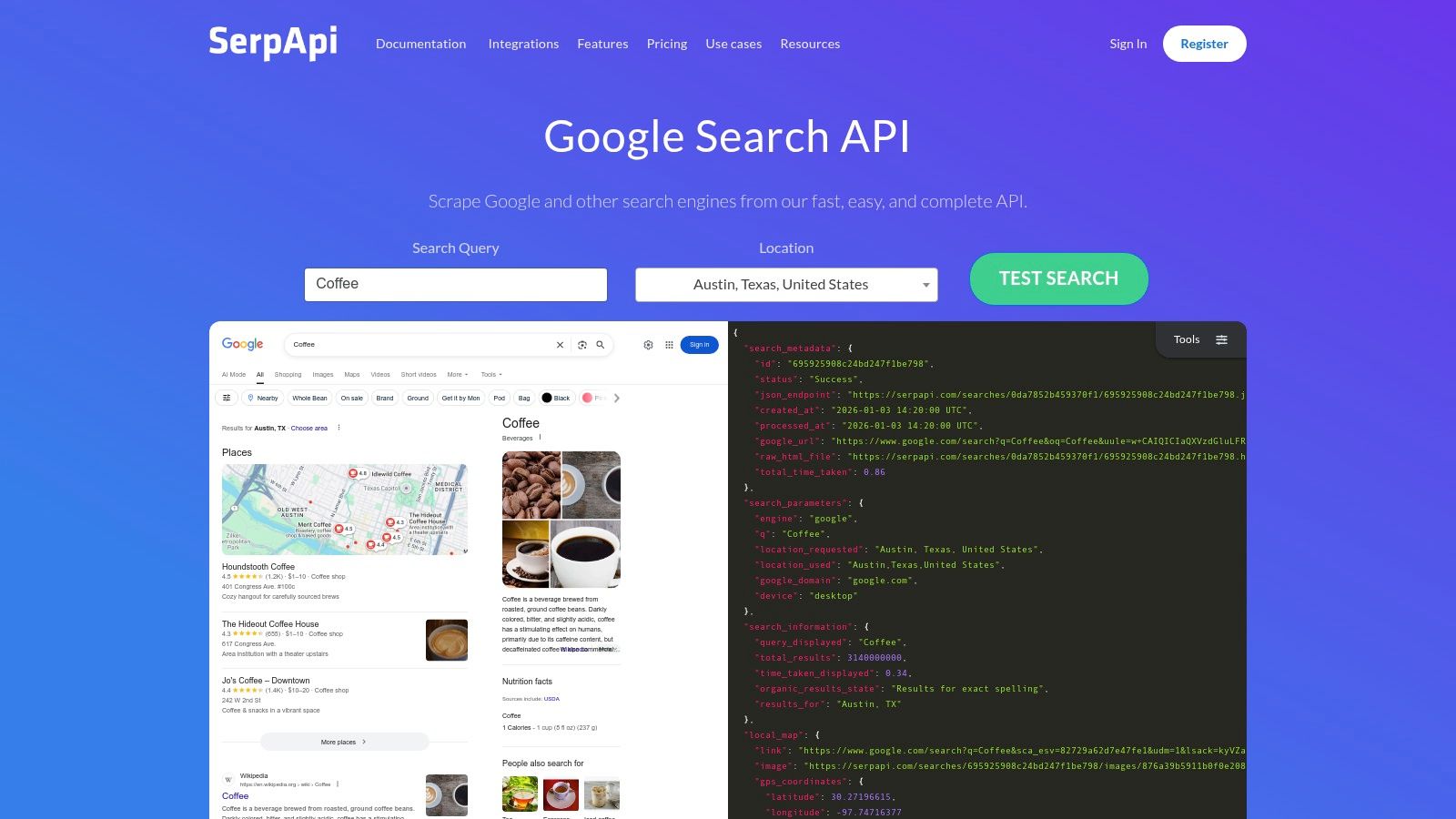

11. SerpApi (Search Engines Data Extraction)

SerpApi provides a specialized API designed exclusively for extracting structured data from search engine results pages (SERPs). Instead of a general-purpose web crawler, it offers a real-time solution for getting clean JSON output from Google, Bing, and others. This focus makes it a powerful piece of data extraction software for anyone needing reliable SERP data for SEO, market research, or competitive analysis.

The SerpApi Playground allows users to test queries and view the resulting structured JSON. Source: SerpApi.

The platform’s strength is its simplicity and reliability. It handles all the underlying complexities of proxies, CAPTCHA solving, and parsing. When pulling large volumes of search data, understanding the nuances between semantic search vs keyword search can help refine queries and improve the relevance of the extracted results.

Key Features & Use Case

- Structured SERP JSON: Delivers clean, predictable JSON output for various search engine verticals, including organic, local, and shopping results.

- High Reliability & SLA: Guarantees a 99.95%+ success rate, making it suitable for production systems.

- Multi-Engine Support: Provides access to a wide range of search engines beyond Google, including Bing, DuckDuckGo, and Yahoo.

- Pay-for-Success Model: Users are only billed for successful searches, which eliminates costs from failed requests.

Here's a quick Python snippet to fetch Google search results using SerpApi:

from serpapi import GoogleSearch

# Search parameters: the query string and your SerpApi key
params = {
    "q": "best data extraction software",
    "api_key": "YOUR_API_KEY"
}

# Run the search and parse the JSON response into a dictionary
search = GoogleSearch(params)
results = search.get_dict()
print(results["organic_results"])

- Pricing: Starts with a free plan for 100 searches/month. Paid plans begin at $50/month for 5,000 searches.

- Pros: Extremely fast and reliable for SERP data, simple API, bills only for successful searches.

- Cons: Highly specialized for search engines, not a general-purpose web crawler; large-scale plans can become costly.

- Best For: SEO agencies, marketing teams, and developers needing real-time, structured search engine data.
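Once the `results` dictionary from the snippet above is in hand, most pipelines only need a few fields per result. The helper below is a small sketch that works on any dictionary of that shape; `top_links` is a hypothetical name, the `position`, `title`, and `link` keys are assumed from typical SerpApi output, and the sample data is made up so the example runs without an API key.

```python
def top_links(results: dict, limit: int = 5) -> list[tuple[int, str, str]]:
    """Return (position, title, link) for the first `limit` organic results."""
    rows = []
    # .get() avoids a KeyError when a query returns no organic results.
    for item in results.get("organic_results", [])[:limit]:
        rows.append((item.get("position"), item.get("title"), item.get("link")))
    return rows


# Hypothetical response fragment standing in for search.get_dict()
sample = {
    "organic_results": [
        {"position": 1, "title": "Example", "link": "https://example.com"},
    ]
}
print(top_links(sample))  # prints [(1, 'Example', 'https://example.com')]
print(top_links({}))      # prints []
```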

12. Capterra – Web Scraping Software Category

While not a data extraction tool itself, Capterra is an indispensable resource for researching and shortlisting the best data extraction software on the market. As a Gartner-owned software directory, it provides a comprehensive overview of available vendors, supplemented with verified user reviews and detailed feature comparisons. This makes it an essential first stop for teams evaluating their options.

Capterra’s web scraping category page helps users compare tools based on ratings and features. Source: Capterra.

The platform’s value lies in its aggregation of user-generated feedback. Instead of visiting dozens of vendor websites, you can use Capterra’s comparison tools to see how different products stack up side-by-side. For developers and managers, this significantly streamlines the initial discovery and vetting phase.

Key Features & Use Case

Curated Software Category: A dedicated section for web scraping software with powerful filters.

Verified User Reviews: Provides authentic feedback from real users on the pros and cons of each platform.

Software Comparison Tool: Allows you to directly compare features, pricing, and ratings for multiple products.

Buyers' Guides: Offers articles and resources to help educate teams on what to look for when purchasing data extraction solutions.

Pricing: Free to use for research and comparison.

Pros: Broad market overview with user reviews, helpful filtering by features, and availability of free trials.

Cons: Sponsored listings can influence the order of results; it's a directory only, so purchases happen on external sites.

Best For: Teams in the initial research phase looking to compare a wide range of web scraping tools.

Visit Capterra

Comparison of Top Data Extraction Tools

| Product | Best For | Approach | Key Feature | Starting Price |
|---|---|---|---|---|
| CrawlKit | Developers & AI Teams | API-First | No-infrastructure data platform | Free tier, then pay-as-you-go |
| Zyte | Scrapy Users | API + Platform | Success-based billing | $25/month |
| Bright Data | Enterprise Scale | API + Proxies | Massive proxy network | Pay-as-you-go |
| Oxylabs | E-commerce & SERP | API-First | Vertical-specific scrapers | Free trial, then pay-per-result |
| Apify | Rapid Deployment | Platform + Store | Pre-built "Actors" | Free tier, then $49/month |
| ScrapingBee | Startups & SMBs | API-First | Simple JS rendering | Free trial, then $49/month |
| ScraperAPI | Dev Teams | API-First | Automatic unblocking | Free tier, then $49/month |
| Octoparse | Non-Developers | No-Code GUI | Visual workflow builder | Free tier, then $89/month |
| ParseHub | Non-Developers | No-Code GUI | Visual point-and-click | Free tier, then $169/month |
| Diffbot | AI/Data Science | AI-Powered API | Knowledge Graph | Free tier, then $299/month |
| SerpApi | SEO & Marketing | Specialized API | Structured SERP data | Free tier, then $50/month |
| Capterra | Researchers | Directory | User reviews & comparisons | Free to use |

Making Your Final Decision

Navigating the landscape of the best data extraction software can feel overwhelming, but the right tool is the one that aligns with your project's needs, technical expertise, and budget. Whether you need a developer-centric API like CrawlKit or a no-code platform like Octoparse, the key is to choose strategically.

Before you commit, run through this quick checklist:

- Technical Skill Level: Are you a developer comfortable with APIs (CrawlKit, Zyte) or do you need a visual interface (ParseHub, Octoparse)?

- Scalability Requirements: Do you need to run thousands of concurrent requests or are your needs smaller? API-first platforms generally offer more seamless scaling.

- Target Data Complexity: Are you scraping simple HTML or dynamic, JavaScript-heavy sites that require advanced anti-bot circumvention?

- Data Structure Needs: Do you need clean, structured JSON, or is raw HTML acceptable? Tools like Diffbot and CrawlKit excel at providing structured data out of the box, which is critical for AI-driven market research.

- Budget and Pricing Model: Do you prefer pay-as-you-go or a fixed monthly subscription? Factor in costs of proxies and CAPTCHA solving.

- Support and Documentation: How important is community support, detailed API documentation, and direct customer service to your team?

We highly recommend leveraging the free trials offered by most of these providers. Set up a small proof-of-concept to see how each tool performs on a representative target. This hands-on experience is invaluable.

Frequently Asked Questions (FAQ)

What is the best software for data extraction?

The "best" software depends on your needs. For developers who want a reliable, API-first platform without managing infrastructure, CrawlKit is a top choice. For non-technical users, a visual tool like Octoparse is often best. For large enterprises already using Scrapy, Zyte is a natural fit.

How do I choose a data extraction tool?

Consider four key factors: 1) your technical skill level (API vs. no-code), 2) the complexity of your target websites (static HTML vs. dynamic JavaScript), 3) your budget and preferred pricing model (subscription vs. pay-as-you-go), and 4) your scalability needs (small project vs. large-scale, ongoing extraction).

What is the difference between web scraping and data extraction?

Web scraping is the automated process of fetching data from websites (usually the raw HTML). Data extraction is the subsequent step of parsing that raw data to pull out specific, structured information (like product names, prices, or contact details) and organizing it into a usable format like JSON or a spreadsheet.
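The distinction is easier to see in code. In the sketch below, the raw HTML string stands in for what a scraper would fetch, and the parser performs the extraction step, turning it into structured JSON. The markup, class names, and `ProductParser` class are invented for illustration, and only the Python standard library is used.

```python
import json
from html.parser import HTMLParser

# Stand-in for the raw HTML that the scraping step would fetch.
RAW_HTML = """
<ul>
  <li class="product"><span class="name">Desk Lamp</span><span class="price">$19.99</span></li>
  <li class="product"><span class="name">Monitor Stand</span><span class="price">$34.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect name/price pairs from <span class="name|price"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.products: list[dict] = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.products.append({})  # start a new record
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.products:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = ProductParser()
parser.feed(RAW_HTML)
print(json.dumps(parser.products, indent=2))
```

Many of the APIs in this guide perform both steps for you and return the structured result directly.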

Can I extract data from any website?

Technically, you can attempt to extract data from most public websites. However, many sites employ anti-bot measures to prevent scraping. Furthermore, you must always respect a website's robots.txt file, terms of service, and relevant data privacy laws like GDPR and CCPA. Ethical scraping is crucial.
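Checking robots.txt can be automated with Python's standard library. The sketch below parses a hypothetical robots.txt inline so it runs offline; in practice you would load the file from the target site. The `is_allowed` helper and the `my-crawler` user agent are illustrative names.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, fetch it from the target site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def is_allowed(url: str, user_agent: str = "my-crawler") -> bool:
    """Return True if the parsed robots.txt rules permit fetching `url`."""
    return rules.can_fetch(user_agent, url)


print(is_allowed("https://example.com/products"))      # prints True
print(is_allowed("https://example.com/private/data"))  # prints False
```

A robots.txt check covers only one of the obligations mentioned above; terms of service and privacy law still need separate review.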

Is data extraction software legal?

Extracting publicly available data is generally considered legal in the United States. In hiQ Labs v. LinkedIn, the Ninth Circuit held that scraping publicly accessible pages likely does not violate the Computer Fraud and Abuse Act. However, the legality can become complex. You must not scrape private data, infringe on copyrights, or violate a website's terms of service in a way that causes harm. Always consult with a legal professional for specific use cases.

Do I need to know how to code to use data extraction software?

Not necessarily. No-code tools like Octoparse and ParseHub are designed for non-developers and use a visual, point-and-click interface. However, for more complex, scalable, or customized projects, developer-focused API tools like CrawlKit, Bright Data, or ScraperAPI offer greater flexibility and power.

What are the main challenges in data extraction?

The primary challenges include getting blocked by anti-bot systems, handling CAPTCHAs, managing rotating proxies, parsing websites with complex or frequently changing layouts, and rendering dynamic JavaScript-heavy content. Many of the best data extraction software solutions are designed specifically to solve these problems.
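If you handle blocking yourself rather than delegating it to a managed API, retrying with exponential backoff is a common first line of defense. The following is a generic sketch, not any vendor's implementation: `backoff_delays` and `fetch_with_retries` are hypothetical helpers, and `fetch` is whatever callable performs your request.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def backoff_delays(retries: int, base: float = 1.0, cap: float = 30.0) -> list[float]:
    """Delays (seconds) before each retry: base * 2**n, capped at `cap`."""
    return [min(cap, base * 2 ** n) for n in range(retries)]


def fetch_with_retries(fetch: Callable[[], T], retries: int = 4, base: float = 1.0,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fetch`, retrying up to `retries` times with growing pauses."""
    for delay in backoff_delays(retries, base):
        try:
            return fetch()
        except Exception:  # broad on purpose in this sketch; narrow it in real code
            # Jitter spreads retries out so clients do not retry in lockstep.
            sleep(delay + random.uniform(0, delay / 2))
    return fetch()  # final attempt; let any exception propagate
```

Passing `sleep` as a parameter keeps the function testable, since a test can substitute a no-op instead of actually waiting.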

How much does data extraction software cost?

Costs vary widely. Many tools offer a free tier with limited usage. Paid plans can range from around $50 per month for startups and small businesses to thousands of dollars per month for enterprise-level services with high-volume needs, dedicated support, and advanced features.

Next Steps

Ready to skip the infrastructure and start building? CrawlKit offers a developer-first, API-centric platform that abstracts away the complexities of proxies, browser management, and anti-bot systems.

Get structured, LLM-ready data with a simple API call.